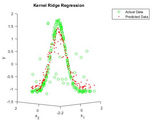

Kernel Ridge Regression

Refer to Section 6.2.2, "Kernel Ridge Regression", in An Introduction to Support Vector Machines and Other Kernel-based Learning Methods, by Nello Cristianini and John Shawe-Taylor.

Refer to Section 7.3.2 of Kernel Methods for Pattern Analysis, by John Shawe-Taylor (University of Southampton) and Nello Cristianini (University of California at Davis).

Kernel ridge regression (KRR) combines Ridge Regression (linear least squares with l2-norm regularization) with the kernel trick. It thus learns a linear function in the space induced by the respective kernel and the data. For non-linear kernels, this corresponds to a non-linear function in the original space.
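The idea can be illustrated with a minimal NumPy sketch of the dual (kernelized) ridge solution, in which the coefficients solve (K + λI)α = y and predictions are kernel-weighted sums over the training points. This is not the code from this submission: the RBF kernel, the helper names `rbf_kernel`, `krr_fit`, and `krr_predict`, and the values of `lam` and `gamma` are all assumptions chosen for illustration.

```python
import numpy as np

def rbf_kernel(A, B, gamma=1.0):
    """Gaussian (RBF) kernel matrix between the rows of A and the rows of B."""
    # Squared Euclidean distance between every row of A and every row of B.
    sq_dist = (
        np.sum(A ** 2, axis=1)[:, None]
        + np.sum(B ** 2, axis=1)[None, :]
        - 2.0 * A @ B.T
    )
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))

def krr_fit(X, y, lam=1e-3, gamma=1.0):
    """Return dual coefficients alpha solving (K + lam * I) alpha = y."""
    K = rbf_kernel(X, X, gamma)
    return np.linalg.solve(K + lam * np.eye(len(X)), y)

def krr_predict(X_train, alpha, X_new, gamma=1.0):
    """Predict f(x) = sum_i alpha_i * k(x_i, x) for each row x of X_new."""
    return rbf_kernel(X_new, X_train, gamma) @ alpha

# Toy data (assumed for illustration): noise-free samples of sin(x).
X = np.linspace(0.0, 2.0 * np.pi, 40)[:, None]
y = np.sin(X).ravel()

alpha = krr_fit(X, y, lam=1e-3, gamma=1.0)
X_test = np.array([[1.0], [2.5]])
y_pred = krr_predict(X, alpha, X_test, gamma=1.0)
```

The model is linear in the kernel-induced feature space (it is a weighted sum of kernel evaluations) yet non-linear in `x` itself, and fitting reduces to a single linear solve.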

The model learned by KRR has the same form as the one learned by support vector regression (SVR). The two differ in their loss functions: KRR uses squared-error loss, whereas SVR uses ε-insensitive loss, both combined with l2 regularization. Unlike SVR, KRR can be fitted in closed form, which is typically faster for medium-sized datasets. On the other hand, the learned KRR model is non-sparse and is therefore slower at prediction time than SVR, which learns a sparse model for ε > 0. [http://scikit-learn.org/stable/modules/kernel_ridge.html]
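The difference between the two losses can be seen in a small sketch. The function names, the residual values, and `eps=0.1` are assumptions chosen for illustration and do not come from this submission or from scikit-learn.

```python
import numpy as np

def squared_loss(residual):
    """Squared-error loss used by KRR: every non-zero residual is penalized."""
    return residual ** 2

def eps_insensitive_loss(residual, eps=0.1):
    """Epsilon-insensitive loss used by SVR: residuals inside the tube cost nothing."""
    return np.maximum(np.abs(residual) - eps, 0.0)

# Residuals y - f(x) for five hypothetical training points.
residuals = np.array([-0.5, -0.05, 0.0, 0.05, 0.5])

sq = squared_loss(residuals)
ei = eps_insensitive_loss(residuals, eps=0.1)
```

Points whose residual lies within ε of zero incur no ε-insensitive loss, which is why they can drop out of the SVR solution. Under squared loss every point with a non-zero residual contributes, so the KRR solution generally involves all training points.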

Reference: (for SVR) https://in.mathworks.com/matlabcentral/fileexchange/63060-support-vector-regression

Cite As

Bhartendu (2026). Kernel Ridge Regression (https://it.mathworks.com/matlabcentral/fileexchange/63122-kernel-ridge-regression), MATLAB Central File Exchange. Retrieved .

Platform Compatibility

Windows, macOS, Linux
