DeepAction

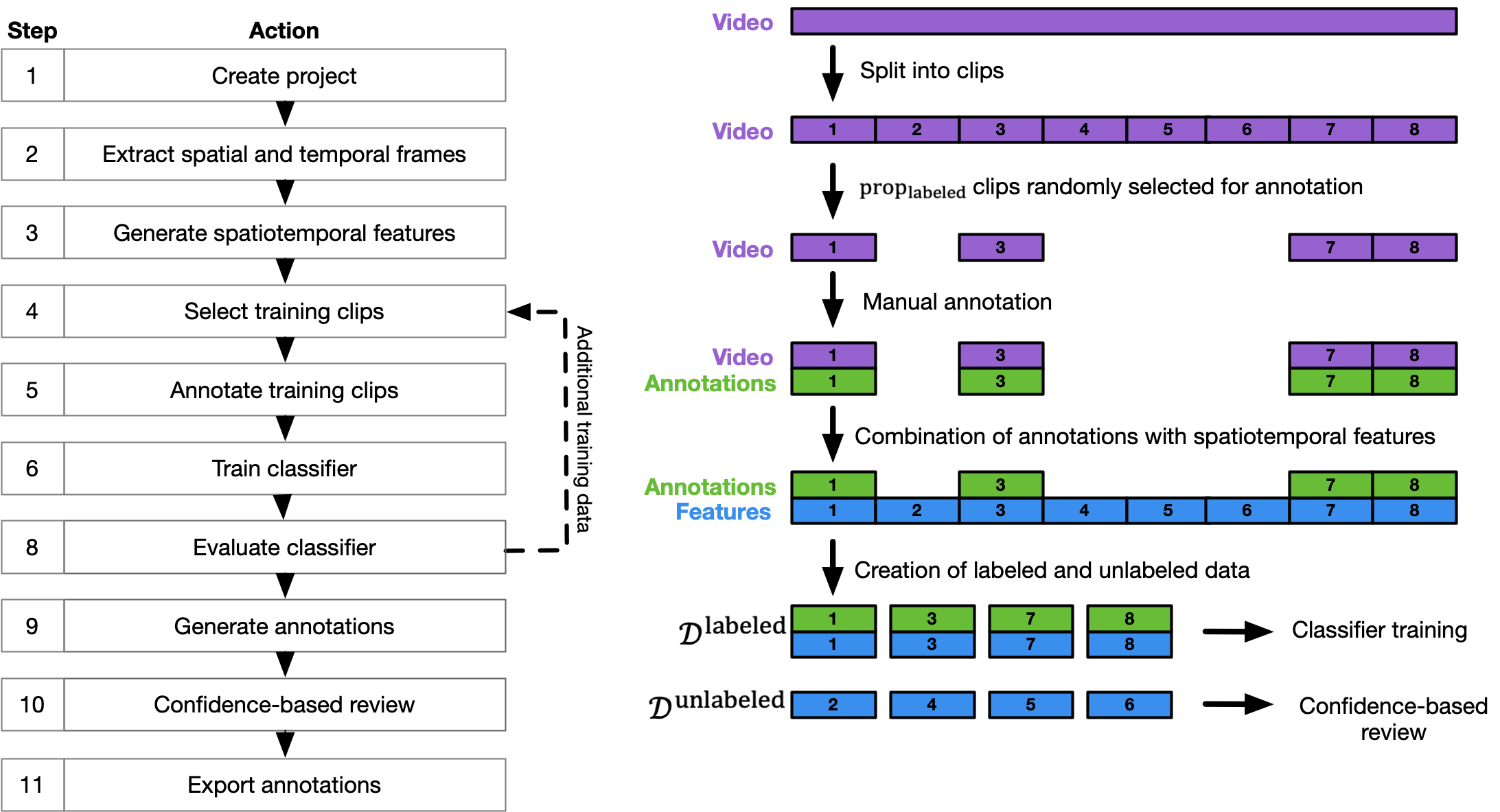

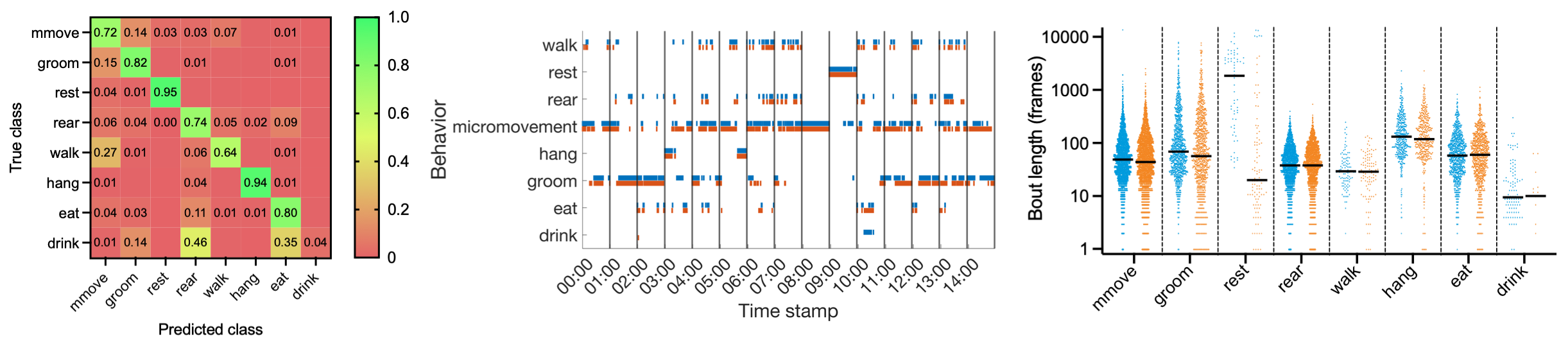

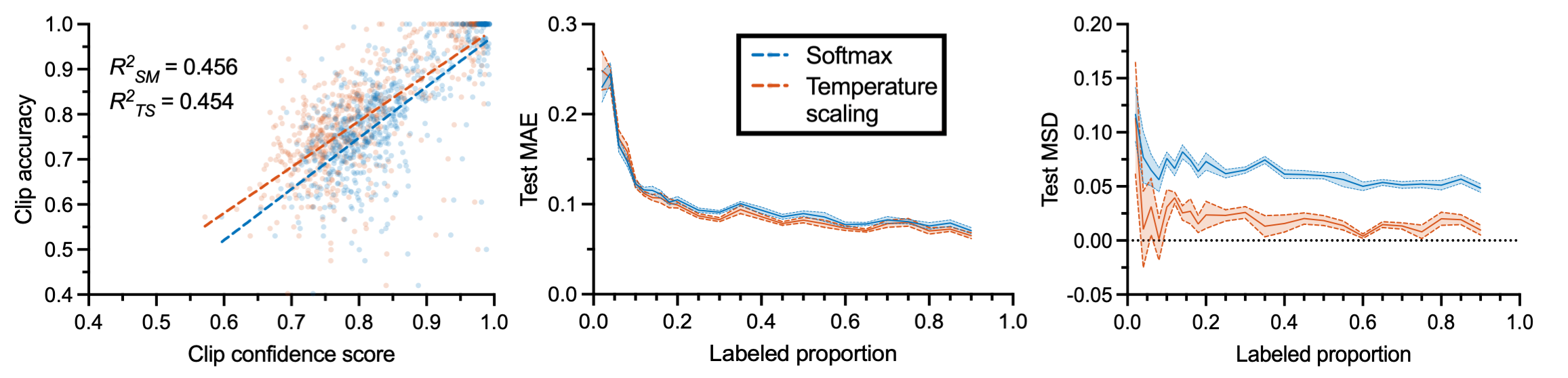

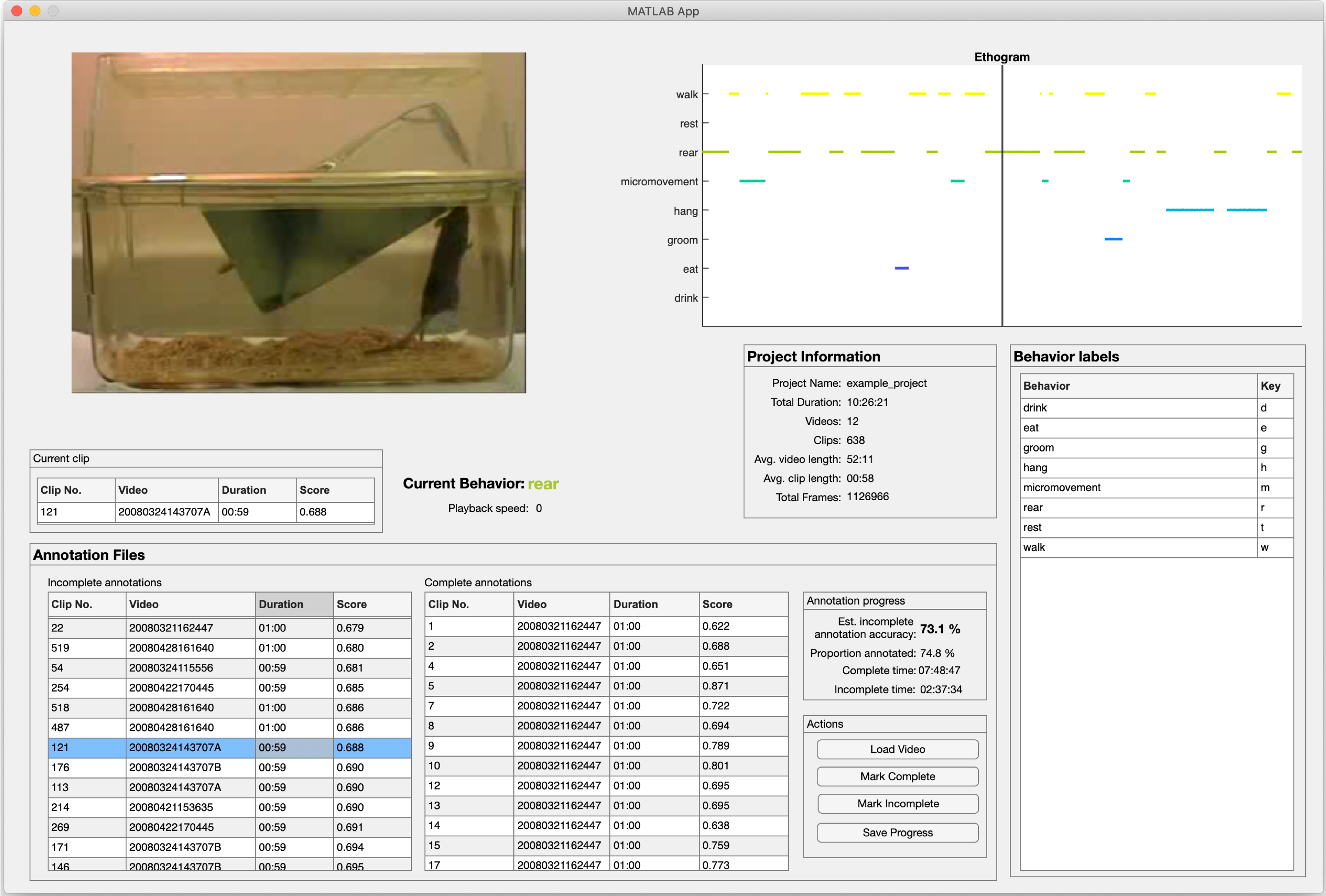

This repository provides the code and annotation GUI for DeepAction, a MATLAB toolbox for automatic annotation of animal behavior in video, described in the preprint. The method extracts features from video and uses them to train a bidirectional LSTM classifier which, in addition to predicting behavior, generates a confidence score for each predicted label. These confidence scores allow ambiguous annotations to be selectively reviewed and corrected, without spending review time on annotations the classifier is already confident about.

Included in this repository are:

- The code for the workflow

- The MATLAB app GUIs

- Project method, configuration file, and GUI documentation

- Two demonstration projects that together cover the entire DeepAction pipeline

Table of contents

- Getting started

- Example projects

- Documentation

- Key folders & files

- References

- Release notes

- Author

- License

Getting started

Adding the toolbox to your MATLAB search path

All that is required to begin using DeepAction is to add the toolbox folder to your MATLAB search path. The command to do this is below:

addpath(genpath(toolbox_folder));

savepath;

where toolbox_folder is the path to the toolbox repository. The command savepath saves the current search path, so the toolbox doesn't need to be added again each time a new MATLAB instance is opened. Omit savepath if you don't want the change to persist across sessions.
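To confirm that the folder was actually added, the search path can be inspected directly. The following is a minimal sketch, not part of the toolbox: the value assigned to toolbox_folder is a placeholder that should be replaced with the real location of the repository, and the variable names path_entries and is_on_path are introduced here for illustration only.

```matlab
% Placeholder: replace with the actual location of the DeepAction repository.
toolbox_folder = fullfile(pwd, 'DeepAction');

if isfolder(toolbox_folder)
    % Add the folder and all of its subfolders to the search path.
    addpath(genpath(toolbox_folder));
else
    warning('Folder not found: %s', toolbox_folder);
end

% Split the search path into individual folders and look for the toolbox root.
path_entries = strsplit(path, pathsep);
is_on_path = any(strcmp(path_entries, toolbox_folder));
fprintf('Toolbox on search path: %d\n', is_on_path);
```

The check deliberately leaves out savepath, so running it does not change the saved search path.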

Example projects

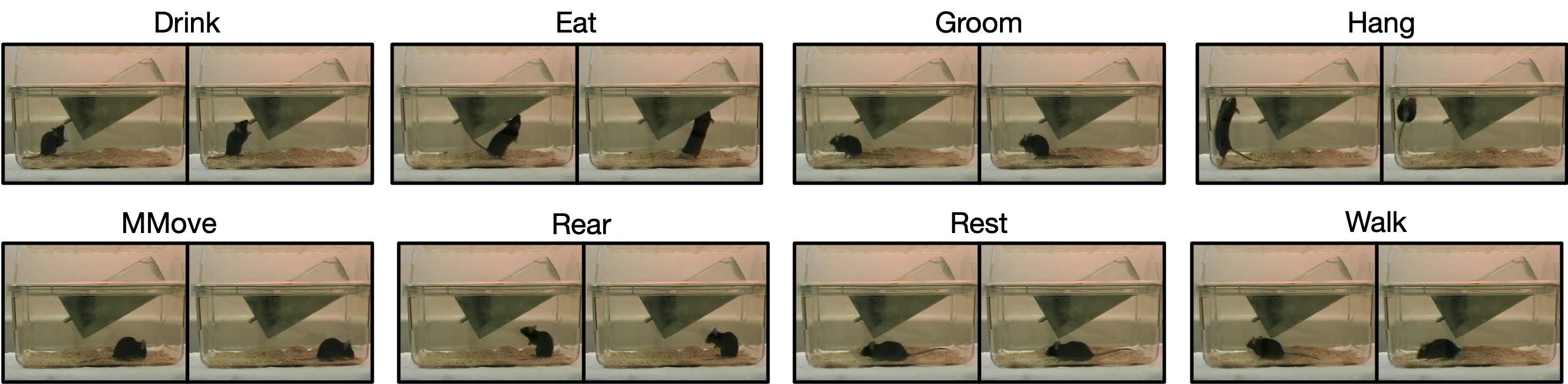

To demonstrate the toolbox, we run the workflow below using the home-cage dataset from Jhuang et al. (see references). These demonstration projects are designed to familiarize users with the toolbox while minimizing the time-consuming and computationally intensive parts of the workflow (i.e., generating temporal frames and spatiotemporal features). Scripts can be found in the examples folder.

To demonstrate different facets of the toolbox, we split the demonstration into two projects. In the first, we show how to create a project, extract features, and launch the annotator. The data for this project are a series of short clips (5 minutes each), selected to decrease the time required to generate frames and features. In the second project, we guide users through training and evaluating the classifier and confidence-based review, as well as launching the confidence-review annotator. For this project we provide pre-extracted features, as well as the corresponding annotations, for all the videos in the home-cage dataset.

Downloading the example datasets

The example data can be found via Google Drive link here. The contents of the example folder are as follows:

- The subfolder project_1_videos contains the demonstration videos that will be used in Project 1.

- The subfolder project_2 contains the annotations, spatial/temporal features, and dimensionality reduction model for the entire home-cage dataset, which is used in Project 2.

- The subfolder example_annotations contains the files used in the mini-demonstration for converting existing annotations into a DeepAction-importable format.

Project 1: Workflow steps #1-5

In this project we provide a small number of short video clips from the home-cage dataset and demonstrate steps 1-5 of the workflow. The file to run this is demo_project_1.mlx in the examples folder of this repository. In this script, users:

- Initialize a new project

- Import a set of 5 shortened videos from the home-cage dataset

- Extract the spatial and temporal frames from the project videos

- Use the spatial and temporal frames to create spatial and temporal features

- Create a dimensionality reduction model

- Launch the annotator to become familiar with the annotation process

Project 2: Workflow steps #6-11

In the second project, we provide annotations as well as the spatial and temporal features needed to train the classifier, and guide users through training and evaluating the classifier and running the confidence-based review. The file to run this project is demo_project_2.mlx in the examples folder. Here, users:

- Load spatiotemporal features using the provided dimensionality reduction model

- Split annotated clips into training, validation, and test sets

- Train and evaluate the classifier

- Create confidence scores for each clip

- Launch the annotator to explore the confidence-based review GUI

- Export annotations
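The idea behind confidence-based review can be illustrated with a short, generic sketch. This is not the toolbox's ConfidenceScorer: it assumes randomly generated stand-in classifier outputs, uses the maximum class probability as a simple confidence measure, and assumes an arbitrary clip length and review threshold. All variable names are hypothetical.

```matlab
% Stand-in classifier outputs: one row per frame, one column per behavior.
% (Assumption: random values normalized so each row sums to 1.)
rng(0);
scores = rand(200, 4);
scores = scores ./ sum(scores, 2);

clip_length = 50;                          % frames per clip (assumed)
frame_conf = max(scores, [], 2);           % max class probability per frame

% Assign each frame to a clip and average the frame confidences per clip.
clip_id = ceil((1:size(scores, 1))' / clip_length);
clip_conf = accumarray(clip_id, frame_conf, [], @mean);

% Order clips from least to most confident, so that the most ambiguous
% clips are reviewed first.
[~, review_order] = sort(clip_conf, 'ascend');

% Flag clips whose confidence falls below an assumed threshold.
threshold = 0.4;
to_review = find(clip_conf < threshold);
```

Sorting by confidence lets a reviewer stop at any point and still have corrected the clips most likely to contain errors.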

Mini-demonstration: Importing existing annotations into a DeepAction project

We also provide a demonstration of how to import annotations from a .csv file into a new DeepAction project. The code to run this demonstration can be found in the FormatAnnotations.mlx file in the examples folder.
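Existing annotations are often stored as one row per behavior bout rather than one label per frame. The sketch below shows one generic way to expand bout-level rows into per-frame labels before import. It is an illustration only: the column names (StartFrame, EndFrame, Behavior), the example values, and the output file name are all hypothetical, and the format DeepAction actually expects is the one shown in FormatAnnotations.mlx.

```matlab
% Hypothetical bout-level annotations, as they might be read from a .csv
% file with readtable. Column names and values are illustrative only.
bouts = table([1; 41; 91], [40; 90; 120], ["rest"; "groom"; "walk"], ...
    'VariableNames', {'StartFrame', 'EndFrame', 'Behavior'});

% One label per frame; the empty string marks frames without a label.
n_frames = max(bouts.EndFrame);
frame_labels = strings(n_frames, 1);
for i = 1:height(bouts)
    idx = bouts.StartFrame(i):bouts.EndFrame(i);
    frame_labels(idx) = bouts.Behavior(i);
end

% Collect the per-frame labels in a table and write them to a .csv file
% in the system temporary folder.
per_frame = table((1:n_frames)', frame_labels, ...
    'VariableNames', {'Frame', 'Behavior'});
writetable(per_frame, fullfile(tempdir, 'per_frame_annotations.csv'));
```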

Documentation

In the documentation folder, we provide markdown files containing descriptions for the configuration files, GUI, and DeepAction functions.

Key project folders & files

- ./project_folder/config.txt: configuration file (see here)

- ./project_folder/annotations: annotations for each video

- ./project_folder/videos: raw video data imported into the project

- ./project_folder/frames: spatial and temporal frames corresponding to the video data in videos

- ./project_folder/features: spatial and temporal video features

- ./project_folder/rica_model: dimensionality reduction models (only one model needs to be created for each stream/camera/dimensionality combination)
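A quick way to see which of these entries exist in a given project is to test each one in turn. The following is a minimal sketch rather than a toolbox function: the project_folder path is a placeholder, and some entries may only appear after the corresponding workflow step has been run.

```matlab
% Placeholder: replace with the path to an actual DeepAction project.
project_folder = fullfile(pwd, 'project_folder');

% Entries listed above: one configuration file and five subfolders.
expected = {'config.txt', 'annotations', 'videos', ...
            'frames', 'features', 'rica_model'};

% Record whether each entry exists as either a file or a folder.
found = false(size(expected));
for i = 1:numel(expected)
    entry = fullfile(project_folder, expected{i});
    found(i) = isfile(entry) || isfolder(entry);
end

% Summarize the result as a table with one row per entry.
status = table(expected', found', 'VariableNames', {'Entry', 'Found'});
disp(status);
```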

References

- Home-cage dataset - dataset used in demonstration projects. Also see: Jhuang, H., Garrote, E., Yu, X., Khilnani, V., Poggio, T., Steele, A. D., & Serre, T. (2010). Automated home-cage behavioural phenotyping of mice. Nature communications, 1(1), 1-10.

- Piotr's toolbox - used for reading/writing .seq files (a version of this release is included in the ./toolbox-master subfolder).

- Dual TVL1 Optical Flow - used to estimate TV-L1 optical flow and create temporal frames

- CRIM13 dataset - used in the preprint (but not the example projects)

- EZGif.com - used to create GIF files from video

Release notes

As this is the initial release, we expect that users may run into issues with the program. With this in mind, annotations are backed up each time the annotator is opened, so if data are lost through a mistake or bug in the annotator (or in other components of the workflow that access annotation files), prior annotations can be restored from file. Please raise any issues on the issues page of the GitHub repository, and/or contact the author in the case of major problems. In the near future, the "to-do" items are:

- Releasing a multiple-camera example project and improving multiple-camera usability.

- Improving method documentation! (The main methods are covered in functions.md, but full details and in-code documentation are still incomplete.)

- Improving the annotator video viewer to reduce lag.

- ... and quite a few other miscellaneous items

If you're interested in contributing, please reach out!

Author

All code, except that used in references, by Carl Harris (email: carlwharris1 at gmail; website; GitHub)

If you use this toolbox in your work, we ask that you cite the original paper:

@article{harris2023deepaction,

title={DeepAction: A MATLAB toolbox for automated classification of animal behavior in video},

author={Harris, Carl and Finn, Kelly R and Kieseler, Marie-Luise and Maechler, Marvin R and Tse, Peter U},

journal={Scientific Reports},

volume={13},

number={1},

pages={2688},

year={2023},

publisher={Nature Publishing Group UK London}

}

License

This project is licensed under the MIT License - see the LICENSE file for details

Cite as

Carl Harris (2024). DeepAction (https://github.com/carlwharris/DeepAction), GitHub. Retrieved .

Harris, Carl, et al. DeepAction: A MATLAB Toolbox for Automated Classification of Animal Behavior in Video. Cold Spring Harbor Laboratory, June 2022, doi:10.1101/2022.06.20.496909.
