Performance

Some of the most common reasons why GPU Coder™ generated code is not performing as expected are:

- CUDA® kernels are not created.

- Host-to-device and device-to-host memory transfers (cudaMemcpy) are throttling performance.

- Not enough parallelism or device issues.

These topics elaborate on the common causes of these symptoms and describe how to use the built-in screener to detect them. They also explain how to work around these issues and generate more efficient CUDA code.

Topics

- Code Generation Reports

Create and view reports generated during code generation.

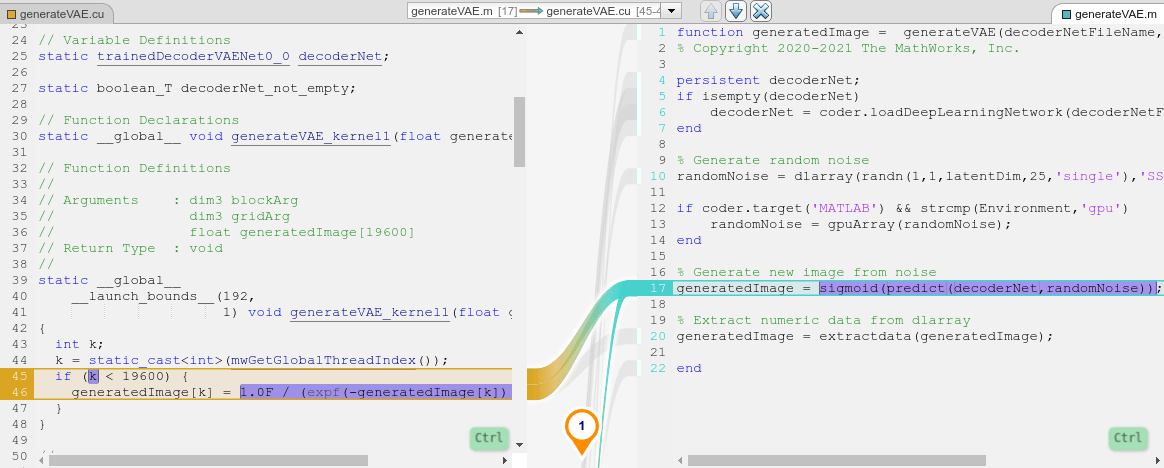

- Trace Between Generated CUDA Code and MATLAB Source Code

Highlight sections of MATLAB® code that run on the GPU.

- Generating a GPU Code Metrics Report for Code Generated from MATLAB Code

Create and explore a GPU static code metrics report.

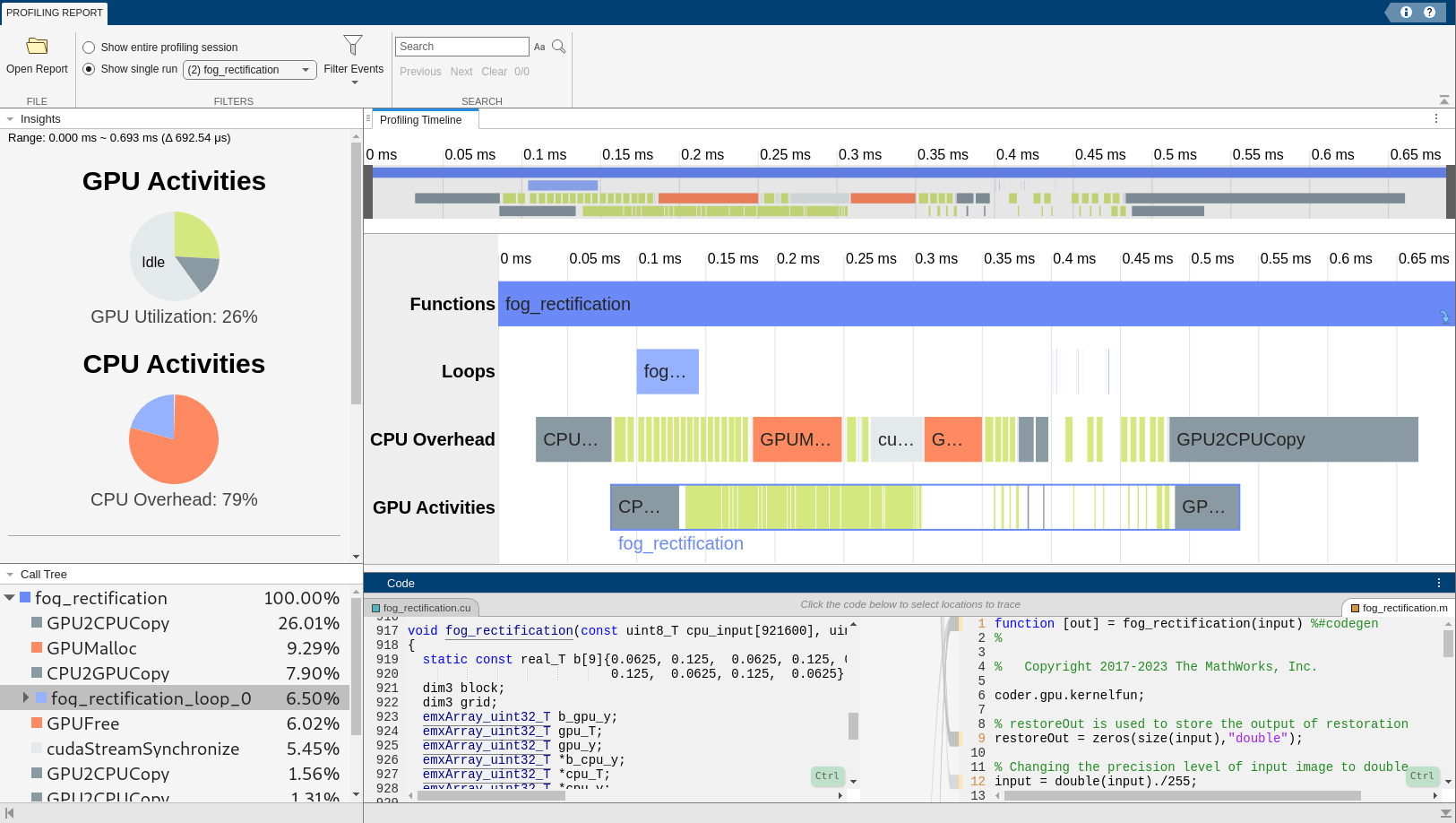

- GPU Performance Analyzer

Visualize code metrics and identify optimization and tuning opportunities in your code.

- Analyzing Network Performance Using the Deep Learning Dashboard

Investigate the performance of deep learning networks and layers in generated code using the Deep Learning Dashboard. (Since R2025a)

- Kernel Analysis

Recommendations for generating efficient CUDA kernels.

- Memory Bottleneck Analysis

Reduce memory bottleneck issues when using GPU Coder.

- Optimize Kernels That Contain Loops

Rewrite loops in MATLAB so that the generated CUDA kernels do not contain loops. (Since R2025a)

- Prevent Kernel Launches Inside Loops

Parallelize loops that launch kernels to execute them on the GPU. (Since R2025a)

- Minimize Memory Copy Events in Generated Code Loops

Rewrite loops to minimize the number of data transfers between the CPU and GPU in generated CUDA code. (Since R2025a)