Run Tests in Multiple Releases of MATLAB

If you have more than one release of MATLAB® installed, you can run tests in multiple releases. Starting with R2011b, you can also run tests in releases that do not have Simulink® Test™. Running tests in multiple releases enables you to use test functionality from later releases while running the tests in your preferred release of Simulink. If you have code that was generated in a previous release, you can verify that the model in the current release continues to work as expected. Also, in the current release you can create a test harness using the previously generated code, rather than having to regenerate it. Comparing test results across multiple releases can help you to better understand Simulink changes before upgrading to a new version of MATLAB and Simulink.

Although you can run test cases on models in previous releases, the release you run the test in must support the features of the test. For example, if your test involves test harnesses or test sequences, the release must support those features for the test to run.

Before you can create tests that use additional releases, add the releases to your list of available releases using Test Manager preferences. See Add Releases Using Test Manager Preferences.

Considerations for Testing in Multiple Releases

Testing Models in Previous or Later Releases

Your model or test harness must be compatible with the MATLAB version running your test.

If you have a model created in a newer version of MATLAB, to test the model in a previous version of MATLAB, export the model to a previous version and simulate the exported model with the previous MATLAB version. For more information, see the information on exporting a model in Save Models.

To test a model in a more recent version of MATLAB, consider using the Upgrade Advisor to upgrade your model for the more recent release. For more information, see Upgrade Models Using Upgrade Advisor.

Test Case Compatibility with Previous Releases

When you collect coverage in multiple-release tests, you can run test cases in releases up to three years (six releases) earlier than the current release. Tests that contain logical or temporal assessments are supported in R2016b and later releases.
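
As a rough illustration of the six-release window, the following MATLAB sketch lists the six releases that precede a given release. It assumes the twice-yearly RYYYYa/RYYYYb naming scheme, and the variable names are hypothetical. Whether the oldest release listed falls inside the supported window is not stated here, so treat the result as indicative only.

```matlab
current = 'R2024b';   % release you run Test Manager from (example value)
count   = 6;          % six releases, roughly three years

% Split the release name into its year and half-year suffix.
tok = regexp(current, '^R(\d{4})([ab])$', 'tokens', 'once');
assert(~isempty(tok), 'Expected a release name such as R2024b.');

% Map each release to an integer index: two releases per year.
idx = 2*str2double(tok{1}) + double(tok{2} == 'b');

% Step backward one release at a time and rebuild each name.
suffixes = 'ab';
names = cell(1, count);
for k = 1:count
    prev = idx - k;
    names{k} = sprintf('R%d%s', floor(prev/2), suffixes(mod(prev, 2) + 1));
end

disp(strjoin(names, ', '))
```

For R2024b, the list runs from R2024a back to R2021b.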

Multiple-release testing for test cases with fault settings is supported in R2024b and later. For a test case with fault settings, the releases that you select for simulation must be R2023b or later.

Test Case Limitations with Multiple Release Testing

Certain features are not supported for multiple-release testing:

Parallel test execution

MATLAB Unit Test framework test cases

Real-time tests

Models with observers

Input data defined in an external Excel® document

Custom figures from test case callbacks

Variant configurations

Add Releases Using Test Manager Preferences

Before you can create tests for multiple releases, use Test Manager preferences to include the MATLAB release you want to test in. You can also delete a release that you added to the available releases list. However, you cannot delete the release from which you are running Test Manager.

In the Test Manager, click Preferences.

In the Preferences dialog box, click Release. The Release pane lists the release you are running Test Manager from.

In the Release pane, click Add/Remove releases to open the Release Manager.

In the Release Manager, click Add.

Browse to the location of the MATLAB release you want to add and click OK.

To change the release name that will appear in the Test Manager, edit the Name field.

Close the Release Manager. The Preferences dialog box shows the selected releases. Deselect releases you do not want to make available for running tests.
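
The same preferences can also be reached programmatically through sltest.testmanager.getpref and sltest.testmanager.setpref. The following is a minimal sketch, not a definitive recipe: the release name and installation path are placeholders, and the structure fields (Name, MATLABRoot, Selected) and the 'ReleaseList' preference reflect my understanding of the setpref interface, so confirm them against the function reference pages for your release. It requires Simulink Test.

```matlab
% Query the release preferences currently known to the Test Manager.
currentPrefs = sltest.testmanager.getpref('MATLABReleases');
disp(currentPrefs)

% Describe the release to make available. The name and path below are
% placeholders; point MATLABRoot at an actual installation folder.
extraRelease = struct( ...
    'Name',       'R2023b', ...
    'MATLABRoot', 'C:\Program Files\MATLAB\R2023b', ...
    'Selected',   true);

% Assumption: 'ReleaseList' sets the list of additional releases, so any
% releases you added earlier may need to be included here as well.
sltest.testmanager.setpref('MATLABReleases', 'ReleaseList', {extraRelease});
```

A scripted setup like this can be useful when several machines need the same list of releases.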

Run Baseline Tests in Multiple Releases

When you run a baseline test with the Test Manager set up for multiple releases, you can:

Create the baseline in the release you want to see the results in, for example, to try different parameters and apply tolerances.

Create the baseline in one release and run it in another release. Using this approach you can, for example, know whether a newer release produces the same simulation outputs as an earlier release.

Create the baseline.

Make sure that the release has been added to your Test Manager preferences.

Create a test file, if necessary, and add a baseline test case to it.

Select the test case.

Under System Under Test, enter the name of the model you want to test.

Set up the rest of the test.

Capture the baseline. Under Baseline Criteria, click Capture. Specify the format and file in which to save the baseline and select the release in which to capture the baseline. Then, click Capture to simulate the model.

For more information about capturing baselines, see Capture Baseline Criteria.

After you create the baseline, run the test in the selected releases. Each release you selected generates a set of results.

In the test case, expand Simulation Settings and Release Overrides and, in the Select releases for simulation drop-down menu, select the releases that you want to compare against your baseline.

Specify the test options.

From the toolstrip, click Run.

For each release that you select when you run the test case, the pass-fail results appear in the Results and Artifacts pane. For results from a release other than the one you are running Test Manager from, the release number appears in the name.

Run Equivalence Tests in Multiple Releases

When you run an equivalence test, you compare two simulations. Each simulation runs in a single release, and the two releases can be the same or different. Examples of equivalence tests include comparing models run in different simulation modes, such as normal and software-in-the-loop (SIL), or comparing different tolerance settings.

Make sure that the releases have been added to your Test Manager preferences.

Create a test file, if necessary, and add an equivalence test case to it.

Select the test case.

Under Simulation 1, System Under Test, enter the name of the model you want to test.

Expand Simulation Settings and Release Overrides and, in the Select releases for simulation drop-down menu, select the release for Simulation 1 of the equivalence test. For an equivalence test, you can select only one release for each simulation.

Set up the rest of the test.

Repeat the previous three steps for Simulation 2: enter the system under test, select the release, and set up the rest of the test.

In the toolstrip, click Run.

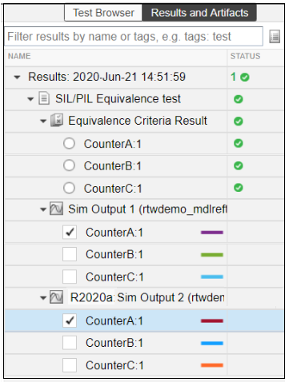

The test runs each simulation in the release you selected and compares the results for equivalence. For each release that you selected when you ran the test case, the pass-fail results appear in the Results and Artifacts pane. For results from a release other than the one you are running Test Manager from, the release number appears in the name.

Run Simulation Tests in Multiple Releases

Running a simulation test simulates the model in each release you select using the criteria you specify in the test case.

Make sure that the releases have been added to your Test Manager preferences.

Create a test file, if necessary, and add a simulation test case template to it.

Select the test case.

Under System Under Test, enter the model you want to test.

Expand Simulation Settings and Release Overrides and, in the Select releases for simulation drop-down menu, select the releases for the simulation.

Under Simulation Outputs, select the signals to log.

In the toolstrip, click Run.

The test runs, simulating for each release you selected. For each release, the pass-fail results appear in the Results and Artifacts pane. For results from a release other than the one you are running Test Manager from, the release number appears in the name.
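
The steps above can also be sketched programmatically. This is a hedged outline rather than a verified workflow: the file name, test case name, model name, and release names are placeholders, and the 'Release' property passed to setProperty is an assumption about the test case API that you should check in the setProperty reference page. The releases must already be in your Test Manager preferences.

```matlab
% Create a test file. The Test Manager adds a default test suite to it.
tf = sltest.testmanager.TestFile('multiReleaseTests.mldatx');
ts = getTestSuites(tf);

% Add a simulation test case to the first suite.
tc = createTestCase(ts(1), 'simulation', 'Simulate in several releases');

% System under test: 'myModel' is a placeholder model name.
setProperty(tc, 'Model', 'myModel');

% Assumption: the releases for simulation are set through a 'Release'
% property, using the names shown in the Test Manager preferences.
setProperty(tc, 'Release', {'R2023b', 'R2024a'});

% Run the test case and summarize the outcome.
resultSet = run(tc);
fprintf('%d of %d results passed, %d failed\n', ...
    resultSet.NumPassed, resultSet.NumTotal, resultSet.NumFailed);
```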

Assess Temporal Logic in Multiple Releases

You can run tests that contain logical and temporal assessments in multiple releases to test signal logic for models created in an earlier release. You can also compare assessment results across releases when you run the tests in multiple releases. For more information, see Assess Temporal Logic by Using Temporal Assessments.

You can run these test case types with logical and temporal assessments:

Baseline tests

Equivalence tests

Simulation tests

Run Tests with Logical and Temporal Assessments

To run tests with logical and temporal assessments in multiple releases:

Start MATLAB R2021b or later.

Open the Test Manager. For more information, see Open the Simulink Test Manager.

In the Test Manager, add the releases to your Test Manager preferences. For more information, see Add Releases Using Test Manager Preferences.

Create a new test file with a baseline, equivalence, or simulation test case, or open an existing one.

In the Test Manager, specify your test case properties, including the system under test and other properties that you want to apply. For more information, see Specify Test Properties in the Test Manager.

Add a logical or temporal assessment to your test case. For more information, see Assess Temporal Logic by Using Temporal Assessments and Logical and Temporal Assessment Syntax.

Select the releases to run the test in. In the Test Manager, select your test case. In System Under Test, under Simulation Settings and Release Overrides, next to Select releases for simulation, select the releases to run the test case in from the list.

If you are using a baseline or simulation test case, you can run the test in multiple releases in a single run by selecting multiple releases from the list. If you are using an equivalence test case, you can select one release under Simulation 1 and another release under Simulation 2. For more information, see Run Baseline Tests in Multiple Releases, Run Equivalence Tests in Multiple Releases, and Run Simulation Tests in Multiple Releases.

Run the test. In the Test Manager, click Run.

Evaluate Assessment Results

The Results and Artifacts pane displays the test results for each release you selected. The test release appears in the name of each test result from a release other than the version you ran Test Manager from.

You can evaluate the assessment results independently from other pass-fail criteria. For example, a baseline test case might fail because of a failing baseline criterion while a logical or temporal assessment in the same test case passes.

You can also examine detailed assessment signal behavior. For more information, see View Assessment Results.

Collect Coverage in Multiple-Release Tests

To add coverage collection for multiple releases, you must have a Simulink Coverage™ license. Set up your test as described in Run Baseline Tests in Multiple Releases, Run Equivalence Tests in Multiple Releases, or Run Simulation Tests in Multiple Releases. You can use external test harnesses to increase coverage for multiple-release tests. Before you capture the baseline or run the equivalence or simulation test, enable coverage collection.

Click the test file that contains your test case. To collect coverage for test suites or test cases, you must enable coverage at the test file level.

In the Coverage Settings section, select Record coverage for system under test, Record coverage for referenced models, or both.

Under Coverage Metrics to collect, select the types of coverage that you want to collect.

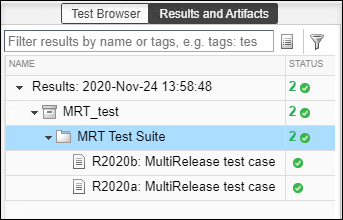
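
The coverage settings above can also be applied from the command line. The sketch below assumes an existing test file named multiReleaseTests.mldatx (a placeholder) and uses the coverage settings object of the test file. The metric string 'dcm' is meant to request decision, condition, and MCDC coverage; check the CoverageSettings reference page for the codes available in your release. It requires Simulink Test and a Simulink Coverage license.

```matlab
% Load an existing test file. The file name is a placeholder.
tf = sltest.testmanager.load('multiReleaseTests.mldatx');

% Coverage is enabled at the test file level.
cov = getCoverageSettings(tf);

cov.RecordCoverage = true;    % record coverage for the system under test
cov.MdlRefCoverage = true;    % record coverage for referenced models
cov.MetricSettings = 'dcm';   % decision, condition, and MCDC metrics

% Save the updated settings with the test file.
saveToFile(tf);
```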

After you run the test, the Results and Artifacts pane shows the pass-fail results for each release in the test suite.

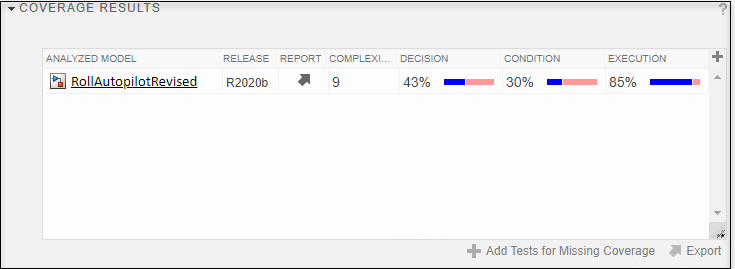

To view the coverage results for a release, select its test case and expand the Coverage Results section. The table lists the model, release, and the coverage percentages for the metrics you selected.

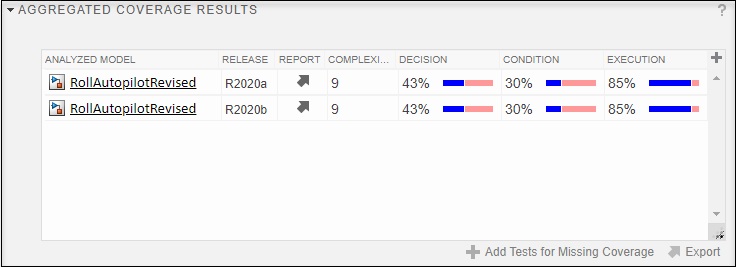

To view aggregated coverage results for the releases in your test, select the test suite that contains the releases and expand the Aggregated Coverage Results section.

To use the current release to add tests for missing coverage to an older release, click the row and click Add Tests for Missing Coverage. You can also use coverage filters, generate reports, merge results, import and export results, and scope coverage to linked requirements. For more information, see Collect Coverage in Tests and Increase Test Coverage for a Model.

See Also

sltest.testmanager.getpref | sltest.testmanager.setpref