Specify Training Options in Reinforcement Learning Designer

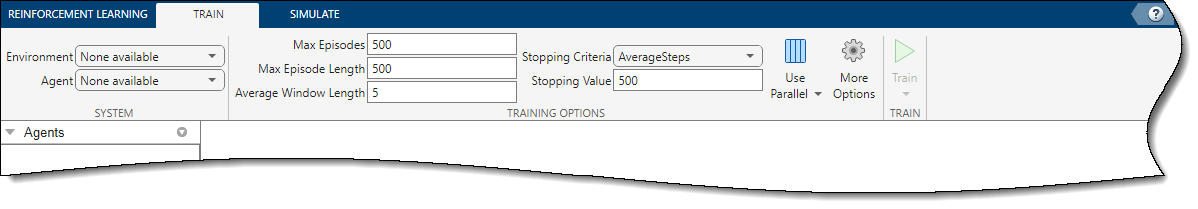

To configure the training of an agent in the Reinforcement Learning Designer app, specify training options on the Train tab.

Specify Basic Options

On the Train tab, you can specify these basic training options.

| Option | Description |

|---|---|

| Max Episodes | Maximum number of episodes to train the agent, specified as a positive integer. |

| Max Episode Length | Maximum number of steps to run per episode, specified as a positive integer. |

| Stopping Criteria | Training termination condition, specified as one of the following values:

|

| Stopping Value | Critical value of the training termination condition in Stopping Criteria, specified as a scalar. |

| Average Window Length | Window length for averaging the scores, rewards, and number of steps for the agent when either Stopping Criteria or Save agent criteria specify an averaging condition. |

Specify Parallel Training Options

To enable the use of multiple processes for training, on the Train

tab, click the Use Parallel button ![]() . Training agents using parallel computing requires

Parallel Computing Toolbox™ software. For more information, see Train Agents Using Parallel Computing and GPUs.

. Training agents using parallel computing requires

Parallel Computing Toolbox™ software. For more information, see Train Agents Using Parallel Computing and GPUs.

To specify options for parallel training, select Use Parallel > Parallel training options.

In the Parallel Training Options dialog box, you can specify these training options.

| Option | Description |

|---|---|

| Enable parallel training | Enables using multiple processes to perform environment simulations

during training. Select this option by clicking the Use

Parallel button |

| Parallel computing mode | Parallel computing mode, specified as one of these values:

|

| Transfer workspace variables to workers | Select this option to send model and workspace variables to parallel workers. When you select this option, the parallel pool client sends variables used in models and defined in the MATLAB® workspace to the workers. |

| Random seed for workers | Randomizer initialization for workers, specified as one of these values:

|

| Files to attach to parallel pool | Additional files to attach to the parallel pool. Specify names of files in the current working directory, with one name on each line. |

| Worker setup function | Function to run before training starts, specified as a handle to a function having no input arguments. This function runs once for each worker before training begins. Write this function to perform any processing that you need prior to training. |

| Worker cleanup function | Function to run after training ends, specified as a handle to a function having no input arguments. You can write this function to clean up the workspace or perform other processing after training terminates. |

This figure shows an example parallel training configuration for these files and functions:

Data file attached to the parallel pool —

workerData.matWorker setup function —

mySetup.mWorker cleanup function —

myCleanup.m

For more information on parallel training options, see the

UseParallel and ParallelizationOptions

properties in rlTrainingOptions.

For more information on parallel training, see Train Agents Using Parallel Computing and GPUs.

Specify Agent Evaluation Options

To enable agent evaluation at regular intervals during training, on the

Train tab, click ![]() .

.

To specify agent evaluation options, select Evaluate Agent > Agent evaluation options.

In the Agent Evaluation Options dialog box, you can specify these training options.

| Option | Description |

|---|---|

| Enable agent evaluation | Enables periodic agent evaluation during training. You can select this

option by clicking the Evaluate Agent button |

| Number of evaluation episodes | Number of consecutive evaluation episodes, specified as a positive integer. After running the number of consecutive training episodes specified in Evaluation frequency, the software runs the number of evaluation episodes specified in this field, consecutively. For

example, if you specify The default is value |

| Evaluation frequency | Evaluation period, specified as a positive integer. This value is the

number of consecutive training episodes after which the number of consecutive

evaluation episodes specified in the Number of evaluation

episodes field run. For example, if you specify

|

| Max evaluation episode length | Maximum number of steps to run for an evaluation episode, specified as a positive integer. This value is the maximum number of steps to run for an evaluation episode if other termination conditions are not met. To accurately assess the agent stability and performance, it is often useful to specify a larger number of steps for an evaluation episode than for a training episode. If you leave this field empty (default), the maximum number of steps per episode specified in the Max Episode Length field is used. |

| Evaluation random seeds | Random seeds used for evaluation episodes, specified as follows:

The current random seed used for training is stored before the first episode of an evaluation sequence and reset as the current seed after the evaluation sequence. This behavior ensures that the training results with evaluation are the same as the results without evaluation. |

| Evaluation statistic type | Type of evaluation statistic for each group of consecutive evaluation episodes, specified as one of these strings:

This value is returned, in the training result object, as the

element of the |

| Use exploration policy | Option to use exploration policy during evaluation episodes. When this option is disabled (default) the agent uses its base greedy policy when selecting actions during an evaluation episode. When you enable this option, the agent uses its base exploration policy when selecting actions during an evaluation episode. |

For more information on evaluation options, see rlEvaluator.

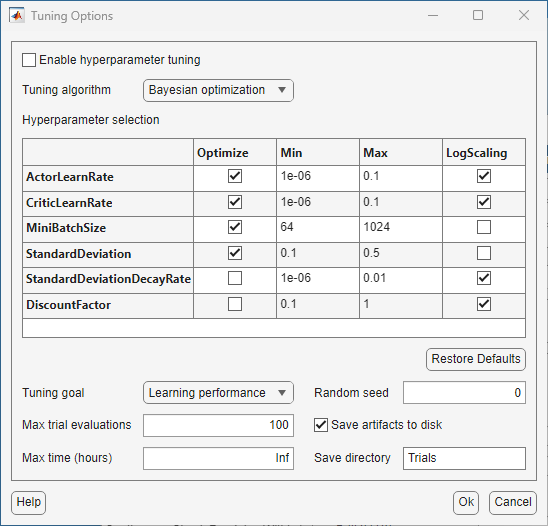

Specify Hyperparameter Tuning Options

Hyperparameter tuning consists in a series of training experiments, also referred to as

trials, designed to select the best combination of hyperparameters. To enable a

hyperparameter tuning session, on the Train tab, click on the

Tune Hyperparameters

![]() button.

button.

To specify hyperparameter tuning options, select Tune Hyperparameters > Hyperparameter tuning options.

In the Tuning Options dialog box, you can specify these training options:

| Option | Description |

|---|---|

| Enable hyperparameter tuning | Enables hyperparameter tuning. This option gets selected also when you

click on the Tune Hyperparameters

|

| Tuning algorithm | Tuning algorithm, specified as one of these values:

|

| Hyperparameter selection | The Hyperparameter selection section allows you to select up to six parameters to be tuned, depending on the agent type. You can tune the learning rates used by the critic and, if available, the actor, mini-batch size, discount factor. You can also tune exploration parameters such as the action noise standard deviation and its decay rate or the epsilon decay rate and its decay rate. You can enable the tuning of a hyperparameter by selecting the corresponding Optimize check box. You can then adjust the hyperparameter search range using the corresponding Min and Max fields. Check Log Scaling to space the grid points logarithmically for the search dimension corresponding to the hyperparameter. For more information about the hyperparameter that you can tune in the app and their default range, see Hyperparameter Selection. |

| Restore Defaults | Click this button to reset the hyperparameter configuration. |

| Tuning goal | Tuning goal, specified as one of these values:

|

| Random seed | Initial random seeds used for each hyperparameter tuning training run, specified as a nonnegative integer. |

| Max trial evaluations | Maximum number of trials (training experiments). When the number of completed trials reaches this value, the software selects the combination of hyperparameters that yields the best score. |

| Max time (hours) | Maximum time in hours to perform tuning. When the time elapsed from the beginning of the hyperparameter tuning session reaches this value, the software does not perform additional training experiments. The software then selects the combination of hyperparameters that yield the best score. |

| Save artifacts to disk | Enable or disable saving artifacts, (that is, the tuned agent and training result) to disk. |

| Save directory | Directory in which the software saves the artifacts. |

Hyperparameter Selection

The hyperparameter selection section of the hyperparameter Tuning Options dialog box allows you to tune these hyperparameters.

| Hyperparameter | Description | Optimize (default value) | Min (default value) | Max (default value) | LogScaling (default value) |

|---|---|---|---|---|---|

| ActorLearnRate | Actor learning rate, available for all agents except DQN and TRPO. For more information, see the optimizer options object of the actor used by the agent. | selected | 1e-6 | 0.1 | selected |

| CriticLearnRate | Critic learning rate, available for all agents. For more information, see the optimizer options object of the critic used by the agent. | selected | 1e-6 | 0.1 | selected |

| ExperienceHorizon | Number of steps used to calculate the advantage value, available for PPO and

TRPO agents. For more information, see ExperienceHorizon. | selected | 50 | 1000 | not selected |

| MiniBatchSize | Size of the mini-batch, available for all agents. For more information, see the agent options object. | selected | 64 | 1024 | not selected |

| EntropyLossWeight | Weight of the entropy loss, available for PPO and TRPO agents. For more

information, see EntropyLossWeight. | selected | 0.001 | 0.1 | selected |

| DiscountFactor | Discount factor, available for all agents. For more information, see the agent options. | not selected | 0.1 | 1 | selected |

| Epsilon | Value of the epsilon variable, for epsilon-greedy exploration, available for

DQN agents. For more information, see EpsilonGreedyExploration. | selected | 0.5 | 1 | not selected |

| EpsilonDecay | Decay rate of the epsilon variable, for epsilon-greedy exploration, available

for DQN agents. For more information, see EpsilonGreedyExploration. | not selected | 1e-6 | 0.01 | selected |

| MeanAttractionConstant | Value of the standard deviation for the agent noise model, available for DDPG

agents. For more information, see NoiseOptions. | not selected | 0.01 | 1 | selected |

| Standard Deviation | Value of the standard deviation for the agent noise model, available for DDPG

and TD3 agents. For more information, see NoiseOptions. | selected | 0.1 | 0.5 | not selected |

| StandardDeviationDecayRate | Decay rate of the standard deviation for the agent noise model, available for

DDPG and TD3 agents. For more information, see NoiseOptions. | not selected | 1e-6 | 0.01 | selected |

Note

You can tune a set of hyperparameters that is different from the set allowed in the Hyperparameter tuning options dialog box using the MATLAB code generated by the app. To do so, generate code by selecting Train > Generate MATLAB function for hyperparameter tuning. Then, customize the generated code to include the hyperparameters to tune.

For an example on how to tune hyperparameters in Reinforcement Learning Designer, see Tune Hyperparameters Using Reinforcement Learning Designer. For an example on how to perform parameter sweeping using the Experiment Manager app, see Train Agent or Tune Environment Parameters Using Parameter Sweeping. For an general example on how to tune a custom set of hyperparameters programmatically, using Bayesian optimization, see Tune Hyperparameters Using Bayesian Optimization.

Specify Additional Options

To specify additional training options, on the Train tab, click More Options.

In the More Training Options dialog box, you can specify these options.

| Option | Description |

|---|---|

| Save agent criteria | Condition for saving agents during training, specified as one of these values:

|

| Save agent value | Critical value of the save agent condition in Save agent

criteria, specified as a scalar or "none". |

| Save directory | Folder for saved agents. If you specify a folder name and that folder does not exist, the app creates the folder in the current working directory. To interactively select a folder, click Browse. |

| Show verbose output | Select this option to display the training progress at the command line. |

| Stop on error | Select this option to stop training when an error occurs during an episode. |

For more information training options, see rlTrainingOptions.

See Also

Apps

Functions

Objects

Topics

- Design and Train Agent Using Reinforcement Learning Designer

- Specify Simulation Options in Reinforcement Learning Designer

- Create Agents Using Reinforcement Learning Designer

- Reinforcement Learning Agents

- Create Policies and Value Functions

- Train Reinforcement Learning Agents

- Train Agents Using Parallel Computing and GPUs