updateMetricsAndFit

Update performance metrics in kernel incremental learning model given new data and train model

Since R2022a

Description

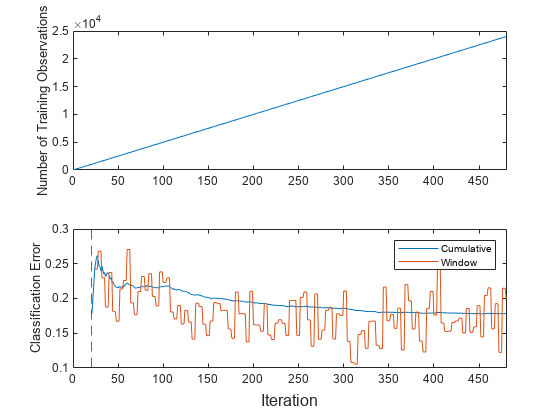

Given streaming data, updateMetricsAndFit first evaluates the performance of a configured incremental learning model for kernel regression (incrementalRegressionKernel object) or binary kernel classification (incrementalClassificationKernel object) by calling updateMetrics on incoming data. Then updateMetricsAndFit fits the model to that data by calling fit. In other words, updateMetricsAndFit performs prequential evaluation because it treats each incoming chunk of data as a test set, and tracks performance metrics measured cumulatively and over a specified window [1].
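As a rough illustration of the prequential (test-then-train) loop described above, the following sketch streams synthetic data through a default kernel regression model in fixed-size chunks. The data, the coefficient vector, and the chunk size numObsPerChunk are assumptions made up for this example and do not come from this page.

```matlab
% Synthetic regression data (illustrative assumption, not from the documentation)
rng(1)                                   % for reproducibility
n = 5000;
X = randn(n,3);
Y = X*[1; -2; 0.5] + 0.1*randn(n,1);

% Default incremental kernel regression model
Mdl = incrementalRegressionKernel;

numObsPerChunk = 50;                     % hypothetical chunk size
nchunk = floor(n/numObsPerChunk);

for j = 1:nchunk
    idx = (numObsPerChunk*(j-1) + 1):(numObsPerChunk*j);
    % Update the metrics on the chunk first, then train on the same chunk
    Mdl = updateMetricsAndFit(Mdl,X(idx,:),Y(idx));
end

% Metrics table with cumulative and window values
disp(Mdl.Metrics)
```

Because each chunk is scored before the model trains on it, the tracked metrics describe performance on data the model has not yet seen.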

updateMetricsAndFit provides a simple way to update model performance metrics and train the model on each chunk of data. Alternatively, you can perform the operations separately by calling updateMetrics and then fit, which allows for more flexibility (for example, you can decide whether you need to train the model based on its performance on a chunk of data).
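The more flexible workflow might look like the sketch below, which calls updateMetrics and fit separately and trains only when the window metric is unavailable or exceeds a threshold. The synthetic data, numObsPerChunk, and lossThreshold are hypothetical values chosen for illustration, and reading the first row of the Metrics table assumes that the metric of interest is listed first.

```matlab
% Synthetic regression data (illustrative assumption, not from the documentation)
rng(1)                                   % for reproducibility
n = 5000;
X = randn(n,3);
Y = X*[1; -2; 0.5] + 0.1*randn(n,1);

Mdl = incrementalRegressionKernel;

numObsPerChunk = 50;                     % hypothetical chunk size
lossThreshold = 0.05;                    % hypothetical retraining threshold
nchunk = floor(n/numObsPerChunk);

for j = 1:nchunk
    idx = (numObsPerChunk*(j-1) + 1):(numObsPerChunk*j);
    Xc = X(idx,:);
    Yc = Y(idx);

    % Step 1: update the performance metrics on the incoming chunk
    Mdl = updateMetrics(Mdl,Xc,Yc);

    % Step 2: read the window value of the first metric in the table
    windowLoss = Mdl.Metrics.Window(1);

    % Step 3: train while no window value is available yet (NaN),
    % or when the window loss exceeds the threshold
    if isnan(windowLoss) || windowLoss > lossThreshold
        Mdl = fit(Mdl,Xc,Yc);
    end
end
```

The condition in step 3 is only one possible policy; any rule based on Mdl.Metrics or Mdl.IsWarm could be substituted.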

Mdl = updateMetricsAndFit(Mdl,X,Y) returns an incremental learning model Mdl, which is the input incremental learning model Mdl with the following modifications:

- updateMetricsAndFit measures the model performance on the incoming predictor and response data, X and Y respectively. When the input model is warm (Mdl.IsWarm is true), updateMetricsAndFit overwrites previously computed metrics, stored in the Metrics property, with the new values. Otherwise, updateMetricsAndFit stores NaN values in Metrics instead.

- updateMetricsAndFit fits the modified model to the incoming data.

The input and output models have the same data type.
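A minimal way to inspect these modifications after a single call is sketched below. The small synthetic chunk is an assumption for illustration, and the variable names MdlIn, MdlOut, and sameType are hypothetical.

```matlab
% One small synthetic chunk (illustrative assumption)
rng(1)                                   % for reproducibility
X = randn(50,3);
Y = X*[1; -2; 0.5] + 0.1*randn(50,1);

MdlIn = incrementalRegressionKernel;
MdlOut = updateMetricsAndFit(MdlIn,X,Y);

% The input and output models have the same data type
sameType = isequal(class(MdlIn),class(MdlOut));

% Metrics contains NaN values until the model is warm
if MdlOut.IsWarm
    disp(MdlOut.Metrics)
else
    disp("Model is not warm yet; Metrics contains NaN values.")
end
```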

Examples

Input Arguments

Output Arguments

Algorithms

References

Version History

Introduced in R2022a

See Also

Objects

Functions

Topics

- Incremental Learning Overview

- Configure Incremental Learning Model

- Implement Incremental Learning for Classification Using Succinct Workflow

- Initialize Incremental Learning Model from Logistic Regression Model Trained in Classification Learner

- Initialize Incremental Learning Model from SVM Regression Model Trained in Regression Learner