Define Custom Deep Learning Output Layers

Tip

Custom output layers are not recommended. Instead, use the trainnet function and specify a custom loss function. To specify a custom backward function for the loss function, use a deep.DifferentiableFunction object. For more information, see Define Custom Deep Learning Operations.

This topic explains how to define custom deep learning output layers for your tasks when you use the trainNetwork function. For a list of built-in layers in Deep Learning Toolbox™, see List of Deep Learning Layers.

To learn how to define custom intermediate layers, see Define Custom Deep Learning Layers.

If Deep Learning Toolbox does not provide the output layer that you require for your task, then you can define your own custom layer using this topic as a guide. After defining the custom layer, you can check that the layer is valid, that it is GPU compatible, and that it outputs correctly defined gradients.

Output Layer Architecture

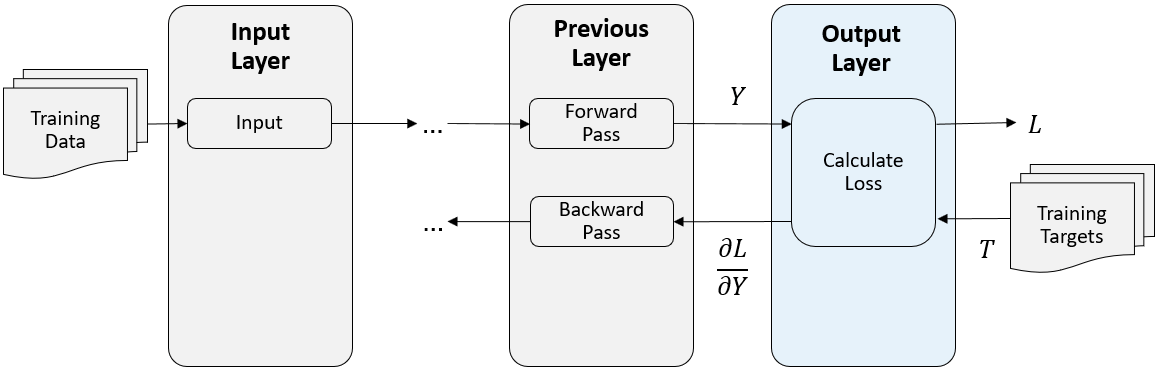

At the end of a forward pass at training time, an output layer takes the predictions (network outputs) Y of the previous layer and calculates the loss L between these predictions and the training targets. The output layer computes the derivatives of the loss L with respect to the predictions Y and outputs (backward propagates) results to the previous layer.

The following figure describes the flow of data through a convolutional neural network and an output layer.
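As a worked illustration of the forward and backward steps, consider a sum-of-squares loss averaged over the mini-batch. Here Y and T hold K entries per observation and there are N observations. This loss is only an example choice; an output layer computes whatever loss you define.

```latex
L = \frac{1}{N}\sum_{n=1}^{N}\sum_{i=1}^{K}\left(Y_{in}-T_{in}\right)^{2},
\qquad
\frac{\partial L}{\partial Y_{in}} = \frac{2}{N}\left(Y_{in}-T_{in}\right)
```

The forward pass reduces Y to the scalar L. The backward pass returns one derivative per entry of Y, so the derivative array has the same size as Y.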

Output Layer Templates

To define a custom output layer, use one of these class definition templates. The templates give the structure of an output layer class definition. They outline:

- The optional properties blocks for the layer properties. For more information, see Output Layer Properties.
- The layer constructor function.
- The forwardLoss function. For more information, see Forward Loss Function.
- The optional backwardLoss function. For more information, see Backward Loss Function.

Classification Output Layer Template

This template outlines the structure of a classification output layer with a loss function.

```matlab
classdef myClassificationLayer < nnet.layer.ClassificationLayer % ...
        % & nnet.layer.Acceleratable % (Optional)

    properties
        % (Optional) Layer properties.

        % Layer properties go here.
    end

    methods
        function layer = myClassificationLayer()
            % (Optional) Create a myClassificationLayer.

            % Layer constructor function goes here.
        end

        function loss = forwardLoss(layer,Y,T)
            % Return the loss between the predictions Y and the training
            % targets T.
            %
            % Inputs:
            %         layer - Output layer
            %         Y     - Predictions made by network
            %         T     - Training targets
            %
            % Output:
            %         loss  - Loss between Y and T

            % Layer forward loss function goes here.
        end

        function dLdY = backwardLoss(layer,Y,T)
            % (Optional) Backward propagate the derivative of the loss
            % function.
            %
            % Inputs:
            %         layer - Output layer
            %         Y     - Predictions made by network
            %         T     - Training targets
            %
            % Output:
            %         dLdY  - Derivative of the loss with respect to the
            %                 predictions Y

            % Layer backward loss function goes here.
        end
    end
end
```

Regression Output Layer Template

This template outlines the structure of a regression output layer with a loss function.

```matlab
classdef myRegressionLayer < nnet.layer.RegressionLayer % ...
        % & nnet.layer.Acceleratable % (Optional)

    properties
        % (Optional) Layer properties.

        % Layer properties go here.
    end

    methods
        function layer = myRegressionLayer()
            % (Optional) Create a myRegressionLayer.

            % Layer constructor function goes here.
        end

        function loss = forwardLoss(layer,Y,T)
            % Return the loss between the predictions Y and the training
            % targets T.
            %
            % Inputs:
            %         layer - Output layer
            %         Y     - Predictions made by network
            %         T     - Training targets
            %
            % Output:
            %         loss  - Loss between Y and T

            % Layer forward loss function goes here.
        end

        function dLdY = backwardLoss(layer,Y,T)
            % (Optional) Backward propagate the derivative of the loss
            % function.
            %
            % Inputs:
            %         layer - Output layer
            %         Y     - Predictions made by network
            %         T     - Training targets
            %
            % Output:
            %         dLdY  - Derivative of the loss with respect to the
            %                 predictions Y

            % Layer backward loss function goes here.
        end
    end
end
```

Custom Layer Acceleration

If you do not specify a backward function when you define a custom layer, then the software automatically determines the gradients using automatic differentiation.

When you train a network with a custom layer without a backward function, the software traces each input dlarray object of the custom layer forward function to determine the computation graph used for automatic differentiation. This tracing process can take some time and can end up recomputing the same trace. By optimizing, caching, and reusing the traces, you can speed up gradient computation when training a network. The software can also reuse these traces to speed up network predictions after training.

The trace depends on the size, format, and underlying data type of the layer inputs. That is, the layer triggers a new trace for inputs with a size, format, or underlying data type not contained in the cache. Any inputs differing only by value to a previously cached trace do not trigger a new trace.
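To make the caching rule concrete, here is a hypothetical model of it written in plain MATLAB. It does not call the software's actual tracing machinery. It keys a containers.Map on the input size, data format, and underlying data type. Under this model, inputs that differ only by value reuse an entry, while a new size (for example, a new sequence length) or a new data type adds one.

```matlab
% Hypothetical model of the trace cache: NOT the toolbox's internal implementation.
% A trace key combines the size, data format, and underlying data type of an input.
traceKey = @(X,fmt) sprintf('%s|%s|%s', mat2str(size(X)), fmt, class(X));

cache = containers.Map();   % keys are trace keys; values are placeholders
numTraces = 0;

% Mini-batches in "CBT" layout: 3 channels, 4 observations, varying sequence length.
% 1) length 10, single: new trace
% 2) length 10, single, different values: reuses trace 1
% 3) length 10, double: new trace (different underlying data type)
% 4) length 25, single: new trace (different size)
batches = {single(rand(3,4,10)), single(rand(3,4,10)), ...
           double(rand(3,4,10)), single(rand(3,4,25))};

for k = 1:numel(batches)
    key = traceKey(batches{k}, 'CBT');
    if ~isKey(cache, key)
        cache(key) = true;          % record a new trace
        numTraces = numTraces + 1;
    end
end

fprintf('Traces created: %d of %d mini-batches\n', numTraces, numel(batches));
```

Under this model, a data set with many distinct sequence lengths creates one cache entry per length. That matches the slowdown scenario described under Acceleration Considerations.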

To indicate that the custom layer supports acceleration, also inherit from the nnet.layer.Acceleratable class when defining the custom layer. When a custom layer inherits from nnet.layer.Acceleratable, the software automatically caches traces when passing data through a dlnetwork object.

For example, to indicate that the custom layer myLayer supports acceleration, use this syntax:

```matlab
classdef myLayer < nnet.layer.Layer & nnet.layer.Acceleratable
    ...
end
```

Acceleration Considerations

Because of the nature of caching traces, not all functions support acceleration.

The caching process can cache values or code structures that you might expect to change or that depend on external factors. You must take care when accelerating custom layers that:

- Generate random numbers.
- Use if statements and while loops with conditions that depend on the values of dlarray objects.

Because the caching process requires extra computation, acceleration can lead to longer running code in some cases. This scenario can happen when the software spends time creating new caches that do not get reused often. For example, when you pass multiple mini-batches of different sequence lengths to the function, the software triggers a new trace for each unique sequence length.

When custom layer acceleration causes slowdown, you can disable acceleration either by removing the Acceleratable class from the layer definition or by setting the Acceleration option of the dlnetwork object functions predict and forward to "none".

For more information about enabling acceleration support for custom layers, see Custom Layer Function Acceleration.

Output Layer Properties

Declare the layer properties in the properties section of the class definition.

By default, custom output layers have the following properties:

- Name: Layer name, specified as a character vector or a string scalar. For Layer array input, the trainnet and dlnetwork functions automatically assign names to unnamed layers.
- Description: One-line description of the layer, specified as a character vector or a string scalar. This description appears when the layer is displayed in a Layer array. If you do not specify a layer description, then the software displays "Classification Output" or "Regression Output".
- Type: Type of the layer, specified as a character vector or a string scalar. The value of Type appears when the layer is displayed in a Layer array. If you do not specify a layer type, then the software displays the layer class name.

Custom classification layers also have the following property:

- Classes: Classes of the output layer, specified as a categorical vector, string array, cell array of character vectors, or "auto". If Classes is "auto", then the software automatically sets the classes at training time. If you specify the string array or cell array of character vectors str, then the software sets the classes of the output layer to categorical(str,str).
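Compare categorical(str,str) with categorical(str) to see the effect of the second argument. Passing str as the value set keeps the classes in the order you listed them rather than sorting them. The class names below are hypothetical.

```matlab
% Hypothetical class names, deliberately not in alphabetical order.
str = ["dog" "cat" "fish"];

% Classes as set from str: categories follow the order of str.
classes = categorical(str,str);

% For comparison: without a value set, the categories are sorted.
sortedClasses = categorical(str);

disp(categories(classes)')        % categories in the order dog, cat, fish
disp(categories(sortedClasses)')  % categories in the order cat, dog, fish
```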

Custom regression layers also have the following property:

- ResponseNames: Names of the responses, specified as a cell array of character vectors or a string array. At training time, the software automatically sets the response names according to the training data. The default is {}.

If the layer has no other properties, then you can omit the properties section.

Forward Loss Function

The output layer computes the loss L between predictions and targets using the forward loss function and computes the derivatives of the loss with respect to the predictions using the backward loss function.

The syntax for forwardLoss is loss = forwardLoss(layer,Y,T). The input Y corresponds to the predictions made by the network. These predictions are the output of the previous layer. The input T corresponds to the training targets. The output loss is the loss between Y and T according to the specified loss function. The output loss must be scalar.
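The following script sketches what a forwardLoss body might compute. It uses a mean absolute error (MAE) for 2-D image regression, where Y and T are 1-by-1-by-R-by-N arrays. MAE is an illustrative choice, not a loss that this topic prescribes. The script averages over the R responses and then over the N observations, so the result is scalar, as forwardLoss requires.

```matlab
% Example mini-batch for 2-D image regression: R = 3 responses, N = 2 observations.
% Y and T are 1-by-1-by-R-by-N, matching the "SSCB" layout.
Y = reshape([1 2 3 4 5 6],1,1,3,2);   % predictions
T = zeros(1,1,3,2);                    % training targets

R = size(Y,3);   % number of responses
N = size(Y,4);   % number of observations

% MAE for each observation: average absolute error over the responses (dimension 3).
maePerObservation = sum(abs(Y - T),3)/R;   % 1-by-1-by-1-by-N

% Average over the mini-batch (dimension 4) to get a scalar loss.
loss = sum(maePerObservation,4)/N;
```

For these values the per-observation errors are 6/3 = 2 and 15/3 = 5, so loss is (2 + 5)/2 = 3.5. Inside a layer class, the same lines would form the body of function loss = forwardLoss(layer,Y,T).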

Backward Loss Function

The backward loss function computes the derivatives of the loss with respect to the predictions. If the layer forward loss function supports dlarray objects, then the software automatically determines the backward loss function using automatic differentiation. The derivatives must be real-valued. For a list of functions that support dlarray objects, see List of Functions with dlarray Support. Alternatively, to define a custom backward loss function, create a function named backwardLoss.

The syntax for backwardLoss is dLdY = backwardLoss(layer,Y,T). The input Y contains the predictions made by the network and T contains the training targets. The output dLdY is the derivative of the loss with respect to the predictions Y. The output dLdY must be the same size as the layer input Y.
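If you write backwardLoss yourself, a finite-difference comparison is a quick sanity check that dLdY agrees with the forward loss. The sketch below uses an assumed MAE loss on 1-by-1-by-R-by-N arrays. The derivative of that loss with respect to each prediction is sign(Y - T)/(R*N), which has the same size as Y. The sign function is not differentiable where Y equals T, so the example data avoids that case.

```matlab
% Example mini-batch: 1-by-1-by-R-by-N with R = 3 responses and N = 2 observations.
Y = reshape([1 2 3 4 5 6],1,1,3,2);   % predictions
T = zeros(1,1,3,2);                    % training targets
R = size(Y,3);
N = size(Y,4);

% Forward MAE loss as a function of the predictions (T, R, and N are captured).
lossFcn = @(Yin) sum(sum(abs(Yin - T),3)/R,4)/N;

% Analytic backward loss for MAE: same size as Y.
dLdY = sign(Y - T)/(R*N);

% Central finite difference with respect to the first element of Y.
h = 1e-6;
E = zeros(size(Y));
E(1) = h;
fdGrad = (lossFcn(Y + E) - lossFcn(Y - E))/(2*h);

fprintf('analytic %.6f, finite difference %.6f\n', dLdY(1), fdGrad);
```

This check covers a single element only. The checkLayer function performs a more thorough gradient check on the layer itself.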

For classification problems, the dimensions of T depend on the type of problem.

| Classification Task | Shape | Data Format |
|---|---|---|
| 2-D image classification | 1-by-1-by-K-by-N, where K is the number of classes and N is the number of observations | "SSCB" |
| 3-D image classification | 1-by-1-by-1-by-K-by-N, where K is the number of classes and N is the number of observations | "SSSCB" |
| Sequence-to-label classification | K-by-N, where K is the number of classes and N is the number of observations | "CB" |
| Sequence-to-sequence classification | K-by-N-by-S, where K is the number of classes, N is the number of observations, and S is the sequence length | "CBT" |

The size of Y depends on the output of the previous layer. To ensure that Y is the same size as T, you must include a layer that outputs the correct size before the output layer. For example, to ensure that Y is a 4-D array of prediction scores for K classes, you can include a fully connected layer of size K followed by a softmax layer before the output layer.
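The arrangement described above (a fully connected layer of size K followed by a softmax layer) can be mimicked in plain MATLAB for sequence-to-label classification in the K-by-N ("CB") layout. A softmax over the class dimension turns K-by-N scores into probabilities, so Y has the same size as the one-hot targets T. The scores and labels are made-up example data. Cross-entropy is an assumed choice of loss, and its derivative with respect to Y is -T./(N*Y).

```matlab
% Example sizes: K = 3 classes, N = 4 observations.
K = 3;
N = 4;

% Made-up scores standing in for the output of a fully connected layer of size K.
Z = [ 2.0 -1.0  0.5  0.1
      0.3  1.5 -0.2  0.1
     -1.0  0.2  1.8  0.1];

% Softmax over the class dimension (dimension 1); each column sums to 1.
expZ = exp(Z - max(Z,[],1));   % subtract each column max for numerical stability
Y = expZ ./ sum(expZ,1);

% One-hot targets T (K-by-N) built from made-up class indices.
labels = [1 2 3 2];
T = zeros(K,N);
T(sub2ind([K N], labels, 1:N)) = 1;

% Cross-entropy loss, averaged over the observations (scalar).
loss = -sum(sum(T .* log(Y)))/N;

% Derivative of the loss with respect to Y (same size as Y).
dLdY = -T ./ (N*Y);
```

Because the fourth column of Z has equal scores, the softmax assigns probability 1/3 to each class for that observation.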

For regression problems, the dimensions of T also depend on the type of problem.

| Regression Task | Shape | Data Format |
|---|---|---|
| 2-D image regression | 1-by-1-by-R-by-N, where R is the number of responses and N is the number of observations | "SSCB" |
| 2-D image-to-image regression | h-by-w-by-c-by-N, where h, w, and c are the height, width, and number of channels of the output, respectively, and N is the number of observations | "SSCB" |
| 3-D image regression | 1-by-1-by-1-by-R-by-N, where R is the number of responses and N is the number of observations | "SSSCB" |
| 3-D image-to-image regression | h-by-w-by-d-by-c-by-N, where h, w, d, and c are the height, width, depth, and number of channels of the output, respectively, and N is the number of observations | "SSSCB" |
| Sequence-to-one regression | R-by-N, where R is the number of responses and N is the number of observations | "CB" |
| Sequence-to-sequence regression | R-by-N-by-S, where R is the number of responses, N is the number of observations, and S is the sequence length | "CBT" |

For example, for an image regression network with one response and mini-batches of size 50, T is a 4-D array of size 1-by-1-by-1-by-50.

The size of Y depends on the output of the previous layer. To ensure that Y is the same size as T, you must include a layer that outputs the correct size before the output layer. For example, for image regression with R responses, to ensure that Y is a 4-D array of the correct size, you can include a fully connected layer of size R before the output layer.

The forwardLoss and backwardLoss functions have the following output arguments.

| Function | Output Argument | Description |
|---|---|---|
| forwardLoss | loss | Calculated loss between the predictions Y and the true target T. |
| backwardLoss | dLdY | Derivative of the loss with respect to the predictions Y. |

The backwardLoss function must output dLdY with the size expected by the previous layer, and dLdY must be the same size as Y.

GPU Compatibility

If the layer forward functions fully support dlarray objects, then the layer is GPU compatible. Otherwise, to be GPU compatible, the layer functions must support inputs and return outputs of type gpuArray (Parallel Computing Toolbox).

Many MATLAB® built-in functions support gpuArray (Parallel Computing Toolbox) and dlarray input arguments. For a list of functions that support dlarray objects, see List of Functions with dlarray Support. For a list of functions that execute on a GPU, see Run MATLAB Functions on a GPU (Parallel Computing Toolbox). To use a GPU for deep learning, you must also have a supported GPU device. For information on supported devices, see GPU Computing Requirements (Parallel Computing Toolbox). For more information on working with GPUs in MATLAB, see GPU Computing in MATLAB (Parallel Computing Toolbox).

Check Validity of Layer

If you create a custom deep learning layer, then you can use the checkLayer function to check that the layer is valid. The function checks layers for validity, GPU compatibility, correctly defined gradients, and code generation compatibility. To check that a layer is valid, run the following command:

```matlab
checkLayer(layer,layout)
```

Here, layer is an instance of the layer and layout is a networkDataLayout object specifying the valid sizes and data formats for inputs to the layer. To check with multiple observations, use the ObservationDimension option. To run the check for code generation compatibility, set the CheckCodegenCompatibility option to 1 (true). For large input sizes, the gradient checks take longer to run. To speed up the check, specify a smaller valid input size. For more information, see Check Custom Layer Validity.

See Also

trainnet | trainingOptions | dlnetwork | checkLayer | findPlaceholderLayers | replaceLayer | PlaceholderLayer