dlnetwork

Deep learning neural network

Description

A dlnetwork object specifies a deep learning neural network architecture.

Tip

For most deep learning tasks, you can use a pretrained neural network and adapt it to your own data. For an example showing how to use transfer learning to retrain a convolutional neural network to classify a new set of images, see Retrain Neural Network to Classify New Images. Alternatively, you can create and train neural networks from scratch using the trainnet and trainingOptions functions.

If the trainingOptions function does not provide the training options that you need for your task, you can create a custom training loop using automatic differentiation. To learn more, see Train Network Using Custom Training Loop.
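As a minimal sketch of such a loop, the following trains a small dlnetwork on random toy data using dlfeval, dlgradient, and adamupdate. The data sizes, layer array, learning rate, and iteration count are illustrative assumptions. For brevity, the loss and gradient steps are written as anonymous functions rather than as the separate model loss function that custom training loops usually define.

```matlab
% Toy problem (assumption): 64 random observations, 4 features, 3 classes.
rng(0)
numFeatures = 4;
numClasses = 3;
numObs = 64;

layers = [
    featureInputLayer(numFeatures)
    fullyConnectedLayer(16)
    reluLayer
    fullyConnectedLayer(numClasses)
    softmaxLayer];
net = dlnetwork(layers);

% Inputs and one-hot targets as formatted dlarray objects ("CB" = channel, batch).
X = dlarray(rand(numFeatures,numObs,"single"),"CB");
labels = randi(numClasses,1,numObs);
T = zeros(numClasses,numObs,"single");
T(sub2ind(size(T),labels,1:numObs)) = 1;
T = dlarray(T,"CB");

% Loss uses forward (training mode); dlgradient must run inside dlfeval.
lossFcn = @(net,X,T) crossentropy(forward(net,X),T);
gradFcn = @(net,X,T) dlgradient(lossFcn(net,X,T),net.Learnables);

% Adam moving averages start empty and are updated every iteration.
averageGrad = [];
averageSqGrad = [];
learnRate = 0.01;
for iteration = 1:50
    gradients = dlfeval(gradFcn,net,X,T);
    [net,averageGrad,averageSqGrad] = adamupdate(net,gradients, ...
        averageGrad,averageSqGrad,iteration,learnRate);
end

% Final training loss as a plain numeric scalar.
loss = extractdata(lossFcn(net,X,T));
```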

If the trainnet function does not provide the loss function that you need for your task, you can specify a custom loss function to trainnet as a function handle. For loss functions that require more inputs than the predictions and targets (for example, loss functions that require access to the neural network or additional inputs), train the model using a custom training loop. To learn more, see Train Network Using Custom Training Loop.
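For example, a custom loss can be passed to trainnet as a function handle of the form @(Y,T), where Y holds the predictions and T the targets. The sketch below assumes toy regression data and a hypothetical loss that adds a weighted absolute-error term to the squared error. Any expression built from operations that support dlarray could be used instead.

```matlab
% Toy regression data (assumption): 100 observations, 4 features.
rng(0)
X = rand(100,4);
T = X*[1;2;3;4] + 0.1*randn(100,1);

layers = [
    featureInputLayer(4)
    fullyConnectedLayer(8)
    reluLayer
    fullyConnectedLayer(1)];

% Hypothetical custom loss: mean squared error plus 0.1 times mean absolute error.
lossFcn = @(Y,T) mean((Y-T).^2,"all") + 0.1*mean(abs(Y-T),"all");

options = trainingOptions("adam",MaxEpochs=20,Verbose=false);
net = trainnet(X,T,layers,lossFcn,options);
```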

If Deep Learning Toolbox™ does not provide the layers that you need for your task, you can create a custom layer. To learn more, see Define Custom Deep Learning Layers. For models that cannot be specified as networks of layers, you can define the model as a function. To learn more, see Train Network Using Model Function.

For more information about which training method to use for which task, see Train Deep Learning Model in MATLAB.

Creation

Syntax

Description

Empty Network

net = dlnetwork creates a dlnetwork object with no layers. Use this syntax to create a neural network from scratch. (since R2024a)
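A sketch, assuming R2024a or later: start from an empty network, add layers with addLayers, connect a separately added layer with connectLayers, and then initialize the network. The layer names "in", "fc", "relu", and "fcOut" and the layer sizes are hypothetical.

```matlab
% Empty network with no layers.
net = dlnetwork;

% Layers added as an array are connected in series.
layers = [
    featureInputLayer(3,Name="in")
    fullyConnectedLayer(5,Name="fc")
    reluLayer(Name="relu")];
net = addLayers(net,layers);

% A separately added layer is unconnected until connectLayers wires it up.
net = addLayers(net,fullyConnectedLayer(2,Name="fcOut"));
net = connectLayers(net,"relu","fcOut");

% The input layer determines the parameter sizes during initialization.
net = initialize(net);
```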

Network with Input Layers

net = dlnetwork(layers) creates an initialized neural network from the specified layers, using the input layers in layers to determine the size and format of the learnable and state parameters of the neural network.

Use this syntax when layers defines a complete single-input neural network with layers arranged in series and with an input layer.
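For example, assuming a small image classification architecture with 28-by-28 grayscale inputs (chosen only for illustration), the input layer fixes the parameter sizes, so the resulting network can be used directly with predict. Input normalization is disabled here so that no statistics from training data are needed.

```matlab
layers = [
    imageInputLayer([28 28 1],Normalization="none")
    convolution2dLayer(3,8,Padding="same")
    reluLayer
    fullyConnectedLayer(10)
    softmaxLayer];
net = dlnetwork(layers);

% Batch of two random images: spatial, spatial, channel, batch.
X = dlarray(rand(28,28,1,2,"single"),"SSCB");
Y = predict(net,X);
```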

net = dlnetwork(layers,OutputNames=names) also sets the OutputNames property. The OutputNames property specifies the layers or layer outputs that correspond to the network outputs.

Use this syntax when layers defines a complete single-input, multi-output neural network with layers arranged in series and with an input layer.
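A sketch with hypothetical layer names, in which the output of the intermediate layer "relu" is returned in addition to the output of the final layer "fc2". predict then returns one output for each entry of OutputNames, in the same order.

```matlab
layers = [
    featureInputLayer(4)
    fullyConnectedLayer(8,Name="fc1")
    reluLayer(Name="relu")
    fullyConnectedLayer(3,Name="fc2")];
net = dlnetwork(layers,OutputNames=["relu","fc2"]);

% Five random observations with four features each.
X = dlarray(rand(4,5,"single"),"CB");
[Yrelu,Yfc2] = predict(net,X);
```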

net = dlnetwork(layers,Initialize=tf) specifies whether to initialize the learnable and state parameters of the neural network. When tf is 1 (true), this syntax is equivalent to net = dlnetwork(layers). When tf is 0 (false), this syntax is equivalent to creating an empty network and then adding layers using the addLayers function.
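A sketch of deferred initialization, assuming a small feature-input architecture: the network is created uninitialized, can still be edited, and is initialized explicitly with the initialize function once the architecture is complete.

```matlab
layers = [
    featureInputLayer(4)
    fullyConnectedLayer(8)
    reluLayer];

% Uninitialized network: learnable parameters are not yet created.
net = dlnetwork(layers,Initialize=false);
wasInitialized = net.Initialized;

% Further edits (addLayers, connectLayers, ...) could be made here.
net = initialize(net);
```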

Network with Unconnected Inputs

net = dlnetwork(layers,X1,...,XN) creates an initialized neural network, using the example data X1,...,XN to determine the size and format of the learnable parameters and state values of the neural network, where N is the number of network inputs.

Use this syntax when layers defines a complete neural network with layers arranged in series and with inputs that are not connected to input layers.
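For example, a layer array with no input layer can be initialized from example data. The sketch assumes feature data with 6 channels. Only the size and format of the example input matter, not its values, so an array of zeros is used.

```matlab
% No input layer: the first layer's input is left unconnected.
layers = [
    fullyConnectedLayer(8)
    reluLayer
    fullyConnectedLayer(2)];

% Example input: 6 channels, batch of 1.
X = dlarray(zeros(6,1,"single"),"CB");
net = dlnetwork(layers,X);

Y = predict(net,X);
```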

net = dlnetwork(layers,X1,...,XN,OutputNames=names) also sets the OutputNames property.

Use this syntax when layers defines a complete neural network with multiple outputs, layers arranged in series, and inputs that are not connected to input layers.

Conversion

net = dlnetwork(prunableNet) converts a TaylorPrunableNetwork to a dlnetwork object by removing the filters selected for pruning from the convolution layers of prunableNet, and returns a compressed dlnetwork object that has fewer learnable parameters and is smaller in size.

net = dlnetwork(mdl) converts the model mdl to a dlnetwork object.

Input Arguments

Properties

Object Functions

addInputLayer | Add input layer to network |

addLayers | Add layers to neural network |

removeLayers | Remove layers from neural network |

connectLayers | Connect layers in neural network |

disconnectLayers | Disconnect layers in neural network |

replaceLayer | Replace layer in neural network |

getLayer | Look up a layer by name or path |

expandLayers | Expand network layers |

groupLayers | Group layers into network layers |

summary | Stampare il riepilogo della rete |

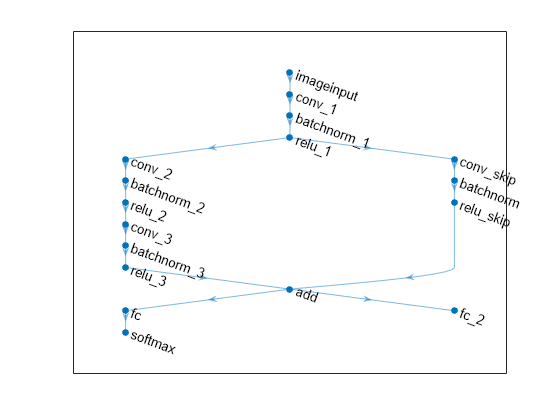

plot | Tracciare l'architettura della rete neurale |

initialize | Initialize learnable and state parameters of neural network |

predict | Compute deep learning network output for inference |

forward | Compute deep learning network output for training |

resetState | Reset state parameters of neural network |

setL2Factor | Set L2 regularization factor of layer learnable parameter |

setLearnRateFactor | Set learn rate factor of layer learnable parameter |

getLearnRateFactor | Get learn rate factor of layer learnable parameter |

getL2Factor | Get L2 regularization factor of layer learnable parameter |
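A short sketch that exercises several of these object functions on a hypothetical network containing a batch normalization layer, which has state parameters. It prints a summary, freezes the weights of the layer named "fc" by setting their learn rate factor to 0, runs forward in training mode and stores the updated state, and then resets the state. The layer name and sizes are assumptions for illustration.

```matlab
layers = [
    featureInputLayer(4)
    fullyConnectedLayer(8,Name="fc")
    batchNormalizationLayer
    reluLayer];
net = dlnetwork(layers);
summary(net)

% Freeze the weights of layer "fc" (a learn rate factor of 0 disables updates).
net = setLearnRateFactor(net,"fc","Weights",0);
factor = getLearnRateFactor(net,"fc","Weights");

% forward runs in training mode and also returns the updated state values.
X = dlarray(rand(4,16,"single"),"CB");
[Y,state] = forward(net,X);
net.State = state;

% resetState returns the network with its state parameters reset.
net = resetState(net);
```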

Examples

Extended Capabilities

Version History

Introduced in R2019b

See Also

trainnet | trainingOptions | dlarray | dlgradient | dlfeval | forward | predict | initialize | TaylorPrunableNetwork