Collect Model and Testing Metrics

Assess the quality and compliance of your models by using:

- Model metrics, to check whether your models comply with industry-standard and custom modeling guidelines.
- Model design metrics, to analyze the size, architecture, and complexity of the MATLAB®, Simulink®, and Stateflow® artifacts in your project.
- Model and code testing metrics, to assess the traceability and completeness of the models, requirements, tests, and test results in your project.

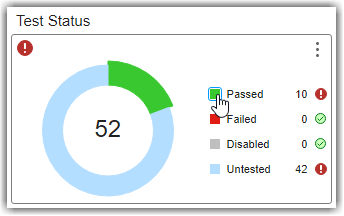

Use the dashboards associated with the metrics to visualize the metric data. As you develop and test your models, the dashboards help you monitor your progress and address compliance issues. For more information, see Explore Metric Results, Monitor Progress, and Identify Issues.

Categories

- Model Metrics

Collect model compliance metric data and create custom model metrics

- Model Design Metrics

Collect metrics for the size, architecture, and complexity of models and other design artifacts

- Model and Code Testing Metrics

Collect metrics for model testing, including requirements-based testing, and code testing