Acoustics-Based Machine Fault Recognition

In this example, you develop a deep learning model to detect faults in an air compressor using acoustic measurements. After developing the model, you package the system so that you can recognize faults based on streaming input data.

Data Preparation

Download and unzip the air compressor data set [1]. This data set consists of recordings from air compressors in a healthy state or one of seven faulty states.

```matlab
rng default
downloadFolder = matlab.internal.examples.downloadSupportFile("audio","AirCompressorDataset/AirCompressorDataset.zip");
dataFolder = tempdir;
unzip(downloadFolder,dataFolder)
dataset = fullfile(dataFolder,"AirCompressorDataset");
```

Create an audioDatastore object to manage the data and split it into training and validation sets.

```matlab
ads = audioDatastore(dataset, ...
    IncludeSubfolders=true, ...
    OutputDataType="single");
```

You can reduce the data set used in this example to speed up the runtime at the cost of accuracy. Setting speedupExample to true keeps a label-balanced 20% subset of the files. In general, reducing the data set is good practice during development and debugging.

```matlab
speedupExample = false;
if speedupExample
    lbls = folders2labels(ads.Files);
    idxs = splitlabels(lbls,0.2);
    ads = subset(ads,idxs{1});
end
```

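The data labels are encoded in the names of their containing folders. To split the data into training and validation sets, use folders2labels to get the labels and splitlabels to split them, sending 90% of the files of each label to the training set.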

```matlab
lbls = folders2labels(ads.Files);
idxs = splitlabels(lbls,0.9);

adsTrain = subset(ads,idxs{1});
labelsTrain = lbls(idxs{1});

adsValidation = subset(ads,idxs{2});
labelsValidation = lbls(idxs{2});
```

Call countlabels to inspect the distribution of labels in the train and validation sets.

```matlab
countlabels(labelsTrain)
```

```text
ans=8×3 table

      Label      Count    Percent
    _________    _____    _______

    Bearing       203      12.5
    Flywheel      203      12.5
    Healthy       203      12.5
    LIV           203      12.5
    LOV           203      12.5
    NRV           203      12.5
    Piston        203      12.5
    Riderbelt     203      12.5
```

```matlab
countlabels(labelsValidation)
```

```text
ans=8×3 table

      Label      Count    Percent
    _________    _____    _______

    Bearing       22       12.5
    Flywheel      22       12.5
    Healthy       22       12.5
    LIV           22       12.5
    LOV           22       12.5
    NRV           22       12.5
    Piston        22       12.5
    Riderbelt     22       12.5
```
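Both tables are consistent with a stratified 90/10 split of 225 recordings per class (203 + 22), since round(0.9 × 225) = round(202.5) = 203. The toolbox-free sketch below reproduces those counts with a per-class shuffle-and-cut. It only illustrates the idea of a label-stratified split on a synthetic label vector; it is not the implementation of splitlabels.

```matlab
% Synthetic labels mirroring the tables above (assumed): 8 classes x 225 recordings
classNames = ["Bearing","Flywheel","Healthy","LIV","LOV","NRV","Piston","Riderbelt"];
lbls = categorical(repelem(classNames,225)');
p = 0.9;                                   % fraction of each class used for training

rng(0)                                     % reproducible shuffling
trainIdx = [];
validationIdx = [];
cats = categories(lbls);
for k = 1:numel(cats)
    idx = find(lbls == cats{k});           % indices of all files with this label
    idx = idx(randperm(numel(idx)));       % shuffle within the class
    numTrain = round(p*numel(idx));        % round(0.9*225) = 203
    trainIdx = [trainIdx; idx(1:numTrain)];                 %#ok<AGROW>
    validationIdx = [validationIdx; idx(numTrain+1:end)];   %#ok<AGROW>
end

trainCounts = countcats(lbls(trainIdx))'
validationCounts = countcats(lbls(validationIdx))'
```

Because the cut is made separately for each class, every class ends up with the same 90/10 proportions, which is why all Percent values in both tables are 12.5.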

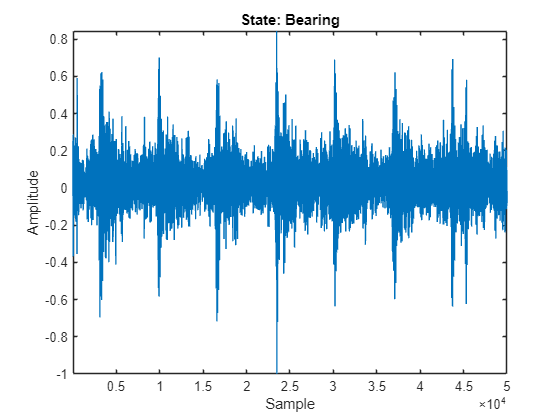

The data consists of time-series acoustic recordings from faulty or healthy air compressors, so there are strong relationships between samples in time. Listen to a recording and plot the waveform.

```matlab
[sampleData,sampleDataInfo] = read(adsTrain);
fs = sampleDataInfo.SampleRate;
soundsc(gather(sampleData),fs)

plot(sampleData)
xlabel("Sample")
ylabel("Amplitude")
title("State: " + string(labelsTrain(1)))
axis tight
```

Because the samples are related in time, you can use a recurrent neural network (RNN) to model the data. A long short-term memory (LSTM) network is a popular choice of RNN because it is designed to mitigate the vanishing gradient problem that makes plain RNNs hard to train on long sequences. Before you can train the network, you need to prepare the data. Rather than feeding raw one-dimensional samples to the model, it is often better to transform the signal or extract features from it, which gives the model a richer representation to learn from.
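For reference, the textbook LSTM cell update is shown below, using W, R, and b for the input weights, recurrent weights, and bias (the InputWeights, RecurrentWeights, and Bias properties of lstmLayer), σ for the sigmoid, and ⊙ for elementwise multiplication. Because the cell state c_t is updated additively, the gradient along the direct cell-state path is scaled by the forget gate instead of being repeatedly multiplied by a recurrent weight matrix, which is why LSTMs cope better with vanishing gradients than plain RNNs.

$$
\begin{aligned}
i_t &= \sigma(W_i x_t + R_i h_{t-1} + b_i) \\
f_t &= \sigma(W_f x_t + R_f h_{t-1} + b_f) \\
g_t &= \tanh(W_g x_t + R_g h_{t-1} + b_g) \\
o_t &= \sigma(W_o x_t + R_o h_{t-1} + b_o) \\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
h_t &= o_t \odot \tanh(c_t) \\[4pt]
\frac{\partial c_t}{\partial c_{t-1}} &= \operatorname{diag}(f_t) \quad \text{(holding the gates fixed)}
\end{aligned}
$$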

Feature Engineering

The next step is to extract a set of acoustic features to use as inputs to the network. Audio Toolbox™ enables you to extract spectral descriptors that are commonly used as inputs in machine learning tasks. You can extract the features using individual functions, or you can use audioFeatureExtractor to simplify the workflow and extract them all at once. The extracted features have time along the rows, which is the expected format for sequences in Deep Learning Toolbox™.

```matlab
windowLength = 512;
overlapLength = 0;
afe = audioFeatureExtractor(SampleRate=fs, ...
    Window=hamming(windowLength,"periodic"), ...
    OverlapLength=overlapLength, ...
    spectralCentroid=true, ...
    spectralCrest=true, ...
    spectralDecrease=true, ...
    spectralEntropy=true, ...
    spectralFlatness=true, ...
    spectralFlux=false, ...
    spectralKurtosis=true, ...
    spectralRolloffPoint=true, ...
    spectralSkewness=true, ...
    spectralSlope=true, ...
    spectralSpread=true);

tic
trainFeatures = extract(afe,adsTrain);
disp("Feature extraction of train set took " + toc + " seconds.");
```

Feature extraction of train set took 12.7965 seconds.
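To make one of the descriptors concrete, the sketch below computes the spectral centroid, the power-weighted mean frequency of a frame, by hand with the same frame settings as afe: a 16 kHz sample rate, a 512-sample periodic Hamming window, and a 512-point FFT. The test tone and variable names are assumptions for illustration; the code does not call audioFeatureExtractor and only needs base MATLAB.

```matlab
% Sketch: spectral centroid of one frame, computed without Audio Toolbox
fs = 16000;                              % sample rate of the data set
N = 512;                                 % window length = FFT length
n = (0:N-1)';
w = 0.54 - 0.46*cos(2*pi*n/N);           % periodic Hamming window, same values as hamming(N,"periodic")
x = sin(2*pi*1000*n/fs);                 % assumed test frame: a 1 kHz tone

Y = fft(x.*w,N);
P = abs(Y(1:N/2+1)).^2;                  % one-sided power spectrum, bins 0..N/2
P([1 end]) = 0.5*P([1 end]);             % halve DC and Nyquist bins, as the generated extractAudioFeatures does
f = (fs/N)*(0:N/2)';                     % bin center frequencies in Hz

centroid = sum(f.*P)/sum(P);             % power-weighted mean frequency
fprintf("Spectral centroid: %.2f Hz\n",centroid)
```

The 1 kHz tone falls exactly on FFT bin 32, and the periodic Hamming window spreads its energy symmetrically over bins 31 to 33, so the centroid comes out at 1000 Hz. Any overall power normalization cancels in the ratio, so it is omitted here.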

Data Augmentation

The training set contains a relatively small number of acoustic recordings for training a deep learning model. A popular method to enlarge the data set is mixup, in which you augment the data set by mixing the features and labels of two different class instances. In the reformulation of mixup in [2], the label of each mix is drawn from a probability distribution over the two source labels instead of being a mix of them. The supporting function, mixup, takes the training features, the associated labels, and the number of mixes per observation, and outputs the mixes and their associated labels.

```matlab
numMixesPerInstance = 2;
tic
[augData,augLabels] = mixup(trainFeatures,labelsTrain,numMixesPerInstance);
trainLabels = cat(1,labelsTrain,augLabels);
trainFeatures = cat(1,trainFeatures,augData);
disp("Feature augmentation of train set took " + toc + " seconds.");
```

Feature augmentation of train set took 0.11583 seconds.
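The supporting mixup function is not listed in this section. The sketch below shows one way label-sampling mixup can work on sequences of feature vectors, under stated assumptions: the mixing coefficient lambda is drawn uniformly from [0,1] (the original mixup formulation draws it from a Beta distribution), the partner instance is picked at random, and the toy features and labels are made up. It is not the example's supporting function.

```matlab
% Toy inputs (assumed): 4 sequences of 100 hops x 10 features, 2 classes
rng(0)
features = arrayfun(@(k) randn(100,10,"single"),(1:4)',UniformOutput=false);
labels = categorical(["Healthy";"Healthy";"Bearing";"Bearing"]);
numMixesPerInstance = 2;

numObs = numel(features);
numAug = numObs*numMixesPerInstance;
augData = cell(numAug,1);
augLabels = repmat(labels(1),numAug,1);      % preallocate; every entry is overwritten below
k = 0;
for n = 1:numObs
    for m = 1:numMixesPerInstance
        k = k + 1;
        partner = randi(numObs);             % second instance, chosen at random
        lambda = rand;                       % mixing coefficient, assumed U(0,1)
        a = features{n};
        b = features{partner};
        T = min(size(a,1),size(b,1));        % align sequence lengths in case they differ
        augData{k} = lambda*a(1:T,:) + (1-lambda)*b(1:T,:);
        % Draw the label instead of mixing it: keep labels(n) with probability lambda
        if rand < lambda
            augLabels(k) = labels(n);
        else
            augLabels(k) = labels(partner);
        end
    end
end
```

Because lambda also sets the probability of keeping the first label, a mix dominated by one instance is usually labeled as that instance, and every augmented label remains one of the original class labels.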

Generate Validation Features

Repeat the feature extraction for the validation features.

```matlab
tic
featuresValidation = extract(afe,adsValidation);
disp("Feature extraction of validation set took " + toc + " seconds.");
```

Feature extraction of validation set took 1.3533 seconds.

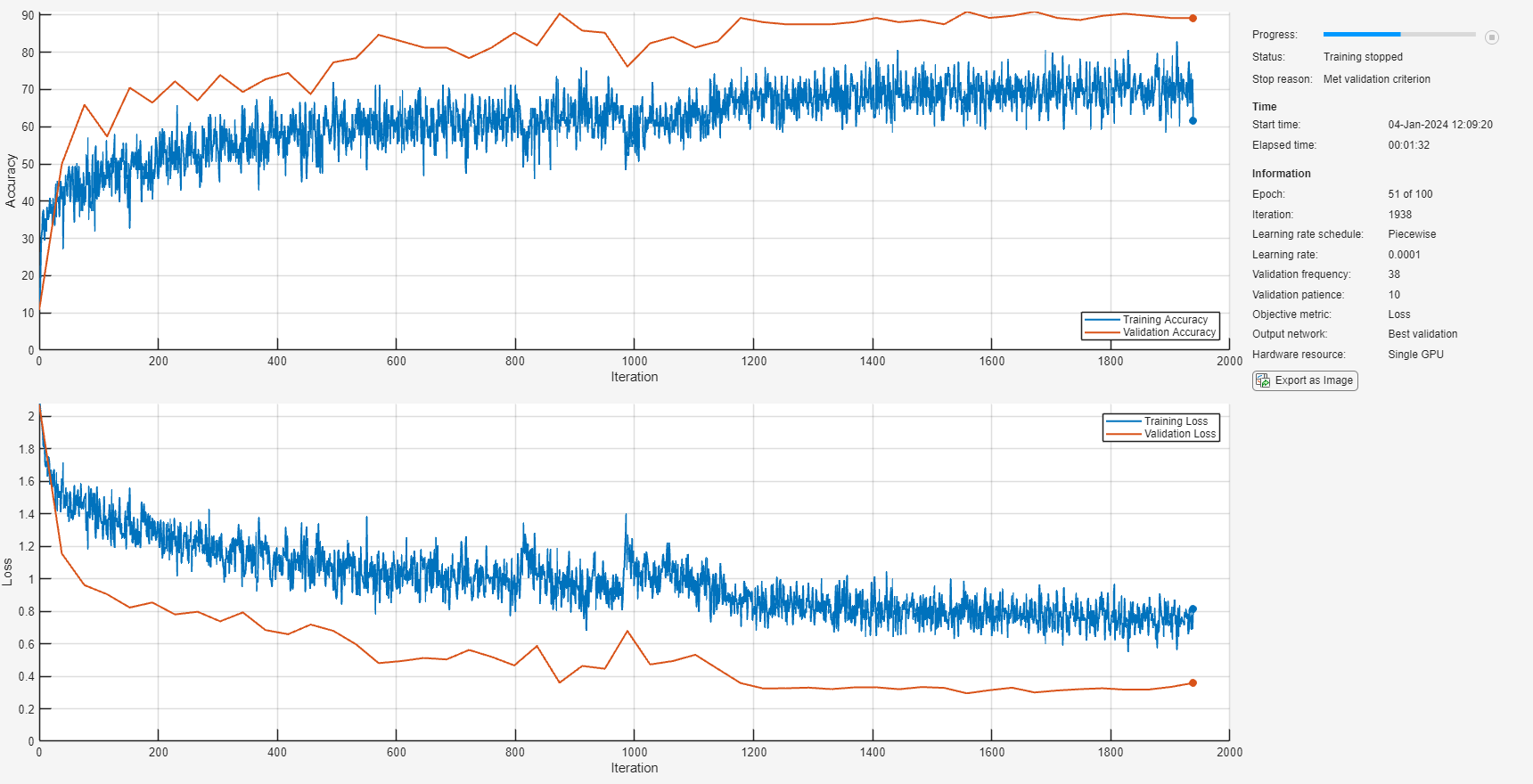

Train Model

Next, you define and train a network. The pretrained model is also placed in the current folder when you open this example. To skip training the network, simply continue to the next section.

Define Network

An LSTM layer learns long-term dependencies between time steps of time series or sequence data. The first lstmLayer outputs the full sequence, which passes through a dropout layer that reduces overfitting. The second lstmLayer outputs only the last time step, and the fully connected and softmax layers map that output to scores for the eight classes.

```matlab
numHiddenUnits = 100;
dropProb = 0.2;
layers = [ ...
    sequenceInputLayer(afe.FeatureVectorLength,Normalization="zscore")
    lstmLayer(numHiddenUnits,OutputMode="sequence")
    dropoutLayer(dropProb)
    lstmLayer(numHiddenUnits,OutputMode="last")
    fullyConnectedLayer(numel(unique(labelsTrain)))
    softmaxLayer];
```
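To relate this architecture to the weight-memory consideration discussed in the streaming section, you can count its learnable parameters by hand. The sketch assumes the standard lstmLayer parameter shapes (InputWeights of size 4H-by-D, RecurrentWeights of size 4H-by-H, Bias of size 4H-by-1) with D = 10 features, H = 100 hidden units, and 8 classes. The dropout and softmax layers have no learnable parameters.

```matlab
numFeatures = 10;        % afe.FeatureVectorLength: 10 spectral descriptors are enabled
numHiddenUnits = 100;
numClasses = 8;

% Per LSTM layer: InputWeights (4H x D) + RecurrentWeights (4H x H) + Bias (4H x 1)
lstmParams = @(D,H) 4*H*D + 4*H*H + 4*H;

p1 = lstmParams(numFeatures,numHiddenUnits);       % first LSTM layer
p2 = lstmParams(numHiddenUnits,numHiddenUnits);    % second LSTM layer
p3 = numHiddenUnits*numClasses + numClasses;       % fully connected weights + bias
totalParams = p1 + p2 + p3;

bytesSingle = 4*totalParams;                       % 4 bytes per single-precision value
fprintf("Learnable parameters: %d (%.1f KiB as single)\n",totalParams,bytesSingle/1024)
```

This gives 125,608 learnable parameters, about 491 KiB in single precision, before counting the per-feature mean and standard deviation that sequenceInputLayer stores for z-score normalization.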

Define Network Hyperparameters

To define hyperparameters for the network, use trainingOptions.

```matlab
miniBatchSize = 128;
validationFrequency = floor(numel(trainFeatures)/miniBatchSize);
options = trainingOptions("adam", ...
    Metric="accuracy", ...
    MiniBatchSize=miniBatchSize, ...
    MaxEpochs=100, ...
    Plots="training-progress", ...
    Verbose=false, ...
    Shuffle="every-epoch", ...
    LearnRateSchedule="piecewise", ...
    LearnRateDropPeriod=30, ...
    LearnRateDropFactor=0.1, ...
    ValidationData={featuresValidation,labelsValidation}, ...
    ValidationFrequency=validationFrequency, ...
    ValidationPatience=10, ...
    OutputNetwork="best-validation-loss");
```
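With speedupExample set to false, the validation frequency works out to roughly one validation pass per epoch: 1624 training files plus 2 × 1624 mixes gives 4872 observations, and floor(4872/128) = 38 iterations. The sketch below recomputes that value and the piecewise learning-rate schedule. It assumes the default InitialLearnRate of 0.001 for the "adam" solver, since the options above do not set it.

```matlab
numTrain = 8*203;                  % training files, from countlabels(labelsTrain)
numAug = 2*numTrain;               % numMixesPerInstance = 2 mixes per file
miniBatchSize = 128;
numObservations = numTrain + numAug;
validationFrequency = floor(numObservations/miniBatchSize)

% Piecewise schedule: multiply the rate by LearnRateDropFactor every LearnRateDropPeriod epochs
initialLearnRate = 1e-3;           % assumed default InitialLearnRate for "adam"
dropPeriod = 30;
dropFactor = 0.1;
epochs = (1:100)';
learnRate = initialLearnRate*dropFactor.^floor((epochs-1)/dropPeriod);
```

Under these assumptions the rate drops from 1e-3 to 1e-4 at epoch 31, to 1e-5 at epoch 61, and to 1e-6 at epoch 91, although ValidationPatience=10 can stop training before the later drops take effect.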

Train Network

To train the network, use trainnet.

```matlab
airCompNet = trainnet(trainFeatures,trainLabels,layers,"crossentropy",options);
```

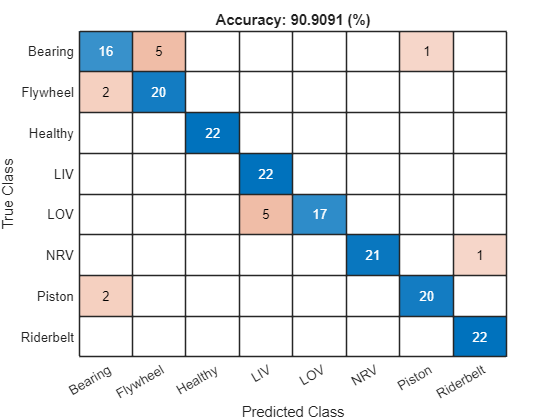

Evaluate Network

View the confusion chart for the validation data.

```matlab
validationResults = minibatchpredict(airCompNet,featuresValidation);
uniqueLabels = unique(labelsTrain);
validationResults = scores2label(validationResults,uniqueLabels,2);
confusionchart(labelsValidation,validationResults, ...
    Title="Accuracy: " + mean(validationResults == labelsValidation)*100 + " (%)");
```
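scores2label turns each row of scores into the label of its highest-scoring class. The toolbox-free sketch below shows that argmax step and the accuracy formula used in the confusion chart title on a made-up three-class example. It approximates what scores2label does in this use and is not its implementation.

```matlab
classNames = categorical(["Bearing";"Healthy";"LIV"]);    % toy class list (assumed)
% One row per observation, one column per class
scores = single([0.7 0.2 0.1; 0.1 0.8 0.1; 0.3 0.3 0.4]);

[~,idx] = max(scores,[],2);          % column index of the highest score in each row
predicted = classNames(idx);         % Bearing, Healthy, LIV
trueLabels = classNames([1;3;3]);    % toy ground truth: Bearing, LIV, LIV

accuracy = mean(predicted == trueLabels)*100
```

Two of the three toy predictions match, so the accuracy is about 66.7%.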

Model Streaming Detection

Create Functions to Process Data in a Streaming Loop

Once you have a trained network with satisfactory performance, you can apply the network to test data in a streaming fashion.

There are many additional considerations to take into account to make the system work in a real-world embedded system. For example:

- The rate or interval at which classification can be performed with accurate results
- The size of the network in terms of generated code (program memory) and weights (data memory)
- The efficiency of the network in terms of computation speed

In MATLAB, you can mimic how the network is deployed and used in hardware on a real embedded system and begin to answer these important questions.
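For the rate and computation-speed questions, one simple check is whether each frame is processed faster than it arrives. With 512-sample frames, no overlap, and a 16 kHz sample rate, each frame covers 32 ms of audio. The sketch below compares per-frame processing times with that budget. The timing values are hypothetical placeholders; the streaming loop in Test Streaming Loop writes measured times to timingBuffer, which you could read with read(timingBuffer) instead. Desktop timing in MATLAB is only a rough proxy for timing on the embedded target.

```matlab
fs = 16000;                          % sample rate of the recordings
windowLength = 512;                  % samples per frame, no overlap
frameDuration = windowLength/fs;     % seconds of audio per frame (0.032 s)

% Hypothetical per-frame processing times in seconds (placeholders, not measurements)
frameTimes = [0.004; 0.006; 0.005; 0.031; 0.012];

realTimeFactor = frameTimes/frameDuration;   % values below 1 mean faster than real time
fprintf("Frame budget: %.1f ms\n",1000*frameDuration)
fprintf("Mean load: %.0f%%, worst case: %.0f%%\n", ...
    100*mean(realTimeFactor),100*max(realTimeFactor))
keepsUp = all(frameTimes < frameDuration)
```

Looking at the worst case rather than only the mean matters here, because a single slow frame can overrun the input buffer on a real-time system.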

Create MATLAB Function Compatible with C/C++ Code Generation

Once you train your deep learning model, you will deploy it to an embedded target. That means you also need to deploy the code used to perform the feature extraction. Use the generateMATLABFunction method of audioFeatureExtractor to generate a MATLAB function compatible with C/C++ code generation. Specify IsStreaming as true so that the generated function is optimized for stream processing.

```matlab
filename = fullfile(pwd,"extractAudioFeatures");
generateMATLABFunction(afe,filename,IsStreaming=true);
```

Combine Streaming Feature Extraction and Classification

Save the trained network as a MAT file.

```matlab
labels = uniqueLabels;
save("AirCompressorFaultRecognitionModel.mat","airCompNet","labels")
```

Create a function that combines the feature extraction and deep learning classification.

```matlab
type recognizeAirCompressorFault.m
```

```matlab
function scores = recognizeAirCompressorFault(audioIn,rs)
% This is a streaming classifier function
persistent airCompNet
if isempty(airCompNet)
    airCompNet = coder.loadDeepLearningNetwork('AirCompressorFaultRecognitionModel.mat');
end
if rs
    airCompNet = resetState(airCompNet);
end
% Extract features using function
features = extractAudioFeatures(audioIn);
% Classify
if isa(airCompNet,'dlnetwork')
    [scores,state] = predict(airCompNet,dlarray(features,"CT"));
    airCompNet.State = state;
else
    [airCompNet,scores] = predictAndUpdateState(airCompNet,features);
end
end
```

```matlab
function featureVector = extractAudioFeatures(x)
%extractAudioFeatures Extract multiple features from streaming audio
% featureVector = extractAudioFeatures(audioIn) returns audio features
% extracted from audioIn.
%
% Parameters of the audioFeatureExtractor used to generated this
% function must be honored when calling this function.
% - Sample rate of the input should be 16000 Hz.
% - Frame length of the input should be 512 samples.
% - Successive frames of the input should be overlapped by
% 0 samples before calling extractAudioFeatures.
%
%
% % Example 1: Extract features
% source = dsp.ColoredNoise();
% inputBuffer = dsp.AsyncBuffer;
% for ii = 1:10
% audioIn = source();
% write(inputBuffer,audioIn);
% while inputBuffer.NumUnreadSamples > 512
% x = read(inputBuffer,512,0);
% featureVector = extractAudioFeatures(x);
% % ... do something with featureVector ...
% end
% end
%
%
% % Example 2: Generate code
% targetDataType = "single";
% codegen extractAudioFeatures -args {ones(512,1,targetDataType)}
% source = dsp.ColoredNoise(OutputDataType=targetDataType);
% inputBuffer = dsp.AsyncBuffer;
% for ii = 1:10
% audioIn = source();
% write(inputBuffer,audioIn);
% while inputBuffer.NumUnreadSamples > 512
% x = read(inputBuffer,512,0);
% featureVector = extractAudioFeatures_mex(x);
% % ... do something with featureVector ...
% end
% end
%
% See also audioFeatureExtractor, dsp.AsyncBuffer, codegen.
% Generated by audioFeatureExtractor on 14-Dec-2023 11:37:58 UTC-05:00
%#codegen

dataType = underlyingType(x);
numChannels = size(x,2);

props = coder.const(getProps(dataType));

persistent config outputIndex
if isempty(outputIndex)
    [config, outputIndex] = coder.const(@getConfig,dataType,props);
end

% Preallocate feature vector
featureVector = coder.nullcopy(zeros(props.NumFeatures,numChannels,dataType));

% Fourier transform
Y = fft(bsxfun(@times,x,props.Window),props.FFTLength);
Z = Y(config.OneSidedSpectrumBins,:);
Zpower = real(Z.*conj(Z));

% Linear spectrum
linearSpectrum = Zpower(config.linearSpectrum.FrequencyBins,:)*config.linearSpectrum.NormalizationFactor;
linearSpectrum([1,end],:) = 0.5*linearSpectrum([1,end],:);
linearSpectrum = reshape(linearSpectrum,[],1,numChannels);

% Spectral descriptors
[featureVector(outputIndex.spectralKurtosis,:),featureVector(outputIndex.spectralSpread,:),featureVector(outputIndex.spectralCentroid,:)] = spectralKurtosis(linearSpectrum,config.SpectralDescriptorInput.FrequencyVector);
featureVector(outputIndex.spectralSkewness,:) = spectralSkewness(linearSpectrum,config.SpectralDescriptorInput.FrequencyVector);
featureVector(outputIndex.spectralCrest,:) = spectralCrest(linearSpectrum,config.SpectralDescriptorInput.FrequencyVector);
featureVector(outputIndex.spectralDecrease,:) = spectralDecrease(linearSpectrum,config.SpectralDescriptorInput.FrequencyVector);
featureVector(outputIndex.spectralEntropy,:) = spectralEntropy(linearSpectrum,config.SpectralDescriptorInput.FrequencyVector);
featureVector(outputIndex.spectralFlatness,:) = spectralFlatness(linearSpectrum,config.SpectralDescriptorInput.FrequencyVector);
featureVector(outputIndex.spectralRolloffPoint,:) = spectralRolloffPoint(linearSpectrum,config.SpectralDescriptorInput.FrequencyVector,Threshold=9.500000e-01);
featureVector(outputIndex.spectralSlope,:) = spectralSlope(linearSpectrum,config.SpectralDescriptorInput.FrequencyVector);
end
```

function props = getProps(dataType)

props.Window = cast([0.080000000000000015543122344752192;0.080034637153993559710585259381332;0.080138543399746076101308744910057;0.080311703089359198770580405835062;0.080554090145620704799256373007665;0.080865668065931373131860482317279;0.081246389927802531438771893590456;0.081696198395922126067603130650241;0.08221502573078942610607100505149;0.082802793798916252132613635694725;0.083459414084593452898275245388504;0.084184787703221186649926721656811;0.084978805416200731137621460220544;0.085841347647385157770827390777413;0.086772284501087038055544553571963;0.087771475781640517777049126380007;0.088838771014514039681131407633075;0.08997400946897127216317358033848;0.091177020182276857784842150067561;0.092447621985442318681691631354624;0.093785623530509787393327769677853;0.095190823319368067512158404497313;0.096663009734097693481658097880427;0.098201961068839771495930790479179;0.099807445563183938563867059201584;0.10147922143707083231234378217778;0.10321703692720313183528446643322;0.10502063032496022909612065632246;0.10688973001581042465701898436237;0.10882405452021554070896058874496;0.11082331253602012433034929017595;0.11288720298232085559320125867089;0.11501541504480811139998763792391;0.11720762822257346780219222637243;0.11946351237637592435092415144027;0.12178272777835974505222793595749;0.12416492516321608885476734940312;0.12660974578078126873137421171123;0.1291168214500629796148700734193;0.13168577461468722322734947738354;0.13431621839975671495182041326188;0.13700775667011300118502958866884;0.13975998408999273925346074065601;0.14257248618407014628672868639114;0.14544483939987484788503024901729;0.14837661117157802115329445769021;0.15136735998513473067106360758771;0.15441663544477451930347911002173;0.15752397834082920713427711234544;0.16068892071888840611393334256718;0.16391098595027153717040846458985;0.16718968880380641328642354892509;0.17052453551890334182417063857429;0.17391502387991358835606092725357;0.177360643291761099771264298397;0.18086087485683655096124766714638
;0.18441519145314100303068016728503;0.18802305781366890347072740041767;0.19168393060701710561843924551795;0.19539725851920869414968251476239;0.19916248233671879352968403509294;0.202979035030690146967913278786;0.20684634184232519871216027240735;0.2107638203694434664292600700719;0.21473088065418816094975795749633;0.21874692527187250545850361049816;0.22281134942094921180810729310906;0.22692354101409156763580199367425;0.23108288077037153485093767812941;0.23528874230852098170529984599852;0.23954049224126267025880565597618;0.24383749027069595571859395022329;0.24817908928472309781909643788822;0.25256463545450225094413099213853;0.25699346833291170089097477102769;0.26146492095401024924328226006764;0.26597831993348064472115765966009;0.27053298557003813140653392110835;0.27512823194779117974562154813611;0.27976336703953791351651148033852;0.284437692810982967195343462663;0.28915050532585867548718283615017;0.29390109485193527394386592277442;0.29868874596790440101301555841928;0.30351273767111808243868154022493;0.30837234348616993084846171768731;0.31326683157429935366877771230065;0.31819546484360350380171666984097;0.32315750106004104136303567429422;0.32815219295920960984602743337746;0.33317878835888098398854140214098;0.33823653027227729150894219856127;0.343324657022070212075703921073;0.34844240235508683323217837823904;0.35358899555770473277505061560078;0.35876366157191791339542419336794;0.36396562111205871259400623785041;0.36919409078215470465522685117321;0.37444828319390544013600674588815;0.3797274070852601490777544768207;0.38503066743957881090665296142106;0.39035726560535904949844621114607;0.39570639941650986859400518369512;0.40107726331315507461994229743141;0.4064690484629473465894022865541;0.41188094288287563360384524457913;0.41731213156154678411979830343626;0.42276179658192331034527455813077;0.42822911724449858050789430308214;0.4337132701908912313371047275723;0.4392134295278399269690794426424;0.44472876695157947946057674926124;0.45025845187258101143257249532326;0.4558016515406
3512211735087475972;0.46135753117026140346368379141495;0.46692525406642382268529445354943;0.47250398175053365257269888388691;0.47809287408672196395542641766951;0.48369108940836053056600007948873;0.48929778464481377131534145519254;0.49491211544840207903206419359776;0.50053323632155766187423751034657;0.50616030074415296891032767234719;0.51179246130098410283437715406762;0.51742886980938773699989496890339;0.52306867744697493893824002952897;0.52871103487946036203481980919605;0.53435509238856881975010537644266;0.54000000000000003552713678800501;0.5456449076114311402818657370517;0.55128896512053959799715130429831;0.55693132255302513211603354648105;0.56257113019061233405437860710663;0.56820753869901596821989642194239;0.57383969925584699112164344114717;0.57946676367844229815773360314779;0.5850878845515978809999069198966;0.59070221535518618871662965830183;0.59630891059163948497712226526346;0.60190712591327799607654469582485;0.6074960182494664184815746921231;0.61307474593357624836897912246059;0.61864246882973861207943855333724;0.62419834845936483791462023873464;0.62974154812741900411054984942893;0.63527123304842048057139436423313;0.64078657047216003306289167085197;0.64628672980910872869486638592207;0.65177088275550143503522804167005;0.65723820341807670519784778662142;0.66268786843845328693447527257376;0.66811905711712438193927710017306;0.67353095153705266895372005819809;0.67892273668684499643433127857861;0.68429360058349009143796592979925;0.68964273439464096604467613360612;0.69496933256042114912531815207331;0.70027259291473986646536786793149;0.70555171680609463091826683012187;0.71080590921784525537674426232115;0.71603437888794130294911610690178;0.72123633842808210214769815138425;0.72641100444229533827922296040924;0.73155759764491301577749027273967;0.73667534297792969244511596116354;0.74176346972772266852302891493309;0.74682121164111914257688340512686;0.75184780704079046120824614263256;0.75684249893995891866893543920014;0.76180453515639645623025444365339;0.766733168425700717385
49586370937;0.77162765651383002918350939580705;0.77648726232888198861559203578508;0.78131125403209567004125801759074;0.78609890514806468608810519071994;0.79084949467414134005593950860202;0.79556230718901699283662765083136;0.80023663296046221304891332692932;0.80487176805220883579750079661608;0.80946701442996193964773965490167;0.81402168006651942633311591634993;0.81853507904598976629984008468455;0.82300653166708825914099634246668;0.82743536454549770908784012135584;0.8318209107152769732351771381218;0.8361625097293040598245283945289;0.84045950775873723426201422626036;0.84471125769147914486012496126932;0.84891711922962853620333589788061;0.85307645898590844790732035107794;0.85718865057905091475731751415879;0.86125307472812751008461873425404;0.86526911934581185459336438725586;0.86923617963055654911386227468029;0.87315365815767476131981084108702;0.87702096496930992408636029722402;0.8808375176632811109911358471436;0.8846027414807913213934398299898;0.8883160693929830209469855617499;0.89197694218633116758354617559235;0.89558480854685906802359340872499;0.89913912514316352009302590886364;0.90263935670823891577185804635519;0.90608497612008642718706141749863;0.90947546448109672923010293743573;0.91281031119619360225669879582711;0.91608901404972853388386511142016;0.91931107928111166494034023344284;0.92247602165917097494229892618023;0.92558336455522560726194569724612;0.92863264001486534038320996842231;0.93162338882842199438982788706198;0.93455516060012522316924332699273;0.93742751381592992476754488961888;0.94024001591000727628966160409618;0.94299224332988695884694152482552;0.94568378160024324508015070023248;0.94831422538531273680462163611082;0.95088317854993698041710104007507;0.95339025421921885783405059555662;0.9558350748367839821995062266069;0.95821727222164032600204564005253;0.96053648762362420221450065582758;0.96279237177742660325208134963759;0.96498458495519190414313470682828;0.9671127970176791599499210860813;0.9691766874639800022350755170919;0.97117594547978447483416175600723;0
.97311026998418959088610336038982;0.97497936967503973093585045717191;0.97678296307279688370783787831897;0.97852077856292929425308102509007;0.98019255443681618800155774806626;0.98179803893116024404719155427301;0.98333699026590237757261547812959;0.98480917668063194803096394025488;0.98621437646949028366094580633217;0.98755237801455764135027948213974;0.98882297981772315775828019468463;0.99002599053102868786879753315588;0.99116122898548608688429339963477;0.99222852421835960878837568088784;0.99322771549891308850988025369588;0.99415865235261491328344618523261;0.99502119458379933991665211578947;0.99581521229677893991549808561103;0.99654058591540661815599833062151;0.99719720620108387443281117157312;0.99778497426921064494820257095853;0.99830380160407794498667044535978;0.99875361007219753961550168241956;0.99913433193406864241126186243491;0.99944590985437931074386597174453;0.99968829691064087228369317017496;0.99986145660025393944181359984213;0.99996536284600656685483954788651;1;0.99996536284600656685483954788651;0.99986145660025393944181359984213;0.99968829691064087228369317017496;0.99944590985437931074386597174453;0.99913433193406864241126186243491;0.99875361007219753961550168241956;0.99830380160407794498667044535978;0.99778497426921064494820257095853;0.99719720620108387443281117157312;0.99654058591540661815599833062151;0.99581521229677893991549808561103;0.99502119458379933991665211578947;0.99415865235261491328344618523261;0.99322771549891308850988025369588;0.99222852421835960878837568088784;0.99116122898548608688429339963477;0.99002599053102868786879753315588;0.98882297981772315775828019468463;0.98755237801455764135027948213974;0.98621437646949028366094580633217;0.98480917668063194803096394025488;0.98333699026590237757261547812959;0.98179803893116024404719155427301;0.98019255443681618800155774806626;0.97852077856292929425308102509007;0.97678296307279688370783787831897;0.97497936967503973093585045717191;0.97311026998418959088610336038982;0.97117594547978447483416175600723;0.96
91766874639800022350755170919;0.9671127970176791599499210860813;0.96498458495519190414313470682828;0.96279237177742660325208134963759;0.96053648762362420221450065582758;0.95821727222164032600204564005253;0.9558350748367839821995062266069;0.95339025421921885783405059555662;0.95088317854993698041710104007507;0.94831422538531273680462163611082;0.94568378160024324508015070023248;0.94299224332988695884694152482552;0.94024001591000727628966160409618;0.93742751381592992476754488961888;0.93455516060012522316924332699273;0.93162338882842199438982788706198;0.92863264001486534038320996842231;0.92558336455522560726194569724612;0.92247602165917097494229892618023;0.91931107928111166494034023344284;0.91608901404972853388386511142016;0.91281031119619360225669879582711;0.90947546448109672923010293743573;0.90608497612008642718706141749863;0.90263935670823891577185804635519;0.89913912514316352009302590886364;0.89558480854685906802359340872499;0.89197694218633116758354617559235;0.8883160693929830209469855617499;0.8846027414807913213934398299898;0.8808375176632811109911358471436;0.87702096496930992408636029722402;0.87315365815767476131981084108702;0.86923617963055654911386227468029;0.86526911934581185459336438725586;0.86125307472812751008461873425404;0.85718865057905091475731751415879;0.85307645898590844790732035107794;0.84891711922962853620333589788061;0.84471125769147914486012496126932;0.84045950775873723426201422626036;0.8361625097293040598245283945289;0.8318209107152769732351771381218;0.82743536454549770908784012135584;0.82300653166708825914099634246668;0.81853507904598976629984008468455;0.81402168006651942633311591634993;0.80946701442996193964773965490167;0.80487176805220883579750079661608;0.80023663296046221304891332692932;0.79556230718901699283662765083136;0.79084949467414134005593950860202;0.78609890514806468608810519071994;0.78131125403209567004125801759074;0.77648726232888198861559203578508;0.77162765651383002918350939580705;0.76673316842570071738549586370937;0.761804535156396
45623025444365339;0.75684249893995891866893543920014;0.75184780704079046120824614263256;0.74682121164111914257688340512686;0.74176346972772266852302891493309;0.73667534297792969244511596116354;0.73155759764491301577749027273967;0.72641100444229533827922296040924;0.72123633842808210214769815138425;0.71603437888794130294911610690178;0.71080590921784525537674426232115;0.70555171680609463091826683012187;0.70027259291473986646536786793149;0.69496933256042114912531815207331;0.68964273439464096604467613360612;0.68429360058349009143796592979925;0.67892273668684499643433127857861;0.67353095153705266895372005819809;0.66811905711712438193927710017306;0.66268786843845328693447527257376;0.65723820341807670519784778662142;0.65177088275550143503522804167005;0.64628672980910872869486638592207;0.64078657047216003306289167085197;0.63527123304842048057139436423313;0.62974154812741900411054984942893;0.62419834845936483791462023873464;0.61864246882973861207943855333724;0.61307474593357624836897912246059;0.6074960182494664184815746921231;0.60190712591327799607654469582485;0.59630891059163948497712226526346;0.59070221535518618871662965830183;0.5850878845515978809999069198966;0.57946676367844229815773360314779;0.57383969925584699112164344114717;0.56820753869901596821989642194239;0.56257113019061233405437860710663;0.55693132255302513211603354648105;0.55128896512053959799715130429831;0.5456449076114311402818657370517;0.54000000000000003552713678800501;0.53435509238856881975010537644266;0.52871103487946036203481980919605;0.52306867744697493893824002952897;0.51742886980938773699989496890339;0.51179246130098410283437715406762;0.50616030074415296891032767234719;0.50053323632155766187423751034657;0.49491211544840207903206419359776;0.48929778464481377131534145519254;0.48369108940836053056600007948873;0.47809287408672196395542641766951;0.47250398175053365257269888388691;0.46692525406642382268529445354943;0.46135753117026140346368379141495;0.45580165154063512211735087475972;0.45025845187258101143257
249532326;0.44472876695157947946057674926124;0.4392134295278399269690794426424;0.4337132701908912313371047275723;0.42822911724449858050789430308214;0.42276179658192331034527455813077;0.41731213156154678411979830343626;0.41188094288287563360384524457913;0.4064690484629473465894022865541;0.40107726331315507461994229743141;0.39570639941650986859400518369512;0.39035726560535904949844621114607;0.38503066743957881090665296142106;0.3797274070852601490777544768207;0.37444828319390544013600674588815;0.36919409078215470465522685117321;0.36396562111205871259400623785041;0.35876366157191791339542419336794;0.35358899555770473277505061560078;0.34844240235508683323217837823904;0.343324657022070212075703921073;0.33823653027227729150894219856127;0.33317878835888098398854140214098;0.32815219295920960984602743337746;0.32315750106004104136303567429422;0.31819546484360350380171666984097;0.31326683157429935366877771230065;0.30837234348616993084846171768731;0.30351273767111808243868154022493;0.29868874596790440101301555841928;0.29390109485193527394386592277442;0.28915050532585867548718283615017;0.284437692810982967195343462663;0.27976336703953791351651148033852;0.27512823194779117974562154813611;0.27053298557003813140653392110835;0.26597831993348064472115765966009;0.26146492095401024924328226006764;0.25699346833291170089097477102769;0.25256463545450225094413099213853;0.24817908928472309781909643788822;0.24383749027069595571859395022329;0.23954049224126267025880565597618;0.23528874230852098170529984599852;0.23108288077037153485093767812941;0.22692354101409156763580199367425;0.22281134942094921180810729310906;0.21874692527187250545850361049816;0.21473088065418816094975795749633;0.2107638203694434664292600700719;0.20684634184232519871216027240735;0.202979035030690146967913278786;0.19916248233671879352968403509294;0.19539725851920869414968251476239;0.19168393060701710561843924551795;0.18802305781366890347072740041767;0.18441519145314100303068016728503;0.18086087485683655096124766714638;0.1773
60643291761099771264298397;0.17391502387991358835606092725357;0.17052453551890334182417063857429;0.16718968880380641328642354892509;0.16391098595027153717040846458985;0.16068892071888840611393334256718;0.15752397834082920713427711234544;0.15441663544477451930347911002173;0.15136735998513473067106360758771;0.14837661117157802115329445769021;0.14544483939987484788503024901729;0.14257248618407014628672868639114;0.13975998408999273925346074065601;0.13700775667011300118502958866884;0.13431621839975671495182041326188;0.13168577461468722322734947738354;0.1291168214500629796148700734193;0.12660974578078126873137421171123;0.12416492516321608885476734940312;0.12178272777835974505222793595749;0.11946351237637592435092415144027;0.11720762822257346780219222637243;0.11501541504480811139998763792391;0.11288720298232085559320125867089;0.11082331253602012433034929017595;0.10882405452021554070896058874496;0.10688973001581042465701898436237;0.10502063032496022909612065632246;0.10321703692720313183528446643322;0.10147922143707083231234378217778;0.099807445563183938563867059201584;0.098201961068839771495930790479179;0.096663009734097693481658097880427;0.095190823319368067512158404497313;0.093785623530509787393327769677853;0.092447621985442318681691631354624;0.091177020182276857784842150067561;0.08997400946897127216317358033848;0.088838771014514039681131407633075;0.087771475781640517777049126380007;0.086772284501087038055544553571963;0.085841347647385157770827390777413;0.084978805416200731137621460220544;0.084184787703221186649926721656811;0.083459414084593452898275245388504;0.082802793798916252132613635694725;0.08221502573078942610607100505149;0.081696198395922126067603130650241;0.081246389927802531438771893590456;0.080865668065931373131860482317279;0.080554090145620704799256373007665;0.080311703089359198770580405835062;0.080138543399746076101308744910057;0.080034637153993559710585259381332],dataType);props.SampleRate = cast(16000,dataType);

props.FFTLength = uint16(512);

props.NumFeatures = uint8(10);

end

```matlab
function [config, outputIndex] = getConfig(dataType, props)
powerNormalizationFactor = 1/(sum(props.Window)^2);

config.OneSidedSpectrumBins = uint16(1:257);

linearSpectrumFrequencyBins = 1:257;
config.linearSpectrum.FrequencyBins = uint16(linearSpectrumFrequencyBins);
config.linearSpectrum.NormalizationFactor = cast(2*powerNormalizationFactor,dataType);

FFTLength = cast(props.FFTLength,like=props.SampleRate);
w = (props.SampleRate/FFTLength)*(linearSpectrumFrequencyBins-1);
config.SpectralDescriptorInput.FrequencyVector = cast(w(:),dataType);

outputIndex.spectralCentroid = uint8(1);
outputIndex.spectralCrest = uint8(2);
outputIndex.spectralDecrease = uint8(3);
outputIndex.spectralEntropy = uint8(4);
outputIndex.spectralFlatness = uint8(5);
outputIndex.spectralKurtosis = uint8(6);
outputIndex.spectralRolloffPoint = uint8(7);
outputIndex.spectralSkewness = uint8(8);
outputIndex.spectralSlope = uint8(9);
outputIndex.spectralSpread = uint8(10);
end
```

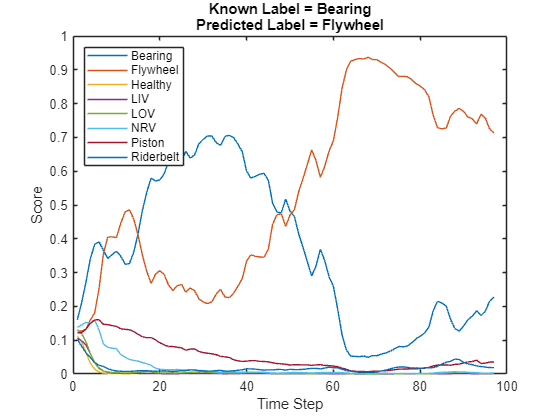

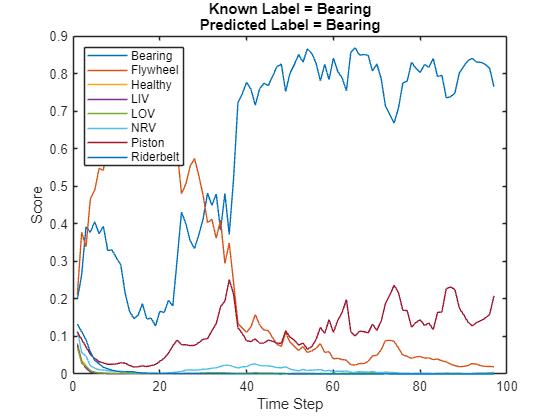

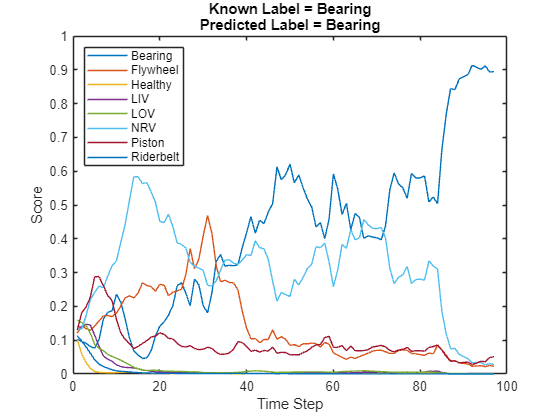

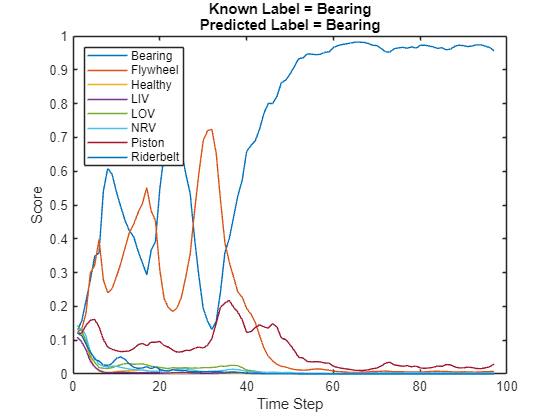

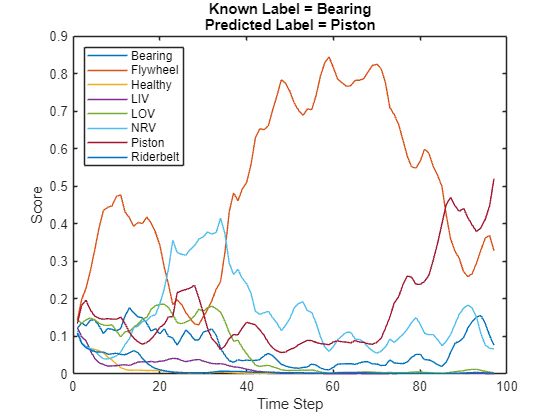

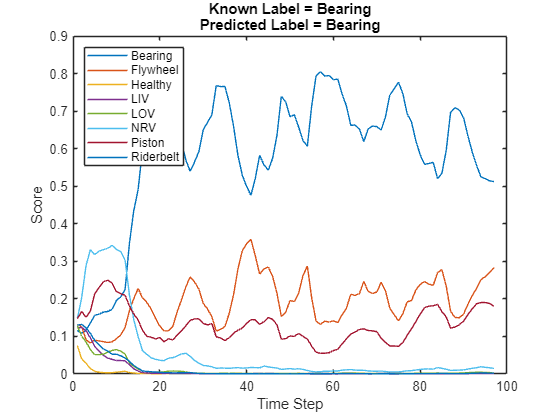

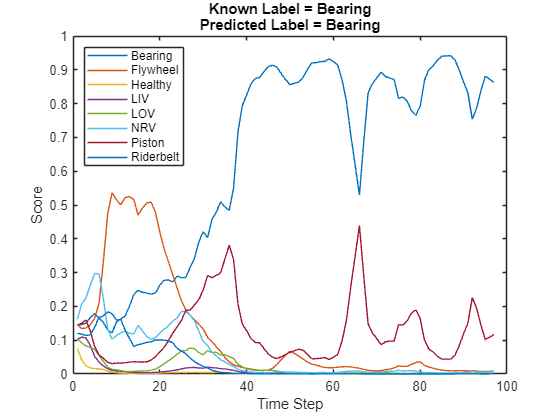

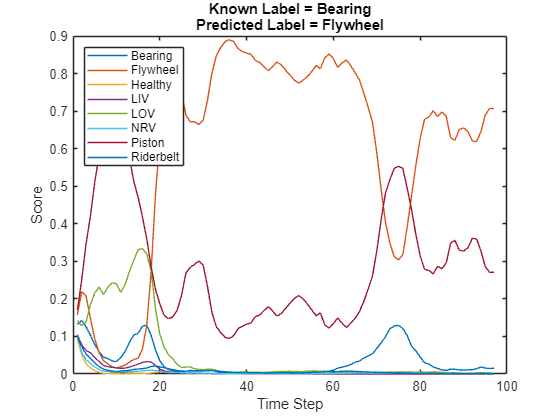

Test Streaming Loop

Next, you test the streaming classifier in MATLAB. Stream audio one frame at a time to represent a system as it would be deployed in a real-time embedded system. This enables you to measure and visualize the timing and accuracy of the streaming implementation.

Stream several audio files through the classifier and plot the classification scores for each frame of data. At the end of each file, take the scores of the final frame as the classifier's decision for that file.

reset(adsValidation)

N = 10;
labels = categories(labelsValidation);
numLabels = numel(labels);

% Create a dsp.AsyncBuffer to read audio in a streaming fashion
audioSource = dsp.AsyncBuffer;

% Create a dsp.AsyncBuffer to accumulate scores
scoreBuffer = dsp.AsyncBuffer;

% Create a dsp.AsyncBuffer to record execution time.
timingBuffer = dsp.AsyncBuffer;

% Pre-allocate array to store results
streamingResults = categorical(zeros(N,1));

% Loop over files
for fileIdx = 1:N
    % Read one audio file and put it in the source buffer
    [data,dataInfo] = read(adsValidation);
    write(audioSource,data);

    % Inner loop over frames
    rs = true;
    while audioSource.NumUnreadSamples >= windowLength
        % Get a frame of audio data
        x = read(audioSource,windowLength);

        % Apply streaming classifier function
        tic
        score = recognizeAirCompressorFault(x,rs);
        write(timingBuffer,toc);

        % Store score for analysis
        write(scoreBuffer,extractdata(score)');

        rs = false;
    end
    reset(audioSource)

    % Store class result for that file
    scores = read(scoreBuffer);
    result = scores2label(scores(end,:),uniqueLabels,2);
    streamingResults(fileIdx) = result;

    % Plot scores to compare over time
    figure
    plot(scores) %#ok<*NASGU>
    legend(uniqueLabels,Location="northwest")
    xlabel("Time Step")
    ylabel("Score")
    title(["Known Label = " + string(labelsValidation(fileIdx)),"Predicted Label = " + string(streamingResults(fileIdx))])
end
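The control flow of this streaming loop can be sketched without the MATLAB toolboxes. In the hypothetical Python version below, `classify_frame` stands in for `recognizeAirCompressorFault`, a list slice stands in for `dsp.AsyncBuffer`, and `reset` is `True` only for the first frame of each file. As in the loop above, samples left over after the last complete frame are discarded, and the final frame's scores decide the label for the file. `RunningMeanClassifier` and the class names are toy assumptions used only to exercise the loop.

```python
from typing import Callable, List, Sequence, Tuple


def stream_file(
    samples: Sequence[float],
    window_length: int,
    classify_frame: Callable[[Sequence[float], bool], List[float]],
    class_names: Sequence[str],
) -> Tuple[List[List[float]], str]:
    """Feed one file to a stateful classifier frame by frame (hypothetical helper)."""
    scores: List[List[float]] = []
    reset = True  # reset classifier state on the first frame of each file
    position = 0
    # Process complete frames only; a trailing partial frame is dropped.
    while len(samples) - position >= window_length:
        frame = samples[position:position + window_length]
        position += window_length
        scores.append(classify_frame(frame, reset))
        reset = False
    if not scores:
        raise ValueError("file is shorter than one frame")
    # The decision for the file is the highest-scoring class of the last frame.
    last = scores[-1]
    best = max(range(len(last)), key=lambda i: last[i])
    return scores, class_names[best]


class RunningMeanClassifier:
    """Toy stateful stand-in: scores [1 - m, m], where m is the running mean of frame means."""

    def __init__(self) -> None:
        self.total = 0.0
        self.count = 0

    def __call__(self, frame: Sequence[float], reset: bool) -> List[float]:
        if reset:
            self.total, self.count = 0.0, 0
        self.total += sum(frame) / len(frame)
        self.count += 1
        m = self.total / self.count
        return [1.0 - m, m]


names = ["Healthy", "Faulty"]  # hypothetical class names
clf = RunningMeanClassifier()
# Three complete 512-sample frames plus 100 leftover samples that are never classified.
audio = [0.0] * 512 + [1.0] * 1124
scores, label = stream_file(audio, 512, clf, names)
print(len(scores), label)
```

Because the classifier keeps state across frames, the label for a file depends on the whole history since the last reset, which is why the loop resets at each new file.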

Compare the results of the streaming classifier with those of the non-streaming classifier.

testError = mean(validationResults(1:N) ~= streamingResults);
disp("Error between streaming classifier and non-streaming: " + testError*100 + " (%)")

Error between streaming classifier and non-streaming: 0 (%)
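A 0% result means the streaming implementation reproduces the non-streaming decisions. By itself it says nothing about accuracy, because both implementations could make the same mistake. The short Python sketch below, using made-up labels for four files, separates the two measures. `percent_mismatch` is a hypothetical helper that mirrors `mean(a ~= b)*100`.

```python
from typing import Sequence


def percent_mismatch(a: Sequence[str], b: Sequence[str]) -> float:
    """Percentage of positions at which two label sequences differ."""
    if len(a) != len(b):
        raise ValueError("label sequences must have the same length")
    return 100.0 * sum(x != y for x, y in zip(a, b)) / len(a)


# Hypothetical labels for four files.
known = ["Bearing", "Healthy", "LIV", "NRV"]
non_streaming = ["Bearing", "Healthy", "LOV", "NRV"]
streaming = ["Bearing", "Healthy", "LOV", "NRV"]

print(percent_mismatch(non_streaming, streaming))  # agreement check, as in the example
print(percent_mismatch(known, streaming))          # error against the known labels
```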

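Analyze the execution time. Frames processed right after the state is reset often exceed the 32 ms budget. In a real, deployed system, however, that initialization time is incurred only once, so the plot omits the first frame. The main loop takes around 10 ms per frame, well below the 32 ms budget for real-time performance. The budget is the duration of one frame of audio, windowLength/afe.SampleRate, because each frame must be processed before the next one arrives.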

executionTime = read(timingBuffer)*1000;
budget = (windowLength/afe.SampleRate)*1000;

% Open a new figure so the last score plot is not overwritten.
figure
plot(executionTime(2:end),"o")
title("Execution Time Per Frame")
xlabel("Frame Number")
ylabel("Time (ms)")
yline(budget,"-","Budget",LineWidth=2)
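The budget arithmetic is simple enough to check by hand. A 32 ms budget at the 16 kHz sample rate set in `getProps` corresponds to 512-sample frames. The Python sketch below computes the budget and counts overruns, skipping the first frame as the plot does. The helper names and the timing values are hypothetical and only illustrate the calculation.

```python
from typing import Sequence


def frame_budget_ms(window_length: int, sample_rate: float) -> float:
    """Real-time budget: the time it takes one frame of audio to arrive, in ms."""
    return window_length / sample_rate * 1000.0


def count_overruns(times_ms: Sequence[float], budget_ms: float, skip_first: bool = True) -> int:
    """Count frames whose processing time exceeds the budget.

    The first frame can be skipped because it includes one-time initialization.
    """
    times = times_ms[1:] if skip_first else times_ms
    return sum(t > budget_ms for t in times)


budget = frame_budget_ms(512, 16000)
times = [85.0, 9.8, 10.4, 11.1, 33.0, 9.9]  # hypothetical per-frame times in ms
print(budget, count_overruns(times, budget))
```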

Supporting Functions

function [augData,augLabels] = mixup(data,labels,numMixesPerInstance)
augData = cell(numel(data)*numMixesPerInstance,1);
augLabels = repelem(labels,numMixesPerInstance);

kk = 1;
for ii = 1:numel(data)
    for jj = 1:numMixesPerInstance
        % Draw the mixing weight from N(0.5,0.1^2), clipped to [0,1].
        lambda = max(min((randn./10)+0.5,1),0);

        % Find all available data with different labels.
        availableData = find(labels~=labels(ii));

        % Randomly choose one of the available data with a different label.
        numAvailableData = numel(availableData);
        idx = randi([1,numAvailableData]);

        % Mix.
        augData{kk} = lambda*data{ii} + (1-lambda)*data{availableData(idx)};

        % Assign the partner's label with probability 1-lambda (its weight
        % in the mix); otherwise keep the original label.
        if lambda < rand
            augLabels(kk) = labels(availableData(idx));
        else
            augLabels(kk) = labels(ii);
        end
        kk = kk + 1;
    end
end
end
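For readers outside MATLAB, here is a hedged Python transcription of the `mixup` helper using only the standard library. It keeps the same choices. λ is drawn from a normal distribution with mean 0.5 and standard deviation 0.1, clipped to [0, 1]. The partner is a random instance with a different label, and the partner's label is assigned with probability 1 - λ, its weight in the mix. Representing each instance as a flat list of floats, and passing an explicit `random.Random` for reproducibility, are assumptions of this sketch. Like the MATLAB version, it needs at least two distinct labels.

```python
import random
from typing import List, Sequence, Tuple


def mixup(
    data: Sequence[Sequence[float]],
    labels: Sequence[str],
    num_mixes_per_instance: int,
    rng: random.Random,
) -> Tuple[List[List[float]], List[str]]:
    aug_data: List[List[float]] = []
    aug_labels: List[str] = []
    for x, y in zip(data, labels):
        # Candidates for mixing: every instance whose label differs from y.
        others = [j for j, other in enumerate(labels) if other != y]
        for _ in range(num_mixes_per_instance):
            # lambda ~ N(0.5, 0.1^2), clipped to [0, 1].
            lam = min(max(rng.gauss(0.5, 0.1), 0.0), 1.0)
            j = rng.choice(others)
            aug_data.append([lam * a + (1.0 - lam) * b for a, b in zip(x, data[j])])
            # Take the partner's label with probability 1 - lam.
            aug_labels.append(labels[j] if lam < rng.random() else y)
    return aug_data, aug_labels


rng = random.Random(0)
data = [[0.0, 0.0], [1.0, 1.0]]
labels = ["Healthy", "Bearing"]
mixed, mixed_labels = mixup(data, labels, 3, rng)
print(len(mixed), mixed_labels)
```

Sampling the label in proportion to λ, rather than mixing one-hot targets, keeps every augmented example tied to a single class, which is what the MATLAB helper does.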

References

[1] Verma, Nishchal K., et al. "Intelligent Condition Based Monitoring Using Acoustic Signals for Air Compressors." IEEE Transactions on Reliability, vol. 65, no. 1, Mar. 2016, pp. 291–309. doi:10.1109/TR.2015.2459684.

[2] Huszar, Ferenc. "Mixup: Data-Dependent Data Augmentation." InFERENCe. November 03, 2017. Accessed January 15, 2019. https://www.inference.vc/mixup-data-dependent-data-augmentation/.