Prepare Network for Transfer Learning Using Deep Network Designer

This example shows how to interactively prepare a network for transfer learning using the Deep Network Designer app.

Transfer learning is the process of taking a pretrained deep learning network and fine-tuning it to learn a new task. It is usually faster and easier than training a network from scratch, because the learned features can be transferred to the new task using a smaller amount of data.

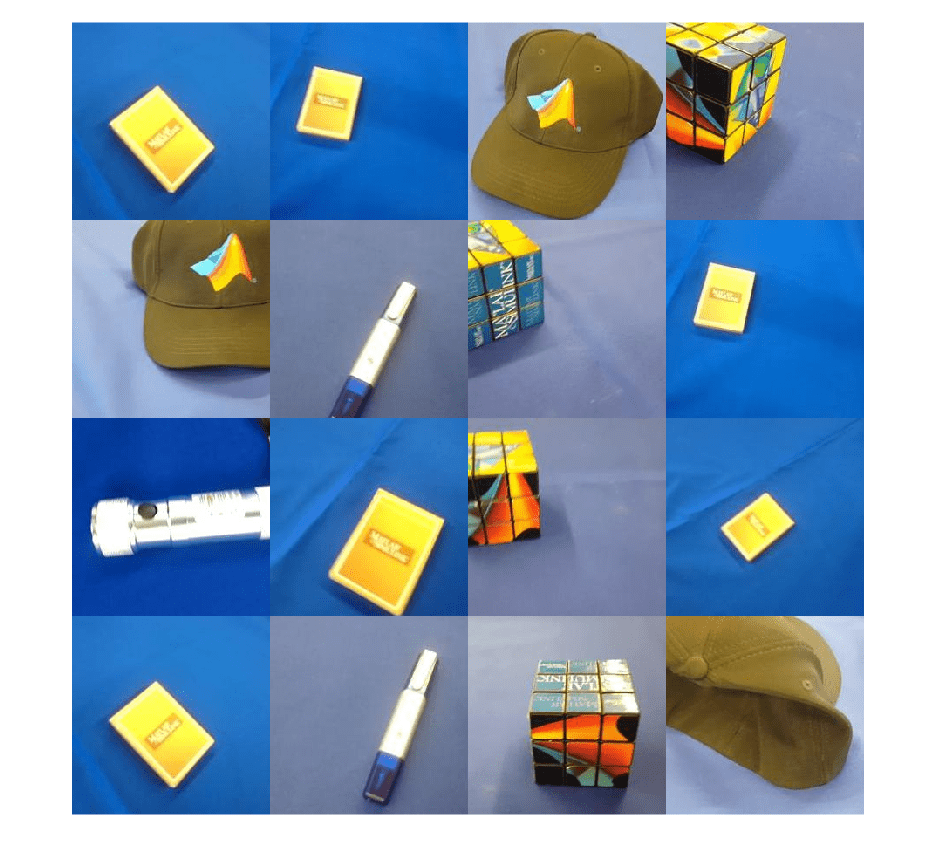

Extract Data

Extract the MathWorks Merch data set. This is a small data set containing 75 images of MathWorks merchandise, belonging to five different classes (cap, cube, playing cards, screwdriver, and torch). The data is arranged so that the images are in subfolders that correspond to these five classes.

folderName = "MerchData";
unzip("MerchData.zip",folderName);

Create an image datastore. An image datastore lets you store large collections of image data, including data that does not fit in memory, and efficiently read batches of images while training a neural network. Specify the folder with the extracted images, and indicate that the subfolder names correspond to the image labels.

imds = imageDatastore(folderName, ...
    IncludeSubfolders=true, ...
    LabelSource="foldernames");

Display some sample images.

numImages = numel(imds.Labels);
idx = randperm(numImages,16);
I = imtile(imds,Frames=idx);
figure
imshow(I)

Extract the class names and the number of classes.

classNames = categories(imds.Labels);
numClasses = numel(classNames)

numClasses = 5

Split the data into training, validation, and test sets. Use 70% of the images for training, 15% for validation, and 15% for testing. The splitEachLabel function splits the image datastore into three new datastores.

[imdsTrain,imdsValidation,imdsTest] = splitEachLabel(imds,0.7,0.15,0.15,"randomized");

Select a Pretrained Network

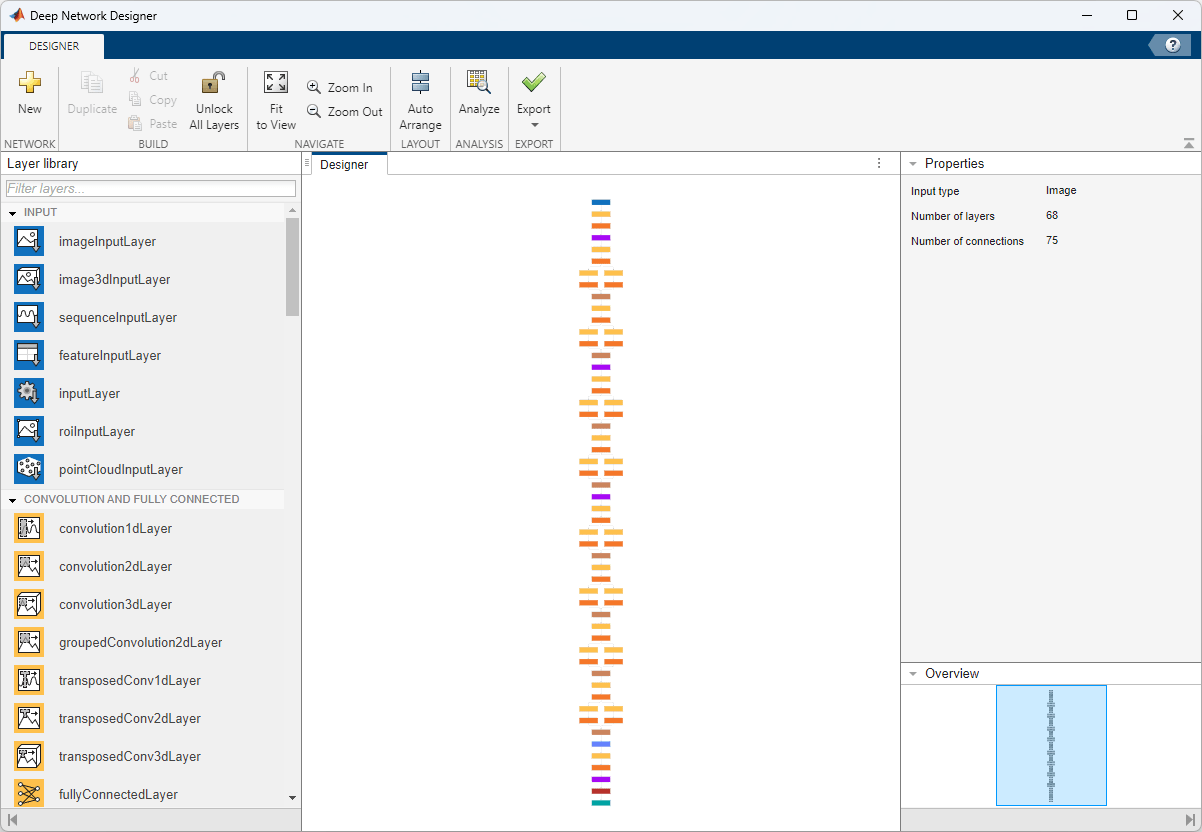

To open Deep Network Designer, on the Apps tab, under Machine Learning and Deep Learning, click the app icon. Alternatively, you can open the app from the command line:

deepNetworkDesigner

Deep Network Designer provides a selection of pretrained image classification networks that have learned rich feature representations suitable for a wide range of images. Transfer learning works best if your images are similar to the images originally used to train the network. If your training images are natural images like those in the ImageNet database, then any of the pretrained networks is suitable. For a list of available networks and how to compare them, see Pretrained Deep Neural Networks.

If your data is very different from the ImageNet data, for example, if you have tiny images, spectrograms, or nonimage data, training a new network might be better. For an example showing how to train a network from scratch, see Get Started with Time Series Forecasting.

SqueezeNet does not require an additional support package. For other pretrained networks, if you do not have the required support package installed, then the app provides the Install option.

Select SqueezeNet from the list of pretrained networks and click Open.

Explore Network

Deep Network Designer displays a zoomed-out view of the whole network in the Designer pane.

Explore the network plot. To zoom in with the mouse, use Ctrl + scroll wheel. To pan, use the arrow keys, or hold down the scroll wheel and drag the mouse. Select a layer to view its properties. Deselect all layers to view the network summary in the Properties pane.

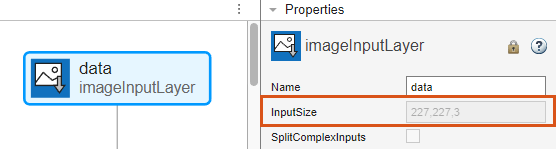

Select the image input layer, 'input'. You can see that the input size for this network is 227-by-227-by-3 pixels.

Save the input size in the variable inputSize.

inputSize = [227 227 3];
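If you prefer to read the input size from the network rather than typing it in, a sketch along the following lines may work. It assumes R2024a or later, where the imagePretrainedNetwork function is available, and that the first layer of the loaded network is the image input layer. The variable name pretrainedNet is hypothetical and is not used elsewhere in this example.

```matlab
% Load pretrained SqueezeNet at the command line (assumes R2024a or later).
pretrainedNet = imagePretrainedNetwork("squeezenet");

% Assumption: the first layer of the network is the image input layer,
% so its InputSize property holds the expected image dimensions.
inputSize = pretrainedNet.Layers(1).InputSize;
```

For SqueezeNet this should give the same 227-by-227-by-3 size that the app shows for the 'input' layer.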

Prepare Network for Training

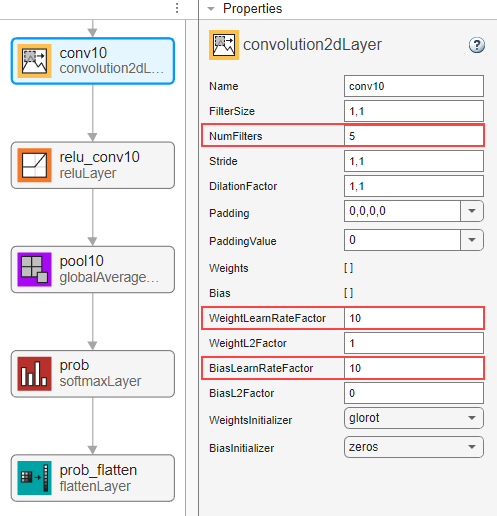

To use a pretrained network for transfer learning, you must change the number of classes to match your new data set. First, find the last learnable layer in the network. For SqueezeNet, the last learnable layer is the final convolutional layer, 'conv10'. Select the 'conv10' layer. At the bottom of the Properties pane, click Unlock Layer. In the warning dialog that appears, click Unlock Anyway. This unlocks the layer properties so that you can adapt them to your new task.

Before R2023b: To adapt the network to the new data, you must replace the layers instead of unlocking them. In the new 2-D convolutional layer, set FilterSize to [1 1].

For this layer, the NumFilters property defines the number of classes for classification problems. Change NumFilters to the number of classes in the new data, which is 5 in this example.

Change the learning rates so that learning is faster in the new layer than in the transferred layers by setting WeightLearnRateFactor and BiasLearnRateFactor to 10.

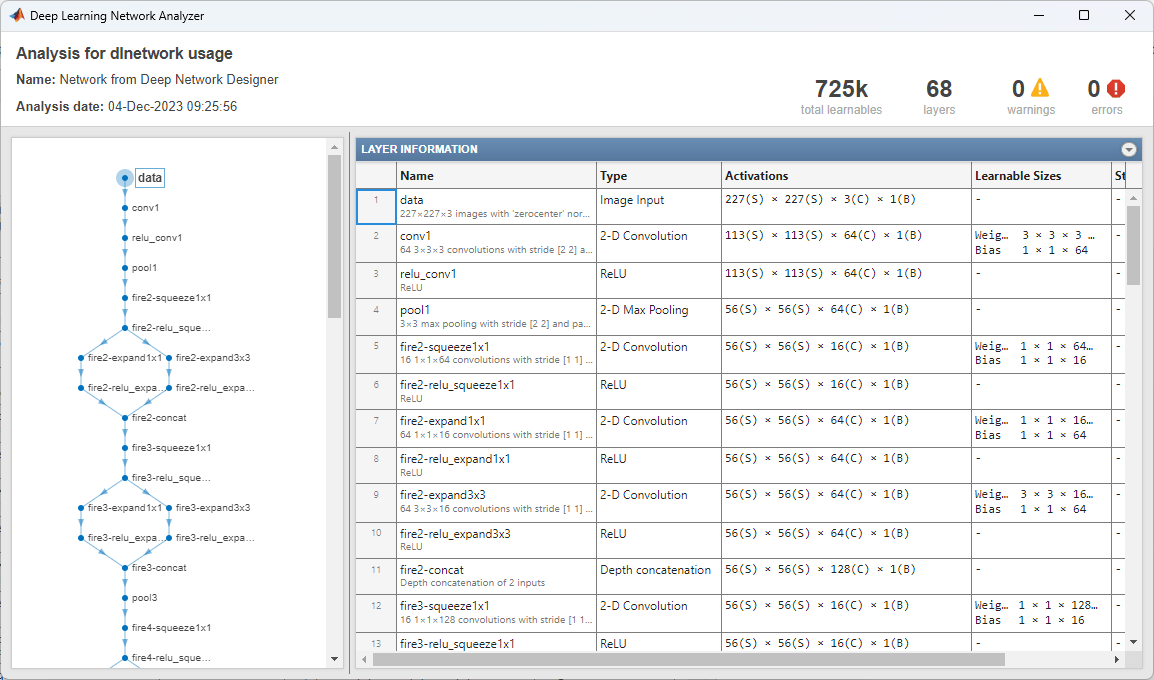
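For readers who want a rough command-line counterpart to the edits made in the app, the following is a minimal sketch, not the workflow this example uses. It assumes R2024a or later, where imagePretrainedNetwork is available and replaceLayer accepts a dlnetwork object. The variable names netManual and newConv are hypothetical. Unlike unlocking in the app, this sketch replaces 'conv10' with a freshly created layer.

```matlab
% Assumes R2024a or later.
numClasses = 5;  % number of classes in the MerchData set

% Load pretrained SqueezeNet as a dlnetwork object.
netManual = imagePretrainedNetwork("squeezenet");

% New 1-by-1 convolution with one filter per class and learning rate
% factors of 10, mirroring the property values set in the app.
newConv = convolution2dLayer([1 1],numClasses, ...
    Name="conv10", ...
    WeightLearnRateFactor=10, ...
    BiasLearnRateFactor=10);

% Swap the original 'conv10' layer for the new one.
netManual = replaceLayer(netManual,"conv10",newConv);
```

The rest of this example continues with the network exported from the app, so netManual is only an illustration of what the property edits amount to.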

Check Network

To check that the network is ready for training, click Analyze. The Deep Learning Network Analyzer reports no errors or warnings, so the network is ready for training. To export the network, click Export. The app saves the network in the variable net_1.

Prepare Data for Training

The images in the datastore can have different sizes. To automatically resize the training images, use an augmented image datastore. Data augmentation also helps prevent the network from overfitting and memorizing the exact details of the training images. Specify these additional augmentation operations to perform on the training images: randomly flip the training images along the vertical axis, and randomly translate them up to 30 pixels horizontally and vertically.

pixelRange = [-30 30];
imageAugmenter = imageDataAugmenter( ...
    RandXReflection=true, ...
    RandXTranslation=pixelRange, ...
    RandYTranslation=pixelRange);
augimdsTrain = augmentedImageDatastore(inputSize(1:2),imdsTrain, ...
    DataAugmentation=imageAugmenter);

To automatically resize the validation and test images without performing further data augmentation, use an augmented image datastore without specifying any additional preprocessing operations.

augimdsValidation = augmentedImageDatastore(inputSize(1:2),imdsValidation);
augimdsTest = augmentedImageDatastore(inputSize(1:2),imdsTest);

Specify Training Options

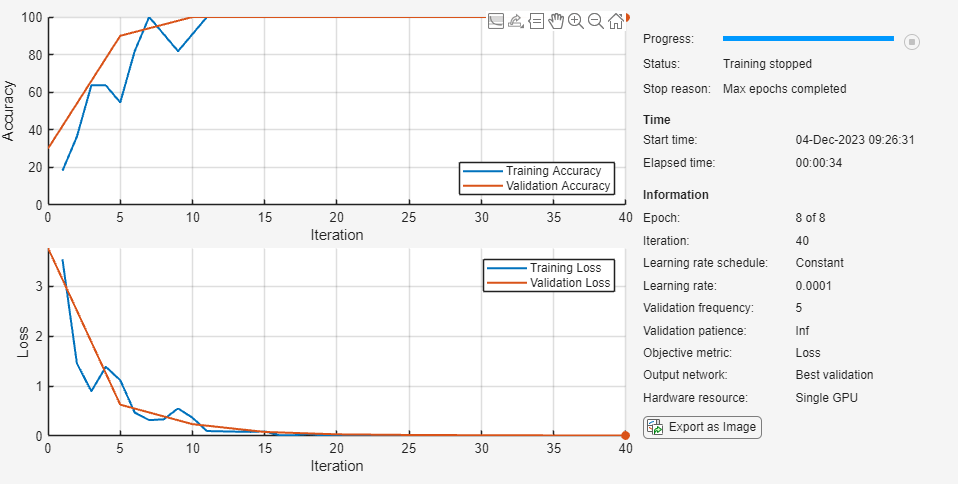

Specify the training options. Choosing among the options requires empirical analysis. To explore different training option configurations by running experiments, you can use the Experiment Manager app.

Train using the Adam optimizer.

Set the initial learning rate to a small value to slow down learning in the transferred layers.

Specify a small number of epochs. An epoch is a full training cycle on the entire training data set. For transfer learning, you do not need to train for a large number of epochs.

Specify the validation data and the validation frequency so that the accuracy on the validation data is calculated once every epoch.

Specify the mini-batch size, that is, how many images to use in each iteration. To ensure that the whole data set is used during each epoch, set the mini-batch size so that it evenly divides the number of training samples.

Display the training progress in a plot and monitor the accuracy metric.

Disable the verbose output.

options = trainingOptions("adam", ...
    InitialLearnRate=0.0001, ...
    MaxEpochs=8, ...
    ValidationData=augimdsValidation, ...
    ValidationFrequency=5, ...
    MiniBatchSize=11, ...
    Plots="training-progress", ...
    Metrics="accuracy", ...
    Verbose=false);
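The mini-batch size and validation frequency above are linked by simple arithmetic, which the following sketch spells out. It assumes the 70% split yields 55 training images (11 per class). That count is an assumption here, so in the example workspace you can replace the literal with numel(imdsTrain.Files). The variable names are hypothetical.

```matlab
% Assumed value: 55 training images after the 70% split. In the example
% workspace, use numTrain = numel(imdsTrain.Files) instead.
numTrain = 55;
miniBatchSize = 11;

% Mini-batch sizes that divide the training set evenly.
evenSizes = find(mod(numTrain,1:numTrain) == 0);

% Iterations per epoch for the chosen mini-batch size. Validating every
% this many iterations means validating once per epoch.
iterationsPerEpoch = numTrain/miniBatchSize;
```

Under that assumption, a mini-batch size of 11 gives 5 iterations per epoch, which is why ValidationFrequency is set to 5.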

Train Neural Network

Train the neural network using the trainnet function. For classification tasks, use cross-entropy loss. By default, the trainnet function uses a GPU if one is available. Using a GPU requires a Parallel Computing Toolbox™ license and a supported GPU device. For information on supported devices, see GPU Computing Requirements (Parallel Computing Toolbox). If no GPU is available, the trainnet function uses the CPU. To specify the execution environment, use the ExecutionEnvironment training option.

net = trainnet(augimdsTrain,net_1,"crossentropy",options);

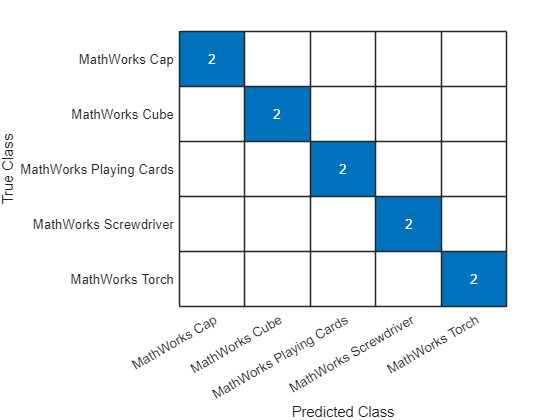
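As a small illustration of the ExecutionEnvironment training option, the following sketch creates options that force training onto the CPU. The variable name cpuOptions is hypothetical. In this example you would more likely add the same name-value argument to the existing trainingOptions call, so that the other settings are kept.

```matlab
% Sketch only: training options that force CPU execution. The other
% settings used in this example are omitted here for brevity.
cpuOptions = trainingOptions("adam", ...
    ExecutionEnvironment="cpu");
```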

Test Neural Network

Classify the test images. To make predictions with multiple observations, use the minibatchpredict function. To convert the prediction scores to labels, use the scores2label function. The minibatchpredict function automatically uses a GPU if one is available.

YTest = minibatchpredict(net,augimdsTest);
YTest = scores2label(YTest,classNames);

Visualize the classification accuracy in a confusion chart.

TTest = imdsTest.Labels;
figure
confusionchart(TTest,YTest);

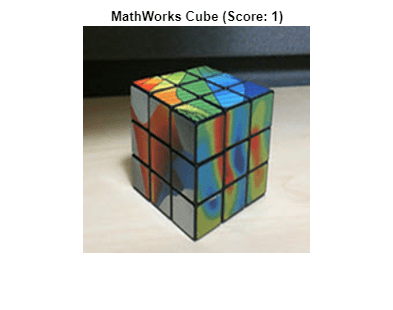
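To complement the confusion chart with a single number, the overall accuracy can be computed as the fraction of predicted labels that match the true labels. The sketch below uses hypothetical labels (TDemo and YDemo) purely for illustration. In the example workspace, substitute TTest and YTest.

```matlab
% Hypothetical labels for illustration only. In the example workspace,
% use TTest and YTest from the preceding code instead.
TDemo = categorical(["cap" "cube" "cube" "torch"]);
YDemo = categorical(["cap" "cube" "torch" "torch"]);

% Fraction of predictions that match the true labels.
accuracy = mean(YDemo == TDemo);
```

With these made-up labels, three of the four predictions match, so accuracy is 0.75.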

Make Predictions with New Data

Classify an image. Read an image from a JPEG file, resize it, and convert it to the single data type.

im = imread("MerchDataTest.jpg");
im = imresize(im,inputSize(1:2));
X = single(im);

Classify the image. To make a prediction with a single observation, use the predict function. To use a GPU, first convert the data to a gpuArray.

if canUseGPU
    X = gpuArray(X);
end
scores = predict(net,X);
[label,score] = scores2label(scores,classNames);

Display the image with the predicted label and the corresponding score.

figure
imshow(im)
title(string(label) + " (Score: " + gather(score) + ")")