Quantization, Projection, and Pruning

Use Deep Learning Toolbox™ together with the Deep Learning Toolbox Model Quantization Library support package to reduce the memory footprint and computational requirements of a deep neural network by:

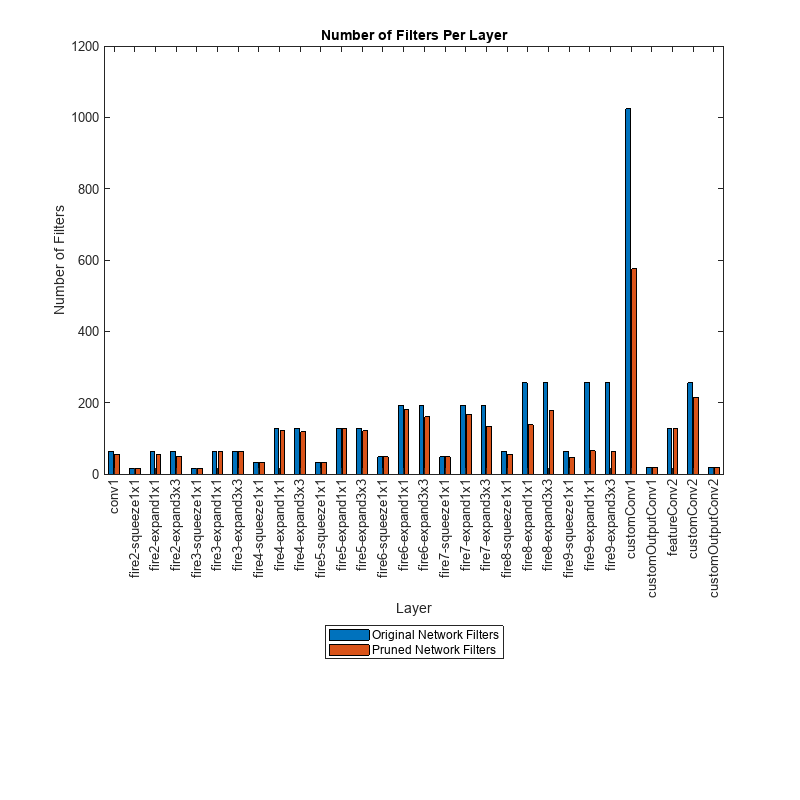

Pruning filters from convolution layers by using first-order Taylor approximation. You can then generate C/C++ or CUDA® code from the pruned network.
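The first-order Taylor criterion can be illustrated with a small Python sketch. It is not the toolbox's implementation, and the helper names `taylor_scores` and `filters_to_prune` are hypothetical. It assumes that, for each filter, you already have the flattened output feature map and the gradient of the loss with respect to it. Each filter's score is the absolute mean of activation times gradient, which is proportional to the first-order estimate of how much the loss changes if that feature map is removed; the lowest-scoring filters are the pruning candidates.

```python
from typing import List


def taylor_scores(activations: List[List[float]],
                  gradients: List[List[float]]) -> List[float]:
    """First-order Taylor importance score for each filter (hypothetical helper).

    activations[f] is the flattened output feature map of filter f and
    gradients[f] is dLoss/d(activation) for the same elements.
    Score of filter f = |mean over elements of activation * gradient|.
    """
    scores = []
    for act, grad in zip(activations, gradients):
        if not act or len(act) != len(grad):
            raise ValueError("activation and gradient must be non-empty and equal in size")
        products = [a * g for a, g in zip(act, grad)]
        scores.append(abs(sum(products) / len(products)))
    return scores


def filters_to_prune(scores: List[float], num_to_prune: int) -> List[int]:
    """Indices of the num_to_prune lowest-scoring filters, in ascending index order."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i])
    return sorted(ranked[:num_to_prune])


# Three filters, each with a 2-element feature map (toy values).
acts = [[1.0, 2.0], [0.1, 0.1], [3.0, -1.0]]
grads = [[0.5, 0.5], [0.2, 0.2], [1.0, 1.0]]
scores = taylor_scores(acts, grads)
print(scores)                       # approximately [0.75, 0.02, 1.0]
print(filters_to_prune(scores, 1))  # [1]
```

In practice the scores are typically accumulated over many mini-batches, and pruning alternates with fine-tuning so that accuracy can recover between pruning steps.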

Projecting layers by performing principal component analysis (PCA) on the layer activations, using a data set representative of the training data, and applying the resulting linear projections to the learnable parameters of the layer. Forward passes of a projected deep neural network are typically faster when you deploy the network to embedded hardware using library-free C/C++ code generation.
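The Python sketch below illustrates PCA-based projection for a single fully connected layer `y = W x + b`. It is an illustration under stated assumptions, not the toolbox's algorithm: it estimates the mean and covariance of the layer inputs from representative samples, finds the leading `k` principal directions by power iteration (assuming the leading eigenvalues are positive and well separated), and replaces `W` with a `k`-dimensional projection followed by a smaller m-by-k weight matrix, folding the mean correction into the bias. All function names are hypothetical.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # row-major


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def covariance(samples: Matrix) -> Tuple[Vector, Matrix]:
    """Mean and population covariance of layer-input activations, one sample per row."""
    n, count = len(samples[0]), len(samples)
    mean = [sum(s[j] for s in samples) / count for j in range(n)]
    cov = [[sum((s[i] - mean[i]) * (s[j] - mean[j]) for s in samples) / count
            for j in range(n)] for i in range(n)]
    return mean, cov


def top_eigenvectors(cov: Matrix, k: int, iters: int = 200, seed: int = 0) -> Matrix:
    """Leading k principal directions (as rows) via power iteration with deflation."""
    rng = random.Random(seed)
    n = len(cov)
    a = [row[:] for row in cov]
    components = []
    for _ in range(k):
        v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        for _ in range(iters):
            w = [dot(row, v) for row in a]
            norm = math.sqrt(dot(w, w))  # assumes a positive leading eigenvalue
            v = [x / norm for x in w]
        eigenvalue = dot(v, [dot(row, v) for row in a])
        components.append(v)
        # Deflate so the next pass converges to the next principal direction.
        a = [[a[i][j] - eigenvalue * v[i] * v[j] for j in range(n)] for i in range(n)]
    return components


def project_dense_layer(W: Matrix, b: Vector, mean: Vector, Q: Matrix) -> Tuple[Matrix, Vector]:
    """Rewrite y = W x + b as z = Q x, then y = (W Q^T) z + b_new.

    Uses x ~= Q^T Q x + (mean - Q^T Q mean), so b_new = b + W (mean - Q^T Q mean).
    """
    k, n = len(Q), len(mean)
    w_small = [[dot(w_row, q) for q in Q] for w_row in W]  # m-by-k
    coords = [dot(q, mean) for q in Q]
    residual = [mean[j] - sum(coords[c] * Q[c][j] for c in range(k)) for j in range(n)]
    b_new = [b_r + dot(w_row, residual) for w_row, b_r in zip(W, b)]
    return w_small, b_new


def projected_forward(Q: Matrix, w_small: Matrix, b_new: Vector, x: Vector) -> Vector:
    z = [dot(q, x) for q in Q]  # k-dimensional projected input
    return [dot(row, z) + bias for row, bias in zip(w_small, b_new)]


# Toy data: inputs lie on the line (1, 2) + t * (1, 1).
samples = [[1.0 + t, 2.0 + t] for t in (-2.0, -1.0, 0.0, 1.0, 2.0)]
mean, cov = covariance(samples)
Q = top_eigenvectors(cov, k=1)
W, b = [[1.0, 0.0], [2.0, -1.0]], [0.0, 1.0]
w_small, b_new = project_dense_layer(W, b, mean, Q)
print(projected_forward(Q, w_small, b_new, [1.5, 2.5]))  # close to the exact W x + b = [1.5, 1.5]
```

The projected layer stores `k*n + m*k` weights instead of `m*n`, so it saves memory only when `k*(m + n) < m*n`. The toy 2-by-2 layer checks correctness rather than savings: because the samples and the test input lie on the same line, a single principal component reproduces the original output.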

Quantizing the weights, biases, and activations of layers to reduced-precision scaled integer data types. You can then generate C/C++, CUDA, or HDL code from the quantized network for deployment to GPUs, FPGAs, or CPUs.
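As a hedged illustration of scaled-integer quantization, the Python sketch below quantizes a vector of weights to signed 8-bit integers with a single power-of-two scale `2**-f`, where the fraction length `f` is chosen from the largest magnitude so that no value overflows. The symmetric power-of-two scheme, the round-half-to-even rounding of Python's `round`, and the helper names are assumptions for illustration; the toolbox's exact scaling and rounding rules may differ.

```python
import math
from typing import List, Tuple


def fraction_length(max_abs: float, word_length: int = 8) -> int:
    """Largest fraction length f such that max_abs * 2**f fits in a signed word."""
    int_max = 2 ** (word_length - 1) - 1  # 127 for 8 bits
    if max_abs == 0.0:
        return word_length - 1
    return math.floor(math.log2(int_max / max_abs))


def quantize(values: List[float], word_length: int = 8) -> Tuple[List[int], int]:
    """Map floats to signed integers that represent value / 2**-f."""
    f = fraction_length(max(abs(v) for v in values), word_length)
    lo, hi = -(2 ** (word_length - 1)), 2 ** (word_length - 1) - 1
    # Clamping is a safety net; with f chosen from the maximum it should not trigger.
    ints = [min(hi, max(lo, round(v * 2 ** f))) for v in values]
    return ints, f


def dequantize(ints: List[int], f: int) -> List[float]:
    """Recover approximate real values from the stored integers."""
    return [q * 2.0 ** -f for q in ints]


weights = [0.5, -0.25, 0.9, -1.0]
q, f = quantize(weights)
print(q, f)              # [32, -16, 58, -64] 6
print(dequantize(q, f))  # [0.5, -0.25, 0.90625, -1.0]
```

Each value now occupies 1 byte instead of the 4 bytes of a single-precision float, and the reconstruction error is at most half a quantization step, `2**-(f+1)`. A power-of-two scale turns rescaling into a bit shift on integer hardware, at the cost of sometimes leaving part of the integer range unused (here the values only reach ±64).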

For a detailed overview of the compression techniques available in Deep Learning Toolbox Model Quantization Library, see Reduce Memory Footprint of Deep Neural Networks.

Functions

Apps

| App | Description |
| --- | --- |
| Deep Network Quantizer | Quantize deep neural network to 8-bit scaled integer data types |

Topics

Overview

- Reduce Memory Footprint of Deep Neural Networks

Learn about neural network compression techniques, including pruning, projection, and quantization.

Pruning

- Analyze and Compress 1-D Convolutional Neural Network

Analyze a 1-D convolutional network for compression and compress it using Taylor pruning and projection. (Since R2024b)

- Parameter Pruning and Quantization of Image Classification Network

Use parameter pruning and quantization to reduce network size.

- Prune Image Classification Network Using Taylor Scores

This example shows how to reduce the size of a deep neural network using Taylor pruning.

- Prune Filters in a Detection Network Using Taylor Scores

This example shows how to reduce network size and increase inference speed by pruning convolutional filters in a you only look once (YOLO) v3 object detection network.

- Prune and Quantize Convolutional Neural Network for Speech Recognition

Compress a convolutional neural network (CNN) to prepare it for deployment on an embedded system.

Projection and Knowledge Distillation

- Compress Neural Network Using Projection

This example shows how to compress a neural network using projection and principal component analysis.

- Evaluate Code Generation Inference Time of Compressed Deep Neural Network

This example shows how to compare the inference time of a compressed deep neural network for battery state of charge estimation. (Since R2023b)

- Train Smaller Neural Network Using Knowledge Distillation

This example shows how to reduce the memory footprint of a deep learning network by using knowledge distillation. (Since R2023b)
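Knowledge distillation trains a small student network to match a larger teacher network. The Python sketch below shows one widely used loss, the formulation of Hinton et al., which combines cross-entropy on the true label with a temperature-softened Kullback-Leibler term between teacher and student predictions. The temperature `T = 4`, the weight `alpha = 0.5`, and the helper names are illustrative assumptions; the example linked above may use a different loss or weighting.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() stable."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      label: int, temperature: float = 4.0, alpha: float = 0.5) -> float:
    """alpha * hard-label cross-entropy + (1 - alpha) * T^2 * KL(teacher || student)."""
    hard = -math.log(softmax(student_logits)[label])
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(ps))
             for pt, ps in zip(p_teacher, p_student) if pt > 0.0)
    return alpha * hard + (1.0 - alpha) * temperature ** 2 * kl


teacher = [4.0, 1.0, 0.5]  # toy logits from a large network
student = [2.0, 1.0, 0.0]  # toy logits from a small network
print(distillation_loss(student, teacher, label=0))
```

Raising the temperature flattens both distributions, so the student also learns from the teacher's relative scores for the wrong classes; the `T**2` factor keeps the soft term's gradients on a comparable scale as `T` changes.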

Quantization

- Quantization of Deep Neural Networks

Overview of the deep learning quantization tools and workflows.

- Quantization Workflow Prerequisites

Products required for the quantization of deep learning networks.

- Prepare Data for Quantizing Networks

Supported datastores for quantization workflows.

- Quantize Multiple-Input Network Using Image and Feature Data

Quantize a network that has multiple inputs and uses both image and feature data.

- Export Quantized Networks to Simulink and Generate Code

Export a quantized neural network to Simulink and generate code from the exported model.

Quantization for GPU Target

- Generate INT8 Code for Deep Learning Networks (GPU Coder)

Quantize and generate code for a pretrained convolutional neural network.

- Quantize Residual Network Trained for Image Classification and Generate CUDA Code

This example shows how to quantize the learnable parameters in the convolution layers of a deep learning neural network that has residual connections and has been trained for image classification with CIFAR-10 data.

- Quantize Layers in Object Detectors and Generate CUDA Code

This example shows how to generate CUDA® code for an SSD vehicle detector and a YOLO v2 vehicle detector that performs inference computations in 8-bit integers for the convolutional layers.

- Quantize Semantic Segmentation Network and Generate CUDA Code

Quantize a convolutional neural network trained for semantic segmentation and generate CUDA code.

Quantization for FPGA Target

- Quantize Network for FPGA Deployment (Deep Learning HDL Toolbox)

Reduce the memory footprint of a deep neural network by quantizing the weights, biases, and activations of convolution layers to 8-bit scaled integer data types.

- Classify Images on FPGA Using Quantized Neural Network (Deep Learning HDL Toolbox)

This example shows how to use Deep Learning HDL Toolbox™ to deploy a quantized deep convolutional neural network (CNN) to an FPGA.

- Classify Images on FPGA by Using Quantized GoogLeNet Network (Deep Learning HDL Toolbox)

This example shows how to use Deep Learning HDL Toolbox™ to deploy a quantized GoogLeNet network to classify an image.

Quantization for CPU Target

- Generate int8 Code for Deep Learning Networks (MATLAB Coder)

Quantize and generate code for a pretrained convolutional neural network.

- Generate INT8 Code for Deep Learning Network on Raspberry Pi (MATLAB Coder)

Generate code for a deep learning network that performs inference computations in 8-bit integers.