Understanding the Particle Filter | Autonomous Navigation, Part 2

From the series: Navigazione autonoma

Brian Douglas, MathWorks

This video presents a high-level understanding of the particle filter and shows how it can be used in Monte Carlo Localization to determine the pose of a mobile robot inside a building.

We’ll cover why the particle filter is better suited for this type of problem than the traditional Kalman filter because of its ability to handle non-Gaussian probability distributions.

Published: 15 Jul 2020

In the first video, we talk about what autonomous navigation is and how if can be applied to different types of autonomous vehicles. In this video, we’re going to look at one part of the autonomous navigation problem and show how you can estimate the position and orientation of a mobile robot using a particle filter. We’re going to go through the same localization approach as demonstrated the MATLAB example, Localize TurtleBot using Monte Carlo Localization. This example simulates a TurtleBot moving around in an office building, taking measurements of the environment and estimating it’s location. If you’re not familiar with a particle filter it may be confusing as to why the solution has a bunch of blue dots appearing and jumping around each time step, and then somehow converging on the real location of the TurtleBot. What is the filter doing and how does this seemingly random set of dots help us determine the location of a robot? That’s what we’re going to cover, so I hope you stick around for it. I’m Brian, and welcome to a MATLAB Tech Talk.

Let’s set up the localization problem. We have a robot, the TurtleBot, that is wandering around inside an office building. The robot is given a map of the building, so it knows the general layout of the walls and furniture but it doesn’t know initially where it is within the map - it could be anywhere. Therefore, the problem is that the robot needs to use its sensors and motion models to estimate its pose - that is determine its position and orientation within the building.

This robot has a Lidar sensor that returns the distance to objects within the field of view. So, if the sensor is looking at a corner and two doors, it might return a point cloud of dots that look like this. But measurements are noisy so it actually might look something like this. Now we can match this pattern to the map of the environment and determine where the robot is.

Now, in addition to a noisy measurement, the robot also has a way to deadreckon its position using odometry data. Deadreckoning is when a future position is calculated using a past position and relative measurements, like velocity and angular rate. So if you know the robot is facing north and moving at 1 meter per second, after 3 seconds you can estimate that the position is 3 meters North of where it used to be and you can have some confidence this is the case without having to check the environment again. Deadreckoning can be used over shorter time frames with a lot of success but due to noise in the relative measurements, over time the error grows and needs to be corrected by measuring where it is relative to the environment. For a more in depth understanding of deadreckoning, check out sensor fusion and tracking video number 3, linked below, where we combine the absolute measurement from a GPS with the relative measurement from an IMU.

So we have two possible ways to determine location; we have a noisy lidar sensor that senses features that we can compare to a map of the environment and we have noisy odometry data that estimates how the robot is moving. Now, if you are familiar with the Kalman filter, you might recognize that blending a noisy measurement with a noisy process model is exactly what it’s used for.

And if you’re not familiar with Kalman filters, you can get up to speed by watching the MATLAB tech talk series on them that I’ve linked to in the description below, but you shouldn’t need too much experience with them to understand the rest of this video.

One drawback of a Kalman filter that is going to be important for us is that it expects probability distributions to be Gaussian. So, for our problem the random deadreckoning noise needs to be Gaussian and the lidar measurement noise needs to be Gaussian. Which both might actually be, or at least close enough to still use a Kalman filter. But importantly, the probability distribution of the estimated state of the robot must also be Gaussian, which we’ll see in just a bit is definitely not the case for our localization example. Therefore, we need an estimation filter that can handle non Gaussian probability distributions. And that’s where the particle filter comes in.

To understand how the particle filter is going to solve our localization this problem we should try to imagine ourselves as the robot and figure out how we would use lidar to determine where we are on a very simple map. At the beginning, we have no clue where we are in the room. We might say that it is equally probable that we are anywhere on the map and facing any direction. Now, we measure the scene with the lidar sensor and receive the result. It looks like we’re looking at a wall since all of the measurements are basically in a line and from the measurement we can tell about how far away we are from the wall. We can’t tell from this alone our exact location because there are many walls in this room, and we might be facing any one of them, or anywhere along each of the walls. But we can tell that we’re probably not looking at a corner or the door, and we’re probably not in the middle of the room since the wall is so close.

So, this first measurement constrains us to maybe one of these positions and orientations. However, since the sensor has some noise and error, we might claim that we could be a little closer or further away than the measurement states. So, we can expand the possible locations accordingly and say that we have the most confidence that we’re in one of these locations. Now, we can’t say for sure that we’re not in the middle of the room since it’s possible that we’re looking at a temporary flat obstacle that wasn’t on the map. Likely it’s a lower probability that that’s the case, but still possible.

Therefore, after this first measurement, we may say that the probability distribution of where we are looks something like this. With the brighter yellow locations meaning higher probability, and the darker locations are lower probability. This is already a very non Gaussian distribution so, you can see how easily we can get ourselves into a non Gaussian situation when we’re dealing with localization like this.

Ok, so intuitively, it makes sense that we can’t be certain where we are from this single measurement. But let’s say that this is where we actually are within the room. Again, we’re still just looking at this flat featureless wall. As a person, it makes sense that if we moved around to see different features, we could start to narrow in on exactly where we are by removing the locations that don’t satisfy all of the measurements we see along the way. So, if we turn to the left and see a corner, then keep turning and see another corner, and then we see the door, and we would be able to use our memory to start to piece together where we are on the map based on what we’ve seen, which are the lidar the measurements, and the estimate of how we moved through the environment - which is direction we turned and about how far we walked.

There is only one place on this map where a motion like this will see exactly two corners and then end at a door. So this must have been the path we took and this must be where we are now.

So, this all makes intuitive sense, but how can we code this kind of understanding for our robot? How can it remember multiple successive measurements in a way that allows it to hone in on its exact location over time? Well as you probably expect, since I already told you, one popular way is with a particle filter.

We’re going to walk through a really basic understanding of how particles filters work. The goal of this section is to give you some intuition about the filter and not to go too in depth into any of the mathematics. If you want more details about the filter, I’ve left some great resources in the description. Alright, let’s get started.

Remember, at the start the robot has a map of the room but nothing else and so there is equal probability that the robot is anywhere on the map and facing any direction. So, rather than trying to capture this probability with some smoothly defined function, we can approximate the probability distribution by randomly generating a number of discrete poses. Here I’m uniformly randomizing the x and y location and uniformly randomizing the orientation using 50 different poses. This captures the uniform probability distribution across the entire map.

Each one of these poses, or particles, is saved in the filter as a possible estimate of the state. The more particles, the closer they represent the true probability distribution, but the more time it takes to do all of the calculations. So, there is a trade off there between accuracy and speed. For this thought exercise, 50 particles will be enough, but you may need hundreds or thousands depending on size of the map and the shape of the probability distribution you’re trying to represent.

Now, a bunch of random guesses of system state doesn’t seem like it helps us much since so many of them clearly have to be wrong. But we can start to remove some of the wrong guesses because at this point we get a lidar measurement and it returns, say a pattern that looks like a wall. Now, the filter can go through each of our particles one by one and determine using the map, what the lidar sensor would have returned if that pose was the real one. Then it can compare the two scenes and determine how likely it is that that particle is the real system state. The closer the scenes match, the higher the probability. For example, this particle would return a scene that is much close to what we saw than say, this particle would.

I’ll enlarge the particles that have a high probability of being correct so we can see them better, and I’ll shrink the particles that have a lower probability. So, as we saw before after just a single measurement our probability distribution is no longer uniform, but is skewed toward these more probable poses.

Now, we don’t want to keep all of the low probability particles in the filter since that would use up computational resources on unlikely poses and we could also get into a situation where they cause the filter to never converge. Therefore, we’ll resample the particles according to the new probability distribution. This will place more particles in the poses that are more likely and less particles everywhere else. This random discrete resampling of the probability distribution is why this type of localization is also referred to as Monte Carlo Localization.

At this point, the robot is moving through the room, it’s following the circular path that I showed earlier, and it’s dead reckoning its relative position through onboard odometry. So the robot has a sense of how it’s moving between lidar measurements. We can apply this estimated motion to every single particle in the filter. Basically, we’re predicting where each of our possible poses go if they moved in the same relative way as the robot.

Of course, I mentioned that deadreckoning is a noisy process so we have some level of uncertainty in the estimated motion from the robot. Therefore, we don’t propagate each particle exactly with the estimated motion, but instead add additional random motion according to the process noise. Some particles might move a little faster, some a little slower, some turn more and others less. So, here again, if the process noise is non Gaussian, the particle filter can handle that, at least as long as there are enough particles to accurate represent the distribution.

Now, we can do the same step as before. We measure the scene with the lidar sensor, and figure out which of these new particles are most likely to generate a similar measurement. The robot is looking at a corner now which is good because that’s a relatively unique feature in this map. But it doesn’t narrow it down completely. You can see that this particle is looking at a corner and this particle is looking at a different corner. So, we can’t tell just yet which is the real observed corner. Once again I’ll enlarge the most probable particles and shrink the less probable particles. And we can get a sense of how the probability distribution has changed. Now, it’s bi-modal where there are two main possible locations where the robot could be and everywhere else is a much lower probability.

Once again, we can move onto the next generation of particles by generating a new batch a poses according to this probability distribution. But we can start to be a little smarter about it. For the first two generations, I used 50 particles so that I could accurately represent the large and complex distributions. However, now that we’re honing in on the real location of the robot and the distributions are getting narrower it doesn’t take as many particles to fully represent them. We could still use 50, but that would just slow the filter down, possibly without adding too much value. Therefore, there is this idea of adaptive monte carlo localization, or AMCL, which recalculates the number of particles after each generation so that you’re not wasting computational resources. You do have to be careful about going too low with the number of particles because the filter may get over confident in its solution and misrepresent the true probability distribution, resulting in it converging on the wrong pose. So, again, there’s a trade off here. The MATLAB TurtleBot example uses this Adaptive Monte Carlo Localization and there’s a link below if you want to know the details of how this resizing is accomplished.

OK, now each generation is exactly the same as before. We model the motion of the robot using odometry, apply it to the particles, take another lidar measurement, and determine which particles are most likely. Then we resample based on this new probability distribution and start again. And this iterative process is how we can use particles to converge over time on the actual pose of the robot. Even in a room that is as featureless as this rectangle.

So, now if we revisit the MATLAB example from the beginning of this video, it should make a lot more sense as to why these blue dots are here, they are the particles, and why they are moving around each iteration, and why the number of particles is getting smaller as it converges over time. This is the particle filter at work in the adaptive Monte Carlo Localization approach.

Now, I think a good way to get a better feel for how this all works, and how you can implement it in MATLAB is to just play around with this example. You can change some of the filter parameters and see how it impacts the solution and you can check out the different functions that implement each of the ideas that we just talked about. Then, when you go to learn the mathematics behind it, you’ll already have this intuitive feel for how the filter works and hopefully the math becomes easier.

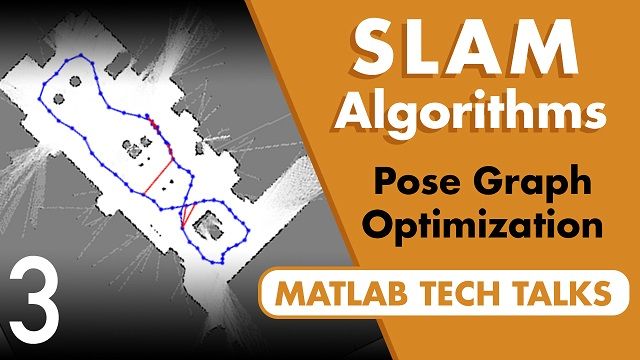

Alright, in this video, we only looked at localization assuming we already had a map that we could trust. But what if that’s not the case? What if we don’t have a map, or if the environment is constantly changing? Well, in the next video we’re going to look at the SLAM problem. Simultaneously doing localization and mapping.

So, if you don’t want to miss future Tech Talk videos, don’t forget to subscribe to this channel. And you want to check out my channel, control system lectures, I cover more control theory topics there as well. Thanks watching, and I’ll see you next time.