Tuning

To learn how to set options using the trainingOptions function, see Set Up Parameters and Train Convolutional Neural Network. After you identify some good starting options, you can automate sweeping of hyperparameters or try Bayesian optimization using Experiment Manager.
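
As a rough illustration of what a manual hyperparameter sweep can look like, the following sketch trains a small network on the digits data set that ships with Deep Learning Toolbox for several initial learning rates and compares test accuracy. The network architecture, the candidate learning rates, and the two-epoch budget are assumptions chosen only to keep the example short. The sketch also assumes R2024a or later, because it uses minibatchpredict and scores2label. It is not a description of how Experiment Manager works internally.

```matlab
% Load the example digit images (28-by-28-by-1) and categorical labels.
[XTrain, TTrain] = digitTrain4DArrayData;
[XTest, TTest] = digitTest4DArrayData;
classNames = categories(TTrain);

% Small illustrative network (assumed architecture, not a recommendation).
layers = [
    imageInputLayer([28 28 1])
    convolution2dLayer(3, 8, Padding="same")
    batchNormalizationLayer
    reluLayer
    fullyConnectedLayer(numel(classNames))
    softmaxLayer];

% Candidate initial learning rates to sweep (assumed values).
learnRates = [0.001 0.01 0.1];
accuracy = zeros(size(learnRates));

for k = 1:numel(learnRates)
    % Only the initial learning rate changes between runs.
    options = trainingOptions("sgdm", ...
        InitialLearnRate=learnRates(k), ...
        MaxEpochs=2, ...
        Shuffle="every-epoch", ...
        Verbose=false);

    net = trainnet(XTrain, TTrain, layers, "crossentropy", options);

    % Evaluate on the held-out test images.
    scores = minibatchpredict(net, XTest);
    YTest = scores2label(scores, classNames);
    accuracy(k) = mean(YTest == TTest);
end

[bestAccuracy, idx] = max(accuracy);
fprintf("Best initial learning rate: %g (test accuracy %.3f)\n", ...
    learnRates(idx), bestAccuracy);
```

Because weights are initialized randomly, the accuracies vary from run to run, so a sweep like this is best read as a coarse comparison rather than a precise ranking.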

Investigate network robustness by generating adversarial examples. You can then use the fast gradient sign method (FGSM) for adversarial training to train a network that is robust to adversarial perturbations.
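
For reference, the standard FGSM perturbation can be written as follows. The symbols are introduced here for illustration and do not appear elsewhere on this page: $X$ is the input, $T$ the target label, $L$ the training loss, and $\epsilon$ a small step size that controls the perturbation magnitude.

$$
X_{\mathrm{adv}} = X + \epsilon \cdot \operatorname{sign}\left(\nabla_X L(X, T)\right)
$$

Each input element moves by exactly $\epsilon$ in the direction that increases the loss. Adversarial training in this style then includes such perturbed inputs $X_{\mathrm{adv}}$ when updating the network.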

Apps

| Deep Network Designer | Design and visualize deep learning networks |

Objects

| trainingProgressMonitor | Monitor and plot training progress for deep learning custom training loops (Since R2022b) |

Functions

| trainingOptions | Options for training deep learning neural network |
| trainnet | Train deep learning neural network (Since R2023b) |

Topics

- Set Up Parameters and Train Convolutional Neural Network

Learn how to set up training parameters for a convolutional neural network.

- Deep Learning Using Bayesian Optimization

This example shows how to apply Bayesian optimization to deep learning and find optimal network hyperparameters and training options for convolutional neural networks.

- Detect Issues During Deep Neural Network Training

This example shows how to automatically detect issues while training a deep neural network.

- Train Deep Learning Networks in Parallel

This example shows how to run multiple deep learning experiments on your local machine.

- Train Network Using Custom Training Loop

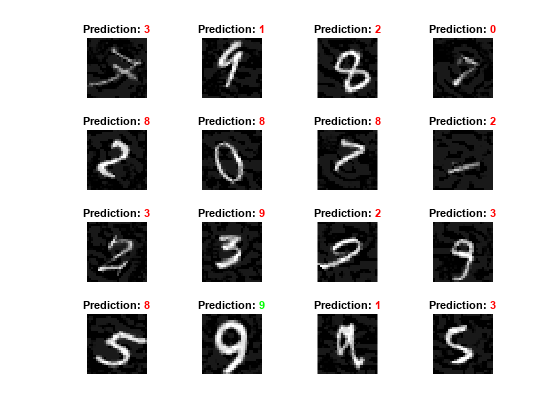

This example shows how to train a network that classifies handwritten digits with a custom learning rate schedule.

- Compare Activation Layers

This example shows how to compare the accuracy of training networks with ReLU, leaky ReLU, ELU, and swish activation layers.

- Deep Learning Tips and Tricks

Learn how to improve the accuracy of deep learning networks.

- Speed Up Deep Neural Network Training

Learn how to accelerate deep neural network training.

- Profile Your Deep Learning Code to Improve Performance

This example shows how to profile deep learning training code to identify and resolve performance issues. (Since R2024b)

- Specify Custom Weight Initialization Function

This example shows how to create a custom He weight initialization function for convolution layers followed by leaky ReLU layers.

- Compare Layer Weight Initializers

This example shows how to train deep learning networks with different weight initializers.

- Create Custom Deep Learning Training Plot

This example shows how to create a custom training plot that updates at each iteration during training of deep learning neural networks using trainnet. (Since R2023b)

- Custom Stopping Criteria for Deep Learning Training

This example shows how to stop training of deep learning neural networks based on custom stopping criteria using trainnet. (Since R2023b)
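
To give a flavor of the custom weight initialization topic listed above, here is a minimal sketch of a He-style initializer adjusted for leaky ReLU, which samples weights from a normal distribution with variance 2/((1 + a^2) n), where a is the leaky ReLU scale and n is the number of inputs to each filter. The helper name leakyHeInitializer, the scale value, and the layer sizes are hypothetical choices made for this illustration. The linked example may differ in its details.

```matlab
% Leaky ReLU slope for negative inputs (assumed value for illustration).
scale = 0.1;

% Pass the custom initializer to a convolution layer as a function handle.
% The software calls the handle with the size of the weights array.
layers = [
    imageInputLayer([28 28 1])
    convolution2dLayer(3, 16, Padding="same", ...
        WeightsInitializer=@(sz) leakyHeInitializer(sz, scale))
    leakyReluLayer(scale)
    fullyConnectedLayer(10)
    softmaxLayer];

function weights = leakyHeInitializer(sz, scale)
% Hypothetical helper. For a 2-D convolution layer, sz is
% [filterHeight filterWidth numChannels numFilters].

    % Number of inputs feeding each filter.
    numIn = prod(sz(1:3));

    % He variance adjusted for the leaky ReLU negative slope.
    varWeights = 2 / ((1 + scale^2) * numIn);

    % Sample from a zero-mean normal distribution with that variance.
    weights = randn(sz) * sqrt(varWeights);
end
```

With scale set to 0, the variance reduces to 2/n, which is the usual He initialization for plain ReLU layers.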